In this tutorial, we demonstrate how to build a powerful, intelligent question-answering system by combining the Tavily Search API, Chroma, Google Gemini LLMs, and the LangChain framework. The pipeline uses Tavily for real-time web search, a Chroma vector store as a semantic document cache, and a Gemini model for contextual response generation. These tools are integrated through LangChain's modular components, such as RunnableLambda, ChatPromptTemplate, ConversationBufferMemory, and GoogleGenerativeAIEmbeddings. The system goes beyond simple Q&A by introducing a hybrid retrieval mechanism that checks the cache for matching documents before invoking a fresh web search. Retrieved documents are formatted, summarized, and passed to a structured LLM prompt with attention to source attribution, conversation history, and confidence scoring. Key features such as advanced prompt engineering, sentiment and entity analysis, and dynamic vector store updates make this pipeline suitable for advanced use cases such as research assistance, domain-specific summarization, and intelligent agents.

!pip install -qU langchain-community tavily-python langchain-google-genai streamlit matplotlib pandas tiktoken chromadb langchain_core pydantic langchain

We install and upgrade the set of libraries required to build an advanced AI search assistant. It includes tools for retrieval (tavily-python, chromadb), LLM integration (langchain-google-genai, langchain), data handling (pandas, pydantic), visualization (matplotlib, streamlit), and tokenization (tiktoken). These components form the foundation of a real-time, context-aware QA system.

import os
import getpass
import pandas as pd
import matplotlib.pyplot as plt
import numpy as np
import json
import time
from typing import List, Dict, Any, Optional
from datetime import datetime

We import the essential Python libraries used throughout the notebook. These include standard-library modules for environment variables, secure input, time tracking, and type hints (os, getpass, time, typing, datetime). We also bring in core data science tools (pandas, matplotlib, and numpy) for data handling, visualization, and numerical computation, along with json for parsing structured data.

if "TAVILY_API_KEY" not in os.environ:
    os.environ["TAVILY_API_KEY"] = getpass.getpass("Enter Tavily API key: ")

if "GOOGLE_API_KEY" not in os.environ:
    os.environ["GOOGLE_API_KEY"] = getpass.getpass("Enter Google API key: ")

import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(name)s - %(levelname)s - %(message)s")
logger = logging.getLogger(__name__)

We initialize the API keys for Tavily and Google Gemini, prompting the user only if they are not already set in the environment, which keeps access to the external services secure and repeatable. We also configure a standardized logging setup with Python's logging module, which helps monitor the execution flow and capture debugging or error messages throughout the notebook.
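The "prompt only if missing" pattern can be factored into a small helper. The following is a minimal sketch, not part of the original notebook: `ensure_env` and `DEMO_API_KEY` are hypothetical names, and unlike the `not in os.environ` check it also treats an empty value as missing. The prompt function is injectable so the demo runs without user input.

```python
import getpass
import os

def ensure_env(name, ask=getpass.getpass):
    """Return os.environ[name], prompting for it only when it is missing or empty."""
    if not os.environ.get(name):
        os.environ[name] = ask(f"Enter {name}: ")
    return os.environ[name]

# Demo with a stubbed prompt (hypothetical variable name) so nothing blocks on input.
os.environ.pop("DEMO_API_KEY", None)
first = ensure_env("DEMO_API_KEY", ask=lambda prompt: "secret-from-prompt")
second = ensure_env("DEMO_API_KEY", ask=lambda prompt: "never-asked")
print(first == second)  # True: the second call reuses the stored value
```

In a notebook, calling `ensure_env("TAVILY_API_KEY")` with the default `ask` would behave like the two `if` blocks above.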

from langchain_community.retrievers import TavilySearchAPIRetriever
from langchain_community.vectorstores import Chroma
from langchain_core.documents import Document
from langchain_core.output_parsers import StrOutputParser, JsonOutputParser
from langchain_core.prompts import ChatPromptTemplate, SystemMessagePromptTemplate, HumanMessagePromptTemplate
from langchain_core.runnables import RunnablePassthrough, RunnableLambda
from langchain_google_genai import ChatGoogleGenerativeAI, GoogleGenerativeAIEmbeddings
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.chains.summarize import load_summarize_chain
from langchain.memory import ConversationBufferMemory

We import key components from the LangChain ecosystem and its integrations. This brings in TavilySearchAPIRetriever for real-time web search, Chroma for vector storage, and the Google Generative AI modules for the chat and embedding models. Core LangChain modules such as ChatPromptTemplate, RunnableLambda, ConversationBufferMemory, and the output parsers enable flexible prompt construction, memory handling, and pipeline execution.

class SearchQueryError(Exception):
    """Exception raised for errors in the search query."""
    pass

def format_docs(docs):
    formatted_content = []
    for i, doc in enumerate(docs):
        metadata = doc.metadata
        source = metadata.get('source', 'Unknown source')
        title = metadata.get('title', 'Untitled')
        score = metadata.get('score', 0)

        # One labeled block per document so the LLM can cite it by number.
        formatted_content.append(
            f"Document {i+1} [Score: {score:.2f}]:\n"
            f"Title: {title}\n"
            f"Source: {source}\n"
            f"Content: {doc.page_content}\n"
        )

    return "\n\n".join(formatted_content)

We define two essential components for search and document handling. The SearchQueryError class is a custom exception for handling invalid or failed search queries gracefully. The format_docs function processes a list of retrieved documents by extracting metadata such as the title, source, and relevance score and formatting them into a clean, readable string.
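To see what the formatted context looks like without installing LangChain, here is a small self-contained sketch. `Doc` is a hypothetical stand-in that only mimics the two attributes `format_docs` reads (`page_content` and `metadata`), and the sample documents and URL are made up for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Doc:
    """Hypothetical stand-in exposing the two attributes format_docs reads."""
    page_content: str
    metadata: dict = field(default_factory=dict)

def format_docs(docs):
    blocks = []
    for i, doc in enumerate(docs, start=1):
        meta = doc.metadata
        blocks.append(
            f"Document {i} [Score: {meta.get('score', 0):.2f}]:\n"
            f"Title: {meta.get('title', 'Untitled')}\n"
            f"Source: {meta.get('source', 'Unknown source')}\n"
            f"Content: {doc.page_content}\n"
        )
    return "\n\n".join(blocks)

docs = [
    Doc("First snippet.", {"title": "Example A", "source": "https://example.com/a", "score": 0.912}),
    Doc("Second snippet."),  # no metadata: the defaults are used
]
formatted = format_docs(docs)
print(formatted)
```

The second document shows the fallback values (`Untitled`, `Unknown source`, score `0.00`) that appear when a search result carries no metadata.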

class SearchResultsParser:

def parse(self, text):

try:

if isinstance(text, str):

import re

import json

json_match = re.search(r'{.*}', text, re.DOTALL)

if json_match:

json_str = json_match.group(0)

return json.loads(json_str)

return {"answer": text, "sources": [], "confidence": 0.5}

elif hasattr(text, 'content'):

return {"answer": text.content, "sources": [], "confidence": 0.5}

else:

return {"answer": str(text), "sources": [], "confidence": 0.5}

except Exception as e:

logger.warning(f"Failed to parse JSON: {e}")

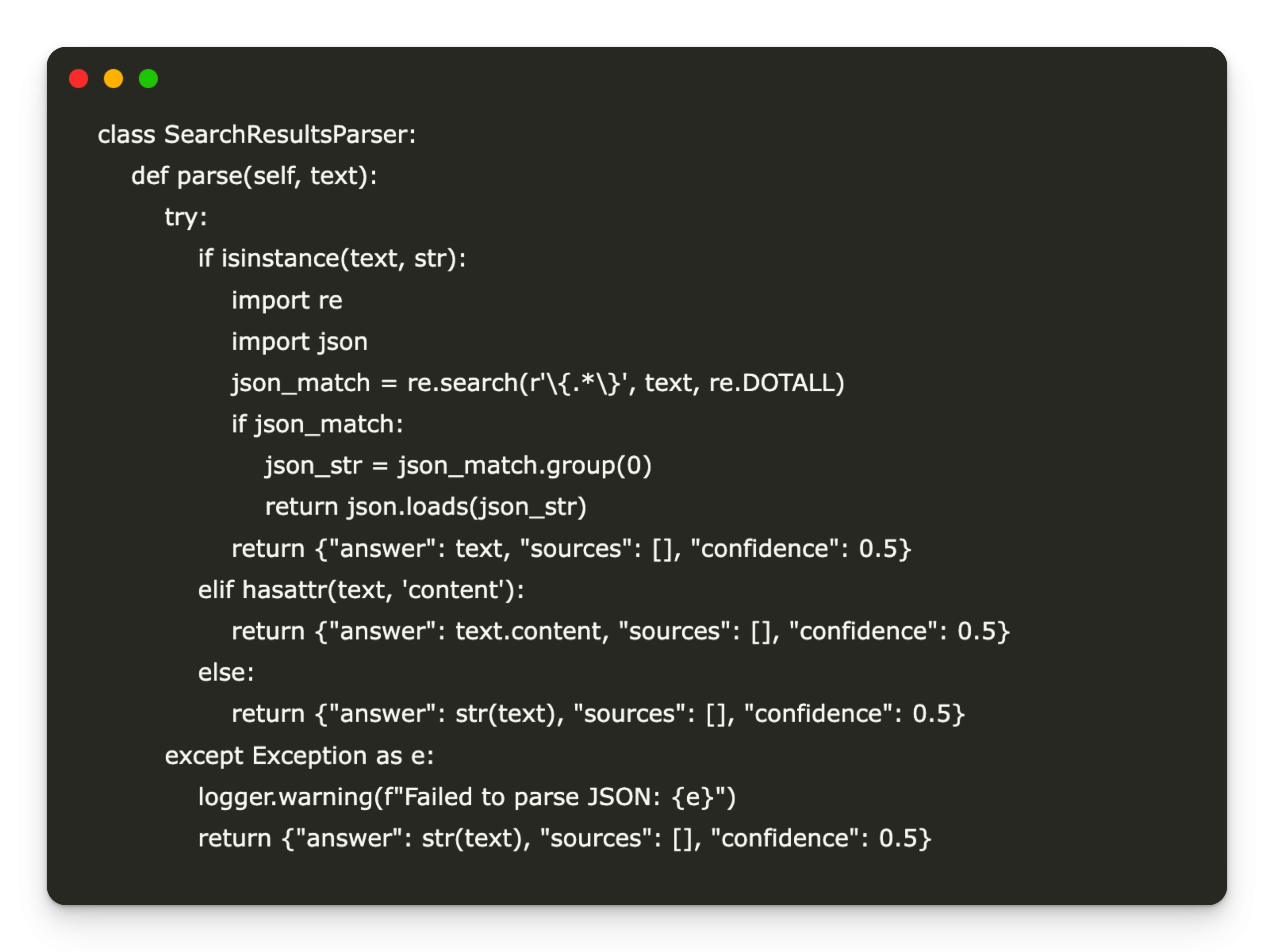

return {"answer": str(text), "sources": [], "confidence": 0.5}The SearchResult Saving Class provides a robust method of extracting structured information from LLM response. It tries to analyze a JSON-like string from the model output and return to a regular textual response format if parsing fails. It handles gracefully string outputs and message objects, ensuring a consistent downstream treatment. In case of error, it logs a warning and returns a relapse response containing the raw response, empty sources and a standard confidence score, which improves the system’s fault tolerance.
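The parse-then-fall-back behavior can be tried in isolation with the standard library. This is a minimal sketch rather than the class itself: `extract_json` is a hypothetical function name, and the sample strings are invented. It also shows one consequence of the greedy `\{.*\}` pattern: when the text contains two separate JSON objects, the match spans both, `json.loads` fails, and the fallback is returned.

```python
import json
import re

def extract_json(text, default_confidence=0.5):
    """Parse the outermost {...} span as JSON, or wrap the raw text in a fallback dict."""
    match = re.search(r"\{.*\}", text, re.DOTALL)
    if match:
        try:
            return json.loads(match.group(0))
        except json.JSONDecodeError:
            pass  # fall through to the plain-text fallback
    return {"answer": text, "sources": [], "confidence": default_confidence}

wrapped = 'Here is the result:\n{"answer": "2017", "sources": [1], "confidence": 0.9}\nThanks!'
plain = "It was released in 2017."
two_objects = 'a {"x": 1} b {"y": 2}'

print(extract_json(wrapped))
print(extract_json(plain))
print(extract_json(two_objects))
```

Only the first call returns parsed JSON; the other two return the fallback dictionary with the original text as the answer.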

class EnhancedTavilyRetriever:
    def __init__(self, api_key=None, max_results=5, search_depth="advanced", include_domains=None, exclude_domains=None):
        self.api_key = api_key
        self.max_results = max_results
        self.search_depth = search_depth
        self.include_domains = include_domains or []
        self.exclude_domains = exclude_domains or []
        self.retriever = self._create_retriever()
        self.previous_searches = []

    def _create_retriever(self):
        try:
            return TavilySearchAPIRetriever(
                api_key=self.api_key,
                k=self.max_results,
                search_depth=self.search_depth,
                include_domains=self.include_domains,
                exclude_domains=self.exclude_domains
            )
        except Exception as e:
            logger.error(f"Failed to create Tavily retriever: {e}")
            raise

    def invoke(self, query, **kwargs):
        if not query or not query.strip():
            raise SearchQueryError("Empty search query")

        try:
            start_time = time.time()
            results = self.retriever.invoke(query, **kwargs)
            end_time = time.time()

            # Record per-query metadata for later inspection and plotting.
            search_record = {
                "timestamp": datetime.now().isoformat(),
                "query": query,
                "num_results": len(results),
                "response_time": end_time - start_time
            }
            self.previous_searches.append(search_record)

            return results
        except Exception as e:
            logger.error(f"Search failed: {e}")
            raise SearchQueryError(f"Failed to perform search: {str(e)}")

    def get_search_history(self):
        return self.previous_searches

The EnhancedTavilyRetriever class is a custom wrapper around TavilySearchAPIRetriever that adds flexibility, control, and traceability to search operations. It supports advanced options such as search depth, domain inclusion/exclusion filters, and a configurable result count. The invoke method performs the web search and records each query's metadata (timestamp, response time, and number of results) for later analysis.
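The tracking logic does not depend on Tavily, so it can be illustrated with any callable. The sketch below is an assumption-labeled simplification: `TrackedSearch` is a hypothetical class, the search function is a stub that fabricates two strings per query, and `time.perf_counter` is used in place of `time.time` because it is better suited to measuring short intervals.

```python
import time
from datetime import datetime

class TrackedSearch:
    """Wrap any search callable and record timing metadata for each query."""

    def __init__(self, search_fn):
        self.search_fn = search_fn
        self.history = []

    def invoke(self, query):
        if not query or not query.strip():
            raise ValueError("Empty search query")
        start = time.perf_counter()
        results = self.search_fn(query)
        elapsed = time.perf_counter() - start
        self.history.append({
            "timestamp": datetime.now().isoformat(),
            "query": query,
            "num_results": len(results),
            "response_time": elapsed,
        })
        return results

# Stub search function standing in for a real web search.
tracked = TrackedSearch(lambda q: [f"{q} result 1", f"{q} result 2"])
results = tracked.invoke("breath of the wild")
record = tracked.history[0]
print(record["query"], record["num_results"])
```

Each record has the same keys the wrapper above stores, so a history produced this way could be passed to the same plotting code.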

class SearchCache:
    def __init__(self):
        self.embedding_function = GoogleGenerativeAIEmbeddings(model="models/embedding-001")
        self.vector_store = None
        self.text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

    def add_documents(self, documents):
        if not documents:
            return

        try:
            # Create the store on first use, then append to it afterwards.
            if self.vector_store is None:
                self.vector_store = Chroma.from_documents(
                    documents=documents,
                    embedding=self.embedding_function
                )
            else:
                self.vector_store.add_documents(documents)
        except Exception as e:
            logger.error(f"Failed to add documents to cache: {e}")

    def search(self, query, k=3):
        if self.vector_store is None:
            return []

        try:
            return self.vector_store.similarity_search(query, k=k)
        except Exception as e:
            logger.error(f"Vector search failed: {e}")
            return []

The SearchCache class implements a semantic caching layer that stores and retrieves documents using vector embeddings for efficient similarity search. It uses GoogleGenerativeAIEmbeddings to convert documents into dense vectors and stores them in a Chroma vector database. The add_documents method initializes or updates the vector store, while the search method fetches the most relevant cached documents by semantic similarity. This reduces redundant API calls and improves response times for repeated or related queries, serving as a lightweight hybrid memory layer in the AI assistant pipeline.
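To make the idea of similarity search concrete, here is a toy sketch that ranks cached texts by cosine similarity. It is not how Chroma or the Gemini embedding model work internally: `embed` is a deliberately crude word-count "embedding", `ToyVectorCache` is a hypothetical class, and the sample texts are invented.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorCache:
    def __init__(self):
        self.entries = []  # list of (vector, text) pairs

    def add(self, texts):
        self.entries.extend((embed(t), t) for t in texts)

    def search(self, query, k=3):
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [text for _, text in ranked[:k]]

cache = ToyVectorCache()
cache.add([
    "mario kart sales figures",
    "breath of the wild release date 2017",
    "python list comprehension guide",
])
top = cache.search("breath of the wild release", k=1)
print(top)
```

Note that a top-k search returns the k nearest entries even when none of them is a good match, which matters when deciding whether a cache lookup should count as a hit.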

search_cache = SearchCache()
enhanced_retriever = EnhancedTavilyRetriever(max_results=5)
memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

system_template = """You are a research assistant that provides accurate answers based on the search results provided.
Follow these guidelines:
1. Only use the context provided to answer the question
2. If the context doesn't contain the answer, say "I don't have sufficient information to answer this question."
3. Cite your sources by referencing the document numbers
4. Don't make up information
5. Keep the answer concise but complete

Context: {context}
Chat History: {chat_history}
"""

system_message = SystemMessagePromptTemplate.from_template(system_template)
human_template = "Question: {question}"
human_message = HumanMessagePromptTemplate.from_template(human_template)

prompt = ChatPromptTemplate.from_messages([system_message, human_message])

We initialize the core components of the AI assistant: a semantic SearchCache, the EnhancedTavilyRetriever for web-based querying, and a ConversationBufferMemory to preserve the chat history across turns. We also define a structured prompt using ChatPromptTemplate that instructs the LLM to act as a research assistant. The prompt enforces strict rules on factual accuracy, use of context, source citation, and concise answers, which keeps responses reliable and grounded.

def get_llm(model_name="gemini-2.0-flash-lite", temperature=0.2, response_mode="json"):
    try:
        return ChatGoogleGenerativeAI(
            model=model_name,
            temperature=temperature,
            convert_system_message_to_human=True,
            top_p=0.95,
            top_k=40,
            max_output_tokens=2048
        )
    except Exception as e:
        logger.error(f"Failed to initialize LLM: {e}")
        raise

output_parser = SearchResultsParser()

We define the get_llm function, which initializes a Google Gemini language model with configurable parameters such as the model name, temperature, and decoding settings (e.g., top_p, top_k, and max_output_tokens). It adds error handling for failed model initialization. An instance of SearchResultsParser is also created to standardize and structure the LLM's raw responses, enabling consistent downstream processing of answers and metadata.

def plot_search_metrics(search_history):
    if not search_history:
        print("No search history available")
        return

    df = pd.DataFrame(search_history)

    plt.figure(figsize=(12, 6))

    # Left panel: response time for each search.
    plt.subplot(1, 2, 1)
    plt.plot(range(len(df)), df['response_time'], marker="o")
    plt.title('Search Response Times')
    plt.xlabel('Search Index')
    plt.ylabel('Time (seconds)')
    plt.grid(True)

    # Right panel: number of results for each search.
    plt.subplot(1, 2, 2)
    plt.bar(range(len(df)), df['num_results'])
    plt.title('Number of Results per Search')
    plt.xlabel('Search Index')
    plt.ylabel('Number of Results')
    plt.grid(True)

    plt.tight_layout()
    plt.show()

The plot_search_metrics function visualizes performance trends from past queries using Matplotlib. It converts the search history into a DataFrame and draws two subplots: one shows the response time per search, and the other shows the number of results returned. This helps analyze the system's efficiency and search quality over time, so developers can fine-tune the retriever or identify bottlenecks in real-world usage.
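When a plot is more than is needed, the same search history can be reduced to a few numbers. This is a small standard-library sketch: `summarize_history` is a hypothetical helper, and the two history records are made-up values in the shape the retriever wrapper stores.

```python
from statistics import mean

def summarize_history(history):
    """Reduce a list of search records to a few headline numbers."""
    if not history:
        return None
    slowest = max(history, key=lambda h: h["response_time"])
    return {
        "searches": len(history),
        "mean_response_time": mean(h["response_time"] for h in history),
        "total_results": sum(h["num_results"] for h in history),
        "slowest_query": slowest["query"],
    }

# Made-up records for illustration only.
sample_history = [
    {"query": "breath of the wild release", "num_results": 5, "response_time": 1.0},
    {"query": "breath of the wild sales", "num_results": 3, "response_time": 3.0},
]
summary_stats = summarize_history(sample_history)
print(summary_stats)
```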

def retrieve_with_fallback(query):
    # Try the semantic cache first; only hit the web when it returns nothing.
    cached_results = search_cache.search(query)

    if cached_results:
        logger.info(f"Retrieved {len(cached_results)} documents from cache")
        return cached_results

    logger.info("No cache hit, performing web search")
    search_results = enhanced_retriever.invoke(query)

    search_cache.add_documents(search_results)

    return search_results

def summarize_documents(documents, query):
    llm = get_llm(temperature=0)

    summarize_prompt = ChatPromptTemplate.from_template(
        """Create a concise summary of the following documents related to this query: {query}

        {documents}

        Provide a comprehensive summary that addresses the key points relevant to the query.
        """
    )

    chain = (
        {"documents": lambda docs: format_docs(docs), "query": lambda _: query}
        | summarize_prompt
        | llm
        | StrOutputParser()
    )

    return chain.invoke(documents)

These two functions improve the assistant's intelligence and efficiency. The retrieve_with_fallback function implements a hybrid retrieval mechanism: it first tries to fetch semantically relevant documents from the local Chroma cache and, if none are found, falls back to a real-time Tavily web search and caches the new results for future use. Meanwhile, summarize_documents uses a Gemini LLM to generate a concise summary of the retrieved documents, guided by a structured prompt that keeps the summary relevant to the query. Together, they enable low-latency, informative, and context-aware responses.
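One design point worth illustrating: `retrieve_with_fallback` treats any non-empty cache result as a hit, and a top-k similarity search returns its nearest entries whether or not they are relevant. A possible variation, sketched here under stated assumptions, is to require a minimum similarity score before accepting a cache hit. Everything below is hypothetical: `retrieve_with_threshold`, the word-overlap scoring, and the stub web search are illustrations only. With a LangChain vector store, a method such as `similarity_search_with_relevance_scores` may be one way to obtain scores, but that is not what the original code does.

```python
def retrieve_with_threshold(query, cache_search, web_search, cache_add, min_score=0.5):
    """Use cached docs only if they score at least min_score; otherwise search and cache."""
    scored = cache_search(query)  # list of (doc, score); higher means more similar
    hits = [doc for doc, score in scored if score >= min_score]
    if hits:
        return hits, "cache"
    fresh = web_search(query)
    cache_add(fresh)
    return fresh, "web"

# Stub cache: Jaccard word overlap stands in for embedding similarity.
store = []

def cache_search(query):
    q = set(query.lower().split())
    scored = []
    for doc in store:
        d = set(doc.lower().split())
        scored.append((doc, len(q & d) / len(q | d)))
    return scored

def web_search(query):
    return [f"web result for {query}"]

sources = []
for q in ["zelda sales", "zelda sales", "python decorators"]:
    docs, source = retrieve_with_threshold(q, cache_search, web_search, store.extend, min_score=0.3)
    sources.append(source)
print(sources)  # ['web', 'cache', 'web']
```

The third query is unrelated to anything cached, so it goes to the web instead of reusing the cached Zelda result. The threshold value itself would need tuning for a real embedding model.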

def advanced_chain(query_engine="enhanced", model="gemini-1.5-pro", include_history=True):
    llm = get_llm(model_name=model)

    if query_engine == "enhanced":
        retriever = lambda query: retrieve_with_fallback(query)
    else:
        retriever = enhanced_retriever.invoke

    def chain_with_history(input_dict):
        query = input_dict["question"]
        chat_history = memory.load_memory_variables({})["chat_history"] if include_history else []

        docs = retriever(query)
        context = format_docs(docs)

        prompt_value = prompt.invoke({
            "context": context,
            "question": query,
            "chat_history": chat_history
        })

        # Call the model first, then store its reply in memory:
        # the prompt value itself has no `.content` attribute.
        response = llm.invoke(prompt_value)
        memory.save_context({"input": query}, {"output": response.content})

        return response

    return RunnableLambda(chain_with_history) | StrOutputParser()

The advanced_chain function defines a modular, end-to-end reasoning workflow for answering user queries using cached or real-time search. It initializes the specified Gemini model, selects the retrieval strategy (cache with fallback or direct search), and builds a response pipeline that incorporates chat history (if enabled), formats the documents into context, and prompts the LLM using a system-guided template. The chain also saves the interaction to memory and returns the final answer, parsed into plain text. This design allows flexible experimentation with models and retrieval strategies while maintaining conversational context.
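The order of operations in such a chain (load history, retrieve, build the prompt, call the model, then save the turn) can be shown without any external service. The sketch below is a simplified illustration, not LangChain's memory implementation: `TurnMemory`, `answer_with_history`, the stub retriever, and the stub model are all hypothetical.

```python
class TurnMemory:
    """Minimal conversation buffer: stores (question, answer) pairs in order."""

    def __init__(self):
        self.turns = []

    def save(self, question, answer):
        self.turns.append((question, answer))

    def render(self):
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)

def answer_with_history(question, retrieve, llm, memory):
    context = "\n\n".join(retrieve(question))
    prompt = (
        f"Context: {context}\n\n"
        f"Chat History: {memory.render()}\n\n"
        f"Question: {question}"
    )
    answer = llm(prompt)           # call the model first
    memory.save(question, answer)  # then record the completed turn
    return answer

seen_prompts = []

def stub_llm(prompt):
    seen_prompts.append(prompt)
    return f"stub answer {len(seen_prompts)}"

def stub_retrieve(question):
    return [f"doc about {question}"]

chat_memory = TurnMemory()
answer_with_history("first question", stub_retrieve, stub_llm, chat_memory)
answer_with_history("second question", stub_retrieve, stub_llm, chat_memory)
print(seen_prompts[1])
```

The second prompt contains the first question and its answer, which is how earlier turns reach the model on later calls.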

qa_chain = advanced_chain()

def analyze_query(query):
    llm = get_llm(temperature=0)

    analysis_prompt = ChatPromptTemplate.from_template(
        """Analyze the following query and provide:
        1. Main topic
        2. Sentiment (positive, negative, neutral)
        3. Key entities mentioned
        4. Query type (factual, opinion, how-to, etc.)

        Query: {query}

        Return the analysis in JSON format with the following structure:
        {{
            "topic": "main topic",
            "sentiment": "sentiment",
            "entities": ["entity1", "entity2"],
            "type": "query type"
        }}
        """
    )

    # SearchResultsParser is a plain class, not a Runnable, so wrap its parse method.
    # StrOutputParser first turns the model message into text so the JSON can be extracted.
    chain = analysis_prompt | llm | StrOutputParser() | RunnableLambda(output_parser.parse)

    return chain.invoke({"query": query})

print("Advanced Tavily-Gemini Implementation")
print("="*50)

query = "what year was breath of the wild released and what was its reception?"
print(f"Query: {query}")

We initialize the final components of the intelligent assistant. qa_chain is the complete reasoning pipeline, ready to process user queries using retrieval, memory, and Gemini-based response generation. The analyze_query function performs a lightweight semantic analysis of a query, extracting the main topic, sentiment, entities, and query type using the Gemini model and a structured JSON prompt. The sample query, about Breath of the Wild's release and reception, shows how the assistant is triggered and prepared for full-stack inference and semantic interpretation. The printed heading marks the start of interactive execution.

try:
    print("\nSearching for answer...")
    answer = qa_chain.invoke({"question": query})
    print("\nAnswer:")
    print(answer)

    print("\nAnalyzing query...")
    try:
        query_analysis = analyze_query(query)
        print("\nQuery Analysis:")
        print(json.dumps(query_analysis, indent=2))
    except Exception as e:
        print(f"Query analysis error (non-critical): {e}")
except Exception as e:
    print(f"Error in search: {e}")

history = enhanced_retriever.get_search_history()
print("\nSearch History:")
for i, h in enumerate(history):
    print(f"{i+1}. Query: {h['query']} - Results: {h['num_results']} - Time: {h['response_time']:.2f}s")

print("\nAdvanced search with domain filtering:")
specialized_retriever = EnhancedTavilyRetriever(
    max_results=3,
    search_depth="advanced",
    include_domains=["nintendo.com", "zelda.com"],
    exclude_domains=["reddit.com", "twitter.com"]
)

try:
    specialized_results = specialized_retriever.invoke("breath of the wild sales")
    print(f"Found {len(specialized_results)} specialized results")

    summary = summarize_documents(specialized_results, "breath of the wild sales")
    print("\nSummary of specialized results:")
    print(summary)
except Exception as e:
    print(f"Error in specialized search: {e}")

print("\nSearch Metrics:")
plot_search_metrics(history)

We demonstrate the complete pipeline in action. It runs a search through qa_chain, displays the generated answer, and then analyzes the query for sentiment, topic, entities, and type. It also retrieves and prints the search history, showing each query along with its response time and result count. Finally, it runs a domain-filtered search focused on Nintendo-related sites, summarizes the results, and visualizes search performance with plot_search_metrics, giving a comprehensive view of the assistant's capabilities in real-time use.

In conclusion, this tutorial gives users a comprehensive blueprint for building a highly capable, context-aware, and scalable RAG system that bridges real-time web intelligence with conversational AI. The Tavily Search API lets users pull fresh, relevant content directly from the web. The Gemini LLM adds robust reasoning and summarization capabilities, while LangChain's abstraction layer allows seamless orchestration between memory, embeddings, and model outputs. The implementation includes advanced features such as domain-specific filtering, query analysis (sentiment, topic, and entity extraction), and fallback strategies using a semantic vector cache built with Chroma and GoogleGenerativeAIEmbeddings. Structured logging, error handling, and analytics dashboards also provide transparency and diagnostics for real-world deployment.

Check out the Colab Notebook.

Asif Razzaq is the CEO of Marktechpost Media Inc. His latest endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
