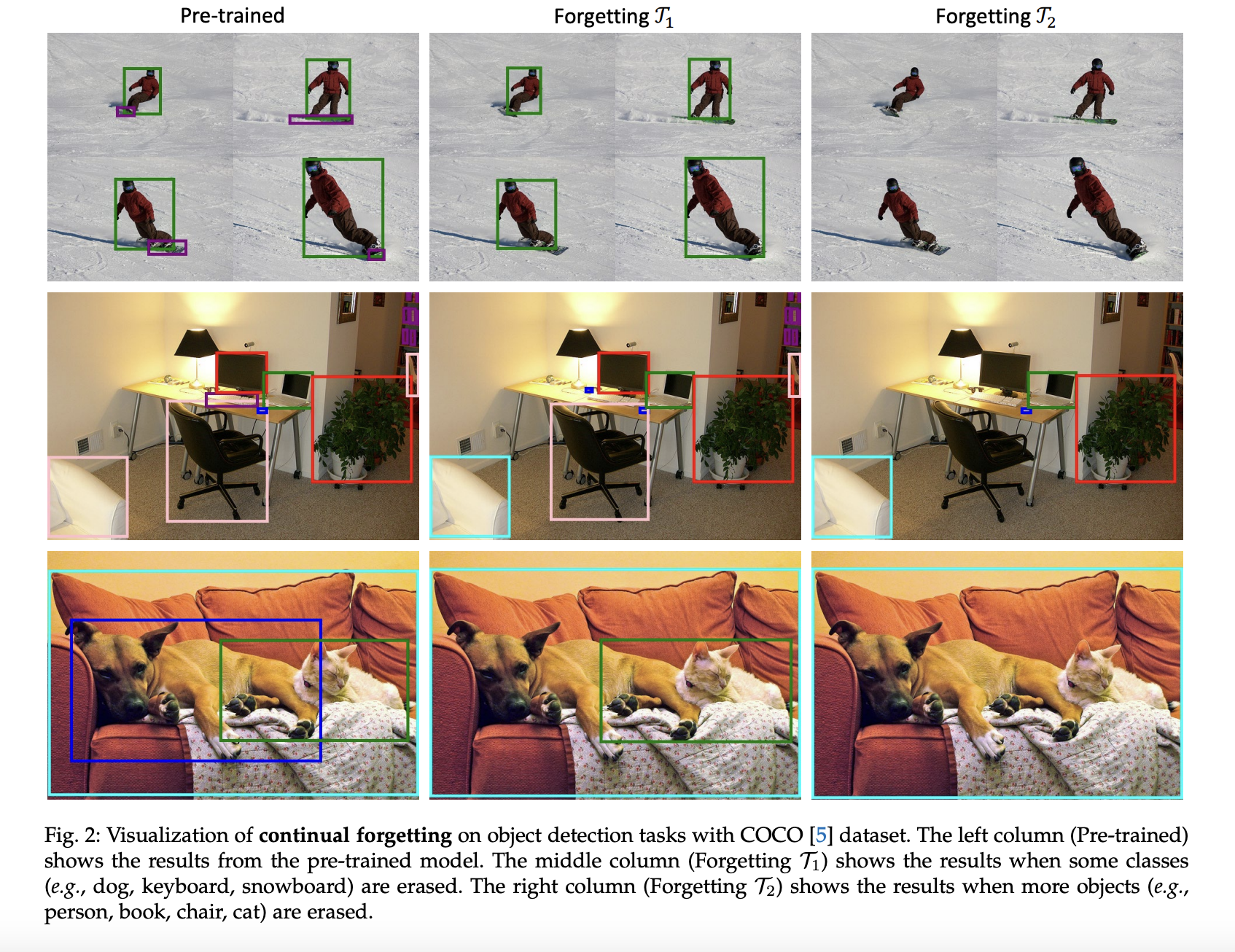

Pretrained vision models underpin modern computer vision across domains such as image classification, object detection, and image segmentation. Data now arrives in large and constantly changing volumes, so models must keep learning after deployment. At the same time, new data protection regulations require specific information to be deleted on request. Pretrained models, however, are prone to catastrophic forgetting when exposed to new data or tasks over time, and when asked to delete certain information they may also lose valuable knowledge or parameters. To tackle these problems, researchers from the Institute of Electrical and Electronics Engineers (IEEE) have developed Practical Continual Forgetting (PCF), which enables models to forget task-specific features while maintaining their performance.

Current methods to mitigate catastrophic forgetting rely on regularization techniques, replay buffers, and architectural expansion. These techniques work well but do not support selective forgetting; they also increase architectural complexity, which causes inefficiency as new parameters are added. A model must balance plasticity and stability so that it neither retains irrelevant information excessively nor loses the ability to adapt to new environments. Striking this balance is difficult in practice, which motivates a new method that offers flexible forgetting mechanisms and efficient adaptation.

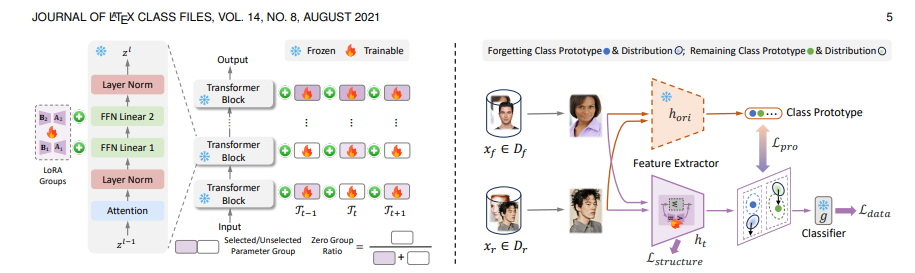

The proposed approach, Practical Continual Forgetting (PCF), addresses catastrophic forgetting while supporting selective forgetting. The framework is designed to build on the strengths of pre-trained vision models. Its methodology involves:

- Adaptive Forgetting Modules: These modules continually analyze the features the model has previously learned and discard those that have become redundant. Task-specific features that are no longer relevant are removed, while the model's broader understanding is retained so that generalization does not suffer.

- Task-Specific Regularization: PCF introduces constraints during training so that previously learned parameters are not drastically altered. The model can then adapt to new tasks with strong performance while retaining previously learned information.
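To make the regularization idea concrete, here is a minimal sketch of one common way to constrain parameter drift: an L2 penalty that pulls the current parameters toward a snapshot of the previously learned ones. This is a generic technique chosen for illustration, not the implementation described in the paper. The function names (`drift_penalty`, `regularized_loss`, `sgd_step`) and the `strength` and `lr` parameters are hypothetical, and plain Python lists stand in for model weights.

```python
from typing import List, Sequence


def drift_penalty(params: Sequence[float], anchor: Sequence[float]) -> float:
    """Squared L2 distance between current parameters and the old snapshot."""
    if len(params) != len(anchor):
        raise ValueError("params and anchor must have the same length")
    return sum((p - a) ** 2 for p, a in zip(params, anchor))


def regularized_loss(
    task_loss: float,
    params: Sequence[float],
    anchor: Sequence[float],
    strength: float = 0.1,
) -> float:
    """Task loss plus a weighted penalty for moving away from the snapshot.

    `strength` is an assumed hyperparameter: larger values favor stability,
    smaller values favor plasticity.
    """
    return task_loss + strength * drift_penalty(params, anchor)


def sgd_step(
    params: Sequence[float],
    task_grad: Sequence[float],
    anchor: Sequence[float],
    strength: float = 0.1,
    lr: float = 0.01,
) -> List[float]:
    """One gradient step on the regularized loss.

    The penalty's gradient with respect to each parameter p is
    2 * strength * (p - a), where a is the matching snapshot value.
    """
    return [
        p - lr * (g + 2.0 * strength * (p - a))
        for p, g, a in zip(params, task_grad, anchor)
    ]


if __name__ == "__main__":
    old = [1.0, 0.0]   # snapshot taken before adapting to the new task
    new = [1.0, 2.0]   # parameters after some adaptation
    print(regularized_loss(0.5, new, old, strength=0.25))
```

With `strength` set to zero the update reduces to ordinary gradient descent on the new task; increasing it trades adaptation speed for retention of earlier behavior, which is the plasticity and stability trade-off discussed above.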

To test the PCF framework, experiments were conducted on tasks such as face recognition, object detection, and image classification under different scenarios, including missing data and continual forgetting. The framework performed strongly in all of these cases, outperforming the baseline models. It also used fewer parameters, making it more efficient. The method showed robustness and practical applicability, handling rare or missing data better than other techniques.

The paper introduces the Practical Continual Forgetting (PCF) framework, which addresses the problem of continual forgetting in pretrained vision models by offering a scalable and adaptive solution for selective forgetting. It is accurate and adaptable, and it shows strong potential in privacy-sensitive and highly dynamic applications, as confirmed by strong performance metrics on various architectures. Nevertheless, the approach should be validated further on real-world datasets and in more complex scenarios to fully evaluate its robustness. Overall, the PCF framework sets a new benchmark for knowledge retention, adaptation, and forgetting in vision models, with important implications for privacy compliance and task-specific adaptability.

Check out the paper and GitHub page. All credit for this research goes to the researchers of this project.


Afeerah Naseem is a consultant intern at Marktechpost. She is pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is passionate about data science and fascinated by the role of artificial intelligence in solving real-world problems. She loves discovering new technologies and exploring how they can make everyday tasks easier and more efficient.
