Large language models (LLMs) have advanced the field of artificial intelligence and are now used in many applications. While they can simulate human language almost perfectly, they tend to fall short on response diversity. This limitation is particularly problematic in tasks that require creativity, such as synthetic data generation and storytelling, where diverse outputs are important for maintaining relevance and engagement.

One of the biggest challenges in language model optimization is the loss of response diversity caused by preference training techniques. Post-training methods such as reinforcement learning from human feedback (RLHF) and direct preference optimization (DPO) tend to concentrate probability mass on a limited number of high-reward responses. As a result, models generate repetitive outputs across different prompts, which limits their adaptability in creative applications. This decline in diversity keeps language models from functioning effectively in fields that require a broad range of outputs.

Previous preference optimization methods primarily focus on aligning models with high-quality human preferences. Supervised fine-tuning and RLHF, while effective at improving model alignment, unintentionally lead to response homogenization. Direct preference optimization (DPO) selects highly rewarded responses as preferred and low-quality ones as rejected, reinforcing the tendency of models to produce predictable outputs. Attempts to counteract this problem, such as adjusting the sampling temperature or applying KL divergence regularization, have failed to improve diversity significantly without compromising output quality.
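To make the DPO objective mentioned above concrete, here is a minimal sketch of the standard per-pair DPO loss computed from sequence log-probabilities. The function name `dpo_loss`, the default `beta=0.1`, and the example numbers are illustrative assumptions, not values taken from the research discussed here.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Per-pair DPO loss: -log(sigmoid(margin)).

    All inputs are sequence log-probabilities. The `ref_` values come from
    the frozen reference model, the others from the policy being trained.
    `beta` is a hypothetical default chosen for illustration.
    """
    # How much more the policy favors the chosen response over the rejected
    # one, measured relative to the reference model.
    margin = beta * ((logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected))
    # -log(sigmoid(m)) equals softplus(-m).
    return softplus(-margin)


# A policy identical to the reference, then one that has shifted toward the
# chosen response. The second loss is lower.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
print(dpo_loss(-8.0, -12.0, -10.0, -10.0))
```

Because the loss keeps falling as the policy shifts probability toward the chosen response, always picking the single highest-reward response as "chosen" pushes the model toward a narrow set of outputs.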

Researchers from Meta, New York University, and ETH Zurich have introduced Diverse Preference Optimization (DivPO), a new technique designed to improve response diversity while maintaining high quality. Unlike traditional optimization methods that prioritize the highest-rewarded response, DivPO selects preference pairs based on both quality and diversity. This ensures that the model generates outputs that are not only aligned with human preferences but also varied, making them more effective in creative and data-driven applications.

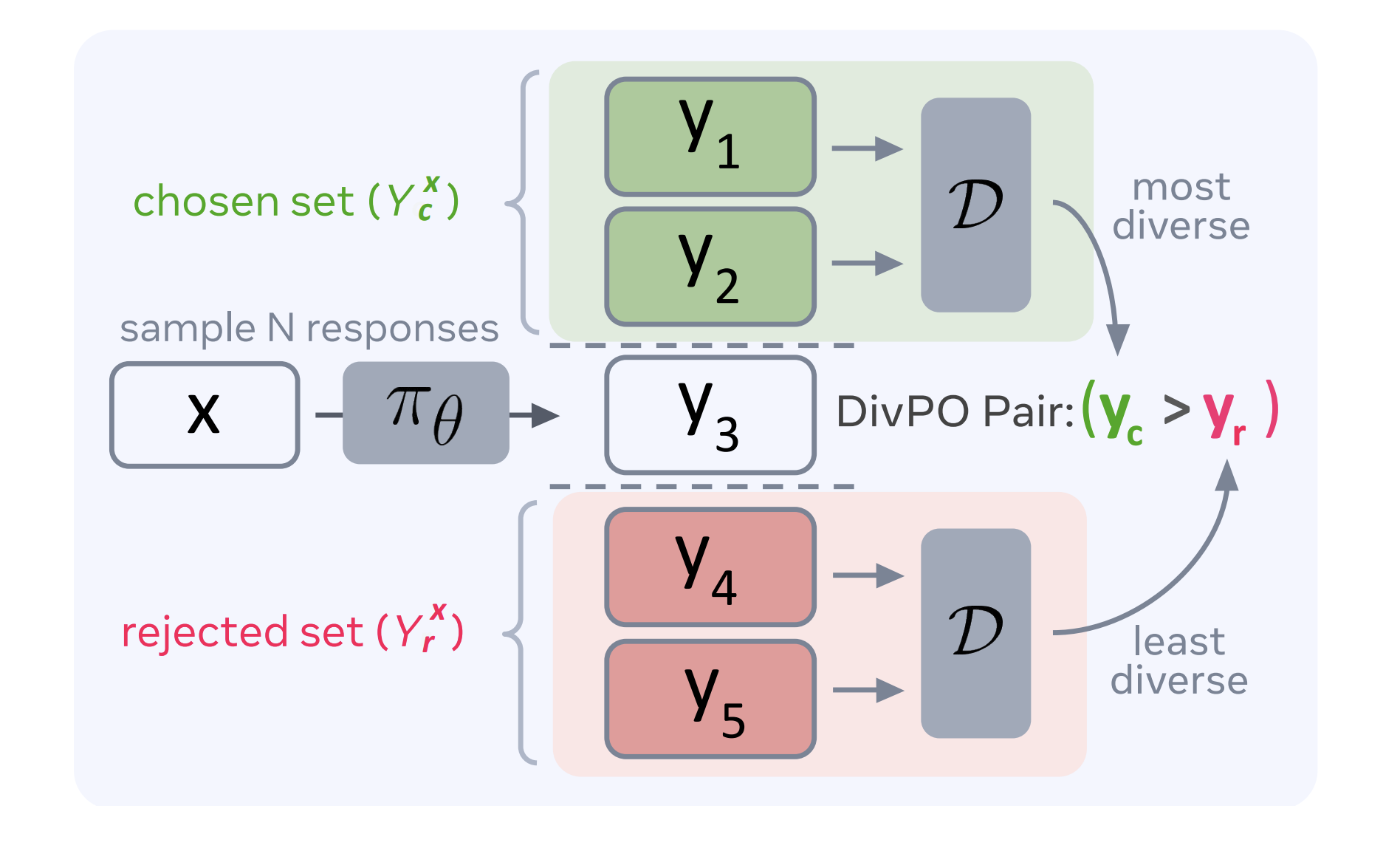

DivPO works by sampling multiple responses for a given prompt and scoring them with a reward model. Instead of choosing the single highest-rewarded response, it selects the most diverse response that meets the quality threshold as the preferred output. At the same time, the least diverse response that does not meet the quality threshold is chosen as the rejected output. This contrastive optimization strategy allows DivPO to learn a wider distribution of responses while ensuring that each output retains a high quality standard. The procedure supports several diversity criteria, including model probability, word frequency, and an LLM-based diversity judgment, to systematically assess how distinctive each response is.
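The pair selection described above can be sketched roughly as follows. This is a simplified illustration, not the authors' implementation: the `Candidate` class, the `reward_threshold` parameter, and the particular word-frequency scoring are assumptions made for the example, and rewards are supplied by hand instead of coming from a real reward model.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    reward: float  # score from a reward model (hand-written here)


def word_frequency_diversity(candidates):
    """Score each candidate by how rare its words are within the pool.

    Higher score means more diverse. This is one hypothetical way to
    realize a word-frequency criterion.
    """
    counts = Counter(w for c in candidates for w in set(c.text.lower().split()))
    scores = []
    for c in candidates:
        words = set(c.text.lower().split())
        mean_freq = sum(counts[w] for w in words) / max(len(words), 1)
        scores.append(-mean_freq)  # rarer words -> higher diversity
    return scores


def select_divpo_pair(candidates, reward_threshold):
    """Return (chosen, rejected), or None if no valid pair exists."""
    scored = list(zip(candidates, word_frequency_diversity(candidates)))
    above = [(c, d) for c, d in scored if c.reward >= reward_threshold]
    below = [(c, d) for c, d in scored if c.reward < reward_threshold]
    if not above or not below:
        return None
    # Chosen: most diverse response among those meeting the quality bar.
    chosen = max(above, key=lambda cd: cd[1])[0]
    # Rejected: least diverse response among those failing the quality bar.
    rejected = min(below, key=lambda cd: cd[1])[0]
    return chosen, rejected


pool = [
    Candidate("the king ruled the land", 0.9),
    Candidate("a robot painted the sunrise", 0.8),
    Candidate("the king ruled the land wisely", 0.3),
    Candidate("the king ruled", 0.2),
]
chosen, rejected = select_divpo_pair(pool, reward_threshold=0.5)
print(chosen.text)    # a robot painted the sunrise
print(rejected.text)  # the king ruled
```

Note that the highest-reward candidate is not the one chosen here: among the responses that clear the quality bar, the one with the rarest wording wins, which is the key difference from picking the top-reward response.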

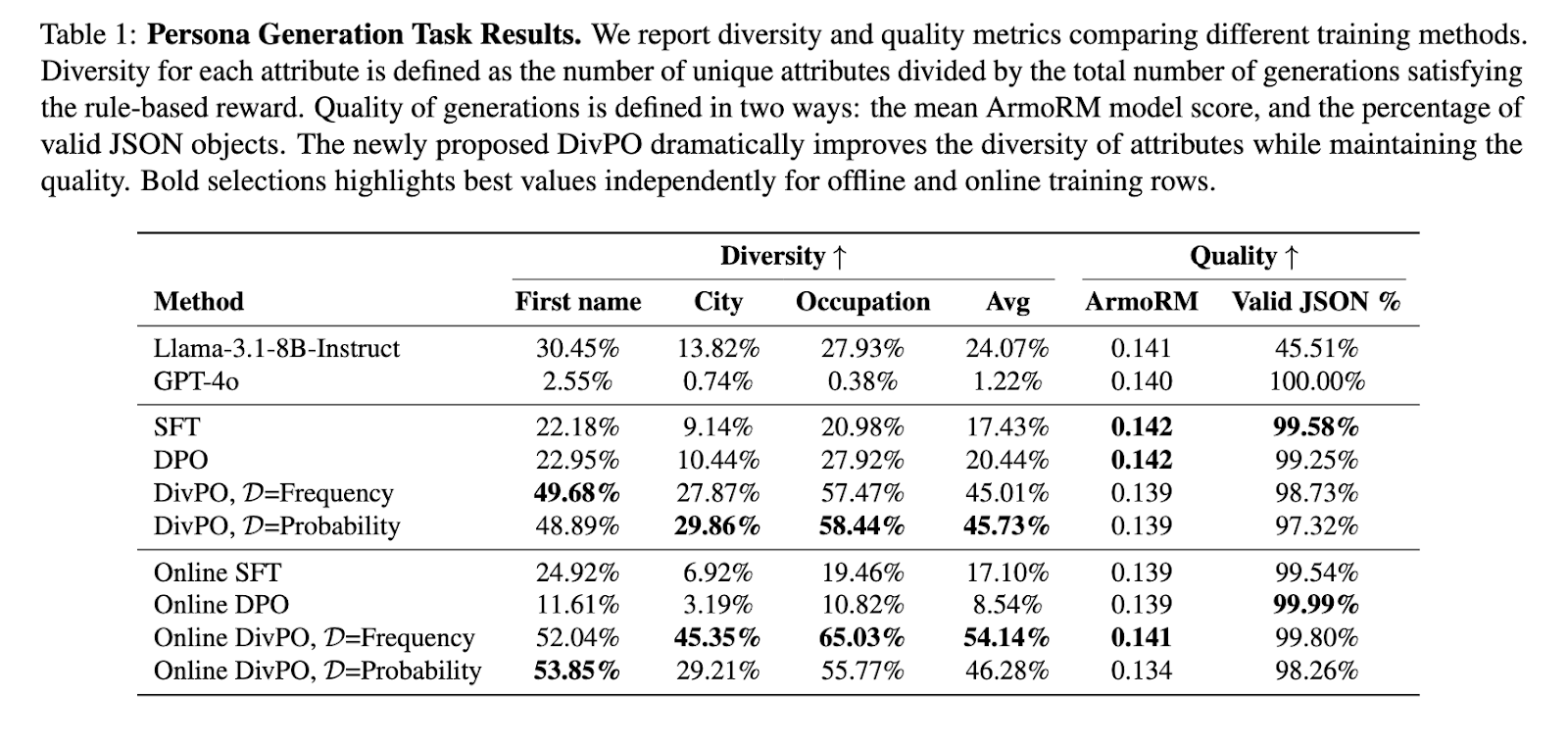

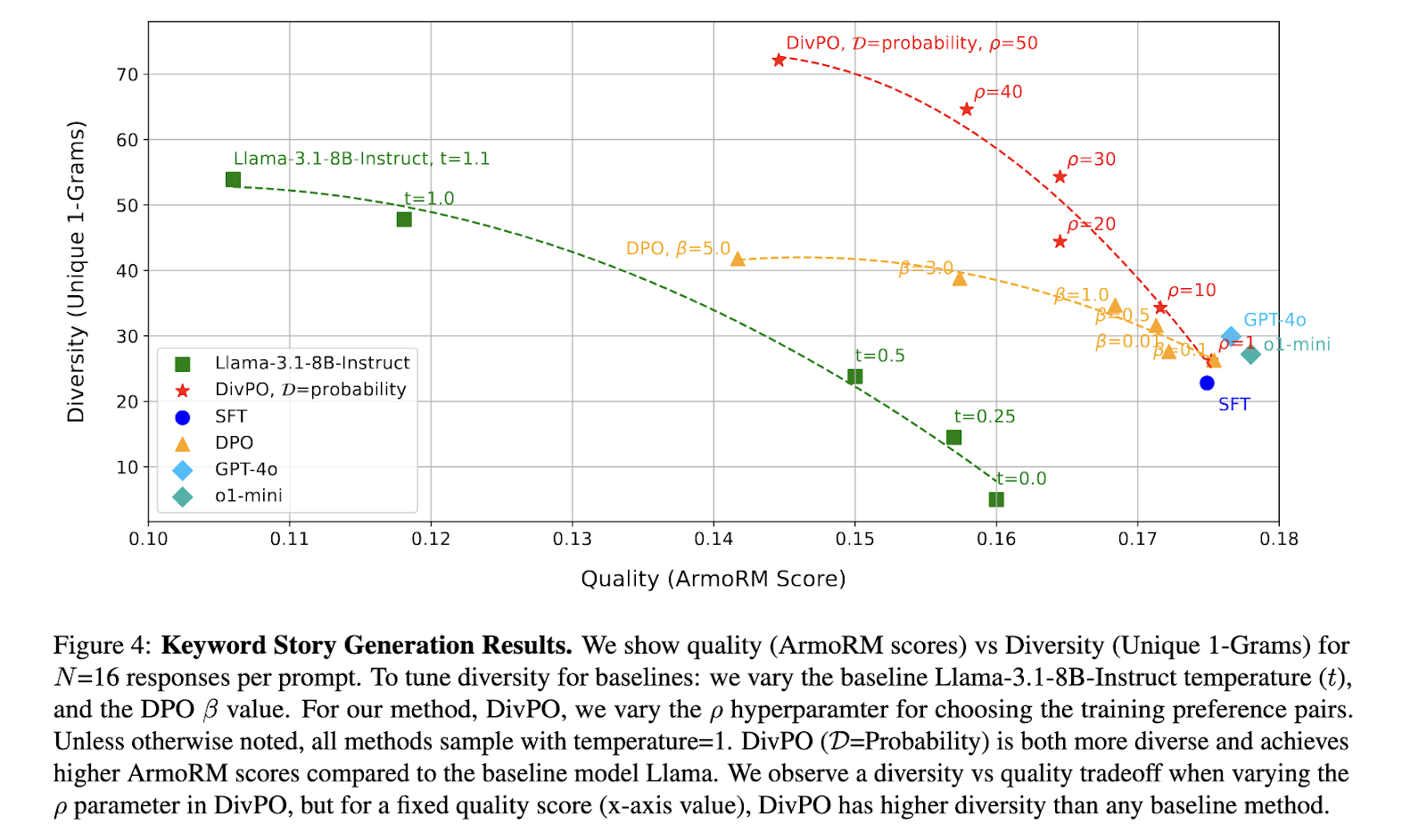

Extensive experiments were performed to validate the effectiveness of DivPO, focusing on structured persona generation and open-ended creative writing tasks. The results demonstrated that DivPO significantly increased diversity without sacrificing quality. Compared to standard preference optimization methods, DivPO led to a 45.6% increase in persona attribute diversity and a 74.6% increase in story diversity. The experiments also showed that DivPO prevents models from disproportionately generating a small subset of responses, ensuring a more even distribution of generated attributes. A key observation was that models trained with DivPO consistently outperformed baseline models in diversity evaluations while maintaining high quality, as assessed by the ArmoRM reward model.

Further analysis of persona generation revealed that traditional fine-tuned models, such as Llama-3.1-8B-Instruct, failed to produce varied persona attributes and often repeated a limited set of names. DivPO addressed this problem by expanding the range of generated attributes, which led to a more balanced and representative output distribution. The structured persona generation task demonstrated that online DivPO with the word frequency criterion improved diversity by 30.07% compared to the baseline model while maintaining a comparable level of response quality. Similarly, the keyword-based creative writing task showed a significant improvement, with DivPO achieving a 13.6% increase in diversity and a 39.6% increase in quality compared to the standard preference optimization models.
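As a rough illustration of what "repeating a limited set of names" looks like numerically, the sketch below computes two simple diversity measures over a list of generated attribute values. These metrics (`unique_fraction` and `normalized_entropy`) and the sample names are assumptions chosen for illustration and are not necessarily the measures used in the experiments.

```python
import math
from collections import Counter


def unique_fraction(values):
    """Share of generated values that are distinct (1.0 = all different)."""
    return len(set(values)) / len(values) if values else 0.0


def normalized_entropy(values):
    """Shannon entropy of the value distribution, scaled to [0, 1].

    1.0 means the observed values are used equally often; values near 0
    mean a few values dominate. Returns 0.0 for fewer than two distinct values.
    """
    counts = Counter(values)
    if len(counts) <= 1:
        return 0.0
    n = len(values)
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
    return entropy / math.log(len(counts))


# Hypothetical outputs from a collapsed model and a more diverse one.
collapsed = ["Emily"] * 8 + ["Liam"] * 2
varied = ["Emily", "Liam", "Aiko", "Omar", "Sofia",
          "Kwame", "Priya", "Mateo", "Ingrid", "Tariq"]

for label, names in [("collapsed", collapsed), ("varied", varied)]:
    print(label, unique_fraction(names), round(normalized_entropy(names), 3))
```

The collapsed sample scores low on both measures, while the varied sample scores at or near the maximum, which matches the intuition of a more even distribution of generated attributes.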

These findings confirm that preference optimization methods inherently reduce diversity, which poses a challenge for language models designed for open-ended tasks. DivPO effectively mitigates this problem by incorporating diversity-aware selection criteria, enabling language models to maintain high-quality responses without limiting variation. By balancing diversity with alignment, DivPO improves the adaptability and usability of LLMs across multiple domains, ensuring that they remain useful for creative, analytical, and synthetic data generation applications. The introduction of DivPO marks significant progress in preference optimization, offering a practical solution to the long-standing problem of response collapse in language models.

Check out the paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields such as biomaterials and biomedical science. With a strong background in materials science, he explores new advancements and creates opportunities to contribute.
