Large language models (LLMs) face significant challenges in optimizing their post-training methods, particularly in balancing supervised fine-tuning (SFT) and reinforcement learning (RL) approaches. While SFT uses direct instruction-response pairs and RL methods such as RLHF use preference-based learning, the optimal allocation of limited training resources between these approaches remains unclear. Recent studies have shown that models can achieve task alignment and improved reasoning capabilities without extensive SFT, which challenges traditional sequential post-training pipelines. Moreover, the substantial cost of collecting and annotating human data, compared to compute costs, creates a need to understand the effectiveness of different training methods under fixed data-annotation budgets.

Existing research has explored various trade-offs in language model training under fixed budgets, including comparisons between pretraining and fine-tuning, and between fine-tuning and model distillation. Studies have examined the data and compute costs of SFT and RL methods in isolation, along with cost-efficiency considerations in generating human and synthetic data. While some studies show the effects of high-quality preference data on RL methods such as Direct Preference Optimization (DPO) and PPO, others focus on the relationship between SFT and RL methods in terms of model forgetfulness, generalization, and alignment. However, these studies have failed to address optimal resource allocation between SFT and RL-based approaches under strict data-annotation constraints.

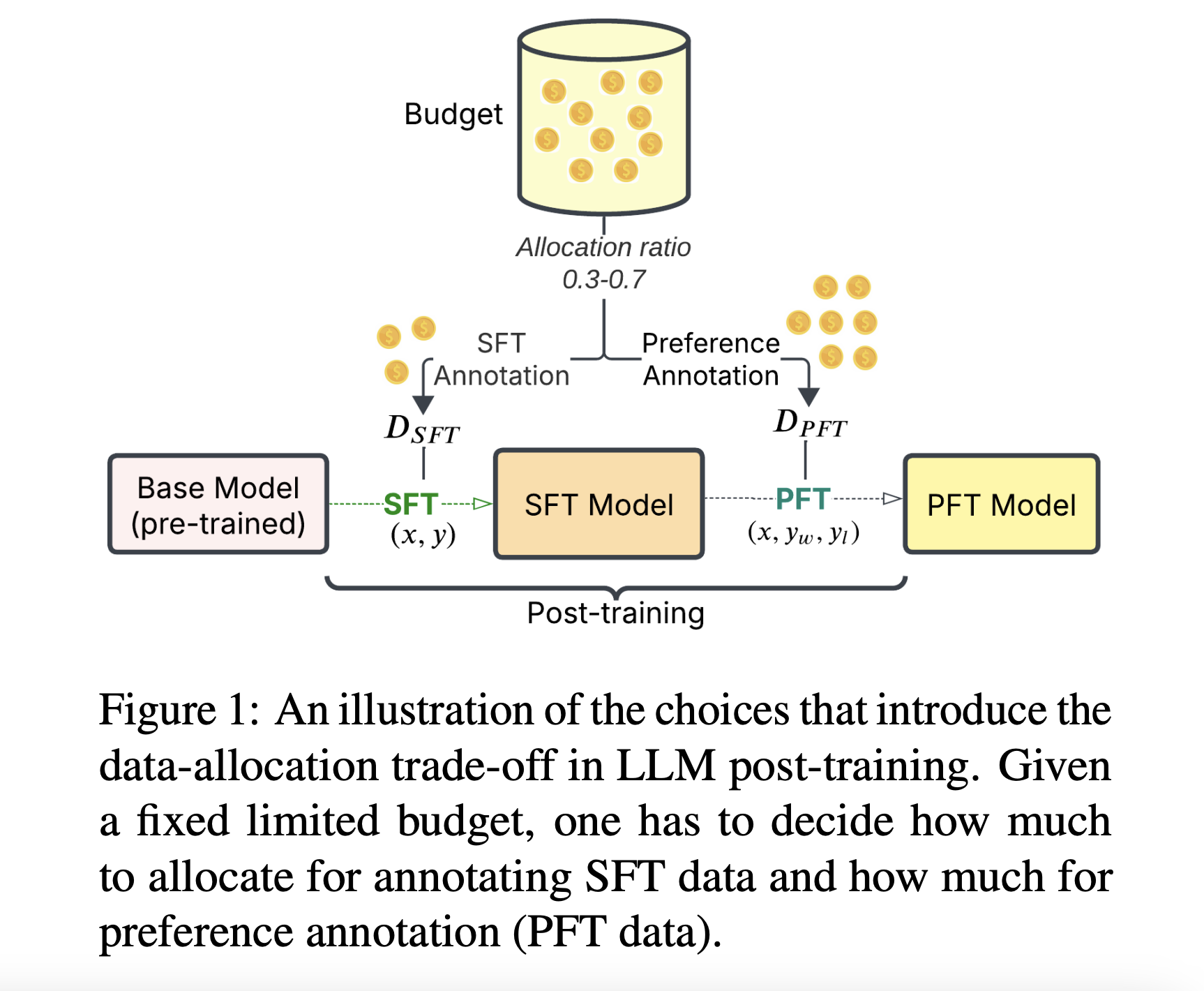

Researchers from the Georgia Institute of Technology have proposed a comprehensive study that examines the optimal allocation of training data budgets between SFT and Preference Finetuning (PFT) in LLMs. The study investigates this relationship across four different tasks, multiple model sizes, and various data annotation costs. It addresses the “cold start problem” in mathematical tasks, where skipping SFT leads to suboptimal performance because of a distribution shift when DPO is applied directly to the base model. Their findings suggest that although larger data budgets benefit from combining both methods, allocating even a small portion of the budget to SFT can significantly improve performance on analytical tasks.
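To make the role of the reference model concrete, here is a minimal sketch of the standard per-pair DPO objective, written in plain Python from sequence log-probabilities. It is not code from the study; the function name `dpo_loss` and the default `beta=0.1` are illustrative assumptions. Every term is measured relative to the reference model, which is one way to see why the model DPO starts from matters.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair (illustrative sketch).

    Inputs are summed log-probabilities of the chosen and rejected responses
    under the trainable policy and the frozen reference model.
    `beta` is a hypothetical default, not a value taken from the study.
    """
    # Implicit rewards: how much more the policy favors each response
    # than the reference model does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)

    # loss = -log(sigmoid(logits)), computed in a numerically stable way.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# When the policy equals the reference, the loss is log(2) for any pair.
start = dpo_loss(-10.0, -12.0, -10.0, -12.0)
# Raising the chosen response's log-probability lowers the loss.
improved = dpo_loss(-8.0, -12.0, -10.0, -12.0)
```

In this sketch, an SFT stage would change the reference log-probabilities themselves, so the same preference pairs are scored against a starting point that already resembles the target responses.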

The study evaluates the cost-effectiveness and optimal resource allocation between SFT and PFT in post-training LLMs under 10 billion parameters. The methodology measures data budgets either in training examples or in monetary annotation costs, assuming equal labor costs for both methods and the availability of training prompts. The experimental setup starts with no task-specific labeled data, using open-source datasets or synthetically curated data for each target task. To keep the focus on task-specific improvements, general-purpose conversational datasets commonly used in PFT, such as UltraFeedback and Chatbot Arena preferences, are excluded. This controlled approach enables precise measurement of the performance improvements that result from targeted data annotation.
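The two ways of measuring a data budget described above can be sketched as follows. This is an illustrative helper, not the study's code: the names `BudgetSplit`, `split_by_examples`, and `split_by_cost`, and the per-example prices in the usage lines, are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BudgetSplit:
    sft_examples: int
    pft_examples: int


def _check_fraction(sft_fraction: float) -> None:
    if not 0.0 <= sft_fraction <= 1.0:
        raise ValueError("sft_fraction must be between 0 and 1")


def split_by_examples(total_examples: int, sft_fraction: float) -> BudgetSplit:
    """Split a budget counted in training examples."""
    _check_fraction(sft_fraction)
    sft = round(total_examples * sft_fraction)
    return BudgetSplit(sft, total_examples - sft)


def split_by_cost(total_cost: float, sft_fraction: float,
                  sft_cost_per_example: float,
                  pft_cost_per_example: float) -> BudgetSplit:
    """Split a monetary budget, then convert each share into whole examples."""
    _check_fraction(sft_fraction)
    sft_budget = total_cost * sft_fraction
    pft_budget = total_cost - sft_budget
    return BudgetSplit(int(sft_budget // sft_cost_per_example),
                       int(pft_budget // pft_cost_per_example))


# Hypothetical usage: 10,000 examples with 10% for SFT, and a 1,000-unit
# monetary budget with made-up per-example prices.
by_count = split_by_examples(10_000, 0.10)
by_money = split_by_cost(1_000.0, 0.25, 1.0, 0.5)
```

When both annotation types cost the same per example, as the study assumes, the two functions give the same split up to rounding.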

The results show that the allocation of the training budget between SFT and PFT is crucial, with properly balanced datasets outperforming suboptimally allocated datasets that are 2-5 times larger. For tasks such as Summarization, Helpfulness, and Grade School Math, using 5K examples with a 25% SFT allocation matches the performance of 20K examples with a 75% SFT allocation. The study finds that pure SFT excels in low-data scenarios, while larger data budgets benefit from higher proportions of preference data. In addition, direct preference finetuning on base models shows limited success on mathematical tasks, and allocating even a small portion of the budget to SFT significantly improves performance by better aligning the reference model's response style.
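As a quick worked check of the two configurations quoted above, the following sketch converts each budget and SFT share into example counts. The helper name `example_counts` is an assumption; the numbers are the ones stated in the text.

```python
def example_counts(total: int, sft_share: float) -> tuple[int, int]:
    """Return (SFT examples, preference examples) for a budget and SFT share."""
    sft = round(total * sft_share)
    return sft, total - sft


small = example_counts(5_000, 0.25)   # balanced, smaller budget
large = example_counts(20_000, 0.75)  # SFT-heavy, larger budget
size_ratio = 20_000 / 5_000           # how much larger the second budget is

print(small, large, size_ratio)  # (1250, 3750) (15000, 5000) 4.0
```

The smaller configuration uses a quarter of the total annotations, which sits inside the 2-5x range reported for well-balanced versus suboptimally allocated datasets.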

In conclusion, this paper provides crucial insights into optimizing LLM post-training under resource constraints, particularly regarding the interplay between SFT and PFT. The study identifies a significant “cold start problem” when applying PFT directly to base models, which can be effectively mitigated by allocating even 10% of the budget to initial SFT. However, the research acknowledges limitations, including its use of offline methods such as DPO and KTO for the RL implementation, and potential bias from using GPT-4 for synthetic data generation and evaluation. In addition, model size is limited to 10 billion parameters, because running thousands of finetuning runs with larger models, such as those with 70B parameters, would be extremely compute-intensive.

Sajjad Ansari is a final-year undergraduate at IIT Kharagpur. As a tech enthusiast, he covers the practical applications of AI, with a focus on understanding the impact of AI technologies and their real-world implications. He aims to explain complex AI concepts in a clear and accessible way.