In this tutorial, we implement a bilingual chat assistant powered by Arcee's Meraj-Mini model, which runs smoothly on Google Colab with a T4 GPU. The tutorial shows what open-source language models can do while providing practical, hands-on experience of deploying an advanced AI solution within the limits of free cloud resources. We use a powerful stack of tools, including:

- Arcee's Meraj-Mini model

- Transformers library for model loading and tokenization

- Accelerate and bitsandbytes for efficient quantization

- PyTorch for deep learning computations

- Gradio to create an interactive web interface

    # Check that GPU acceleration is enabled
    !nvidia-smi --query-gpu=name,memory.total --format=csv

    # Install dependencies
    !pip install -qU transformers accelerate bitsandbytes
    !pip install -q gradio

First, we confirm that GPU acceleration is enabled by querying the GPU's name and total memory with the `nvidia-smi` command. We then install and upgrade the key Python libraries (Transformers, Accelerate, bitsandbytes, and Gradio) needed to run the model and build the interactive application.
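The CSV that `nvidia-smi` prints can also be checked programmatically before loading the model. The following is a minimal sketch rather than part of the original notebook: `parse_gpu_csv` is a hypothetical helper, and the sample string only imitates the layout that `--format=csv` typically produces on a T4 runtime.

```python
import csv
import io

def parse_gpu_csv(text):
    """Parse `nvidia-smi --query-gpu=name,memory.total --format=csv` output.

    Returns a list of (gpu_name, total_memory_mib) tuples.
    """
    rows = list(csv.reader(io.StringIO(text.strip()), skipinitialspace=True))
    gpus = []
    for row in rows[1:]:  # skip the header row
        if len(row) < 2:
            continue
        name, memory = row[0].strip(), row[1].strip()
        # Memory is reported like "15360 MiB"; keep only the number.
        gpus.append((name, int(memory.split()[0])))
    return gpus

# Illustrative sample (an assumption, not captured from a real session).
sample = "name, memory.total [MiB]\nTesla T4, 15360 MiB\n"
gpus = parse_gpu_csv(sample)
print(gpus)
```

In a notebook, the real output could be captured with `subprocess.run([...], capture_output=True, text=True).stdout` and passed to the same function. An empty result would suggest that no GPU runtime is attached.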

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline, BitsAndBytesConfig

    # 4-bit NF4 quantization with float16 compute and double quantization
    quant_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.float16,
        bnb_4bit_use_double_quant=True
    )

    # Load the quantized model and let Accelerate place it on the available devices
    model = AutoModelForCausalLM.from_pretrained(
        "arcee-ai/Meraj-Mini",
        quantization_config=quant_config,
        device_map="auto"
    )

    tokenizer = AutoTokenizer.from_pretrained("arcee-ai/Meraj-Mini")

Next, we configure 4-bit quantization with `BitsAndBytesConfig` so the model loads efficiently, then load the "arcee-ai/Meraj-Mini" causal language model and its tokenizer from Hugging Face. Setting `device_map="auto"` places the weights on the available devices automatically.
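To see why 4-bit loading matters on a free GPU, a back-of-the-envelope estimate of weight memory can help. This is only a sketch under stated assumptions: the parameter count below is an illustrative round number, not a figure from this tutorial, and the estimate ignores quantization metadata, any layers left unquantized, activations, and the KV cache.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory needed for the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 1024**3

# ASSUMPTION: roughly 7 billion parameters, used only for illustration.
# Check the model card for the real figure.
ASSUMED_PARAMS = 7_000_000_000

fp16_gib = weight_memory_gib(ASSUMED_PARAMS, 16)
nf4_gib = weight_memory_gib(ASSUMED_PARAMS, 4)

print(f"fp16 weights:  ~{fp16_gib:.1f} GiB")
print(f"4-bit weights: ~{nf4_gib:.1f} GiB")
```

Under that assumption, half-precision weights alone would come close to filling a GPU of the T4's class, while 4-bit weights take about a quarter of the space and leave room for generation.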

    chat_pipeline = pipeline(
        "text-generation",
        model=model,
        tokenizer=tokenizer,
        max_new_tokens=512,
        temperature=0.7,
        top_p=0.9,
        repetition_penalty=1.1,
        do_sample=True
    )

Here we create a text generation pipeline tailored to chat interactions using Hugging Face's `pipeline` function. It sets the maximum number of new tokens, the temperature, `top_p`, and the repetition penalty to balance diversity and coherence during text generation.
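To build intuition for what `temperature` and `top_p` do, here is a small pure-Python sketch on made-up logits. It illustrates the general idea of temperature scaling and nucleus (top-p) filtering, not the Transformers implementation. The helper names and the toy scores are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities, dividing by temperature first."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of highest-probability tokens whose total
    probability reaches top_p, then renormalize over that set."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}

toy_logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for four tokens
sharp = softmax_with_temperature(toy_logits, 0.7)  # lower temperature
flat = softmax_with_temperature(toy_logits, 1.5)   # higher temperature
nucleus = top_p_filter(sharp, 0.9)

print("T=0.7:", [round(p, 3) for p in sharp])
print("T=1.5:", [round(p, 3) for p in flat])
print("tokens kept by top_p=0.9:", sorted(nucleus))
```

A lower temperature concentrates probability on the top token, and top-p then discards the unlikely tail before sampling. This is why the combination tends to give varied but coherent text.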

    def format_chat(messages):
        # Build a ChatML-style prompt from role/content messages
        prompt = ""
        for msg in messages:
            prompt += f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n"
        # Open the assistant turn so the model continues from here
        prompt += "<|im_start|>assistant\n"
        return prompt

    def generate_response(user_input, history=None):
        # Avoid a mutable default argument, which would be shared across calls
        if history is None:
            history = []
        history.append({"role": "user", "content": user_input})
        formatted_prompt = format_chat(history)
        output = chat_pipeline(formatted_prompt)[0]['generated_text']
        # Keep only the text after the last assistant marker, up to the end token
        assistant_response = output.split("<|im_start|>assistant\n")[-1].split("<|im_end|>")[0]
        history.append({"role": "assistant", "content": assistant_response})
        return assistant_response, history

We define two functions to support the conversation interface. The first formats the chat history into a structured prompt using ChatML-style delimiters (`<|im_start|>` and `<|im_end|>`), while the second appends the new user message, generates a reply with the text generation pipeline, and updates the conversation history accordingly.
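The prompt format and the reply extraction can be checked without loading the model. The sketch below is a dry run under stated assumptions: `fake_pipeline` is a hypothetical stand-in that mimics the shape of a text-generation pipeline result (the prompt followed by the completion), and the messages and canned reply are made-up sample data.

```python
def format_chat(messages):
    """Render role/content messages as a ChatML-style prompt."""
    prompt = ""
    for msg in messages:
        prompt += f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n"
    prompt += "<|im_start|>assistant\n"
    return prompt

def extract_reply(generated_text):
    """Take the text after the last assistant marker, up to the end token."""
    tail = generated_text.split("<|im_start|>assistant\n")[-1]
    return tail.split("<|im_end|>")[0]

CANNED_REPLY = "Hello! How can I help you today?"  # made-up sample reply

def fake_pipeline(prompt):
    # Hypothetical stub: returns the prompt plus a canned completion,
    # in the same list-of-dicts shape the real pipeline returns.
    return [{"generated_text": prompt + CANNED_REPLY + "<|im_end|>"}]

history = [
    {"role": "system", "content": "You are a helpful bilingual assistant."},
    {"role": "user", "content": "مرحبا"},
]
prompt = format_chat(history)
reply = extract_reply(fake_pipeline(prompt)[0]["generated_text"])

print(prompt)
print(reply)
```

Because the generated text includes the prompt, splitting on the last assistant marker is what isolates the newest reply from the earlier turns.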

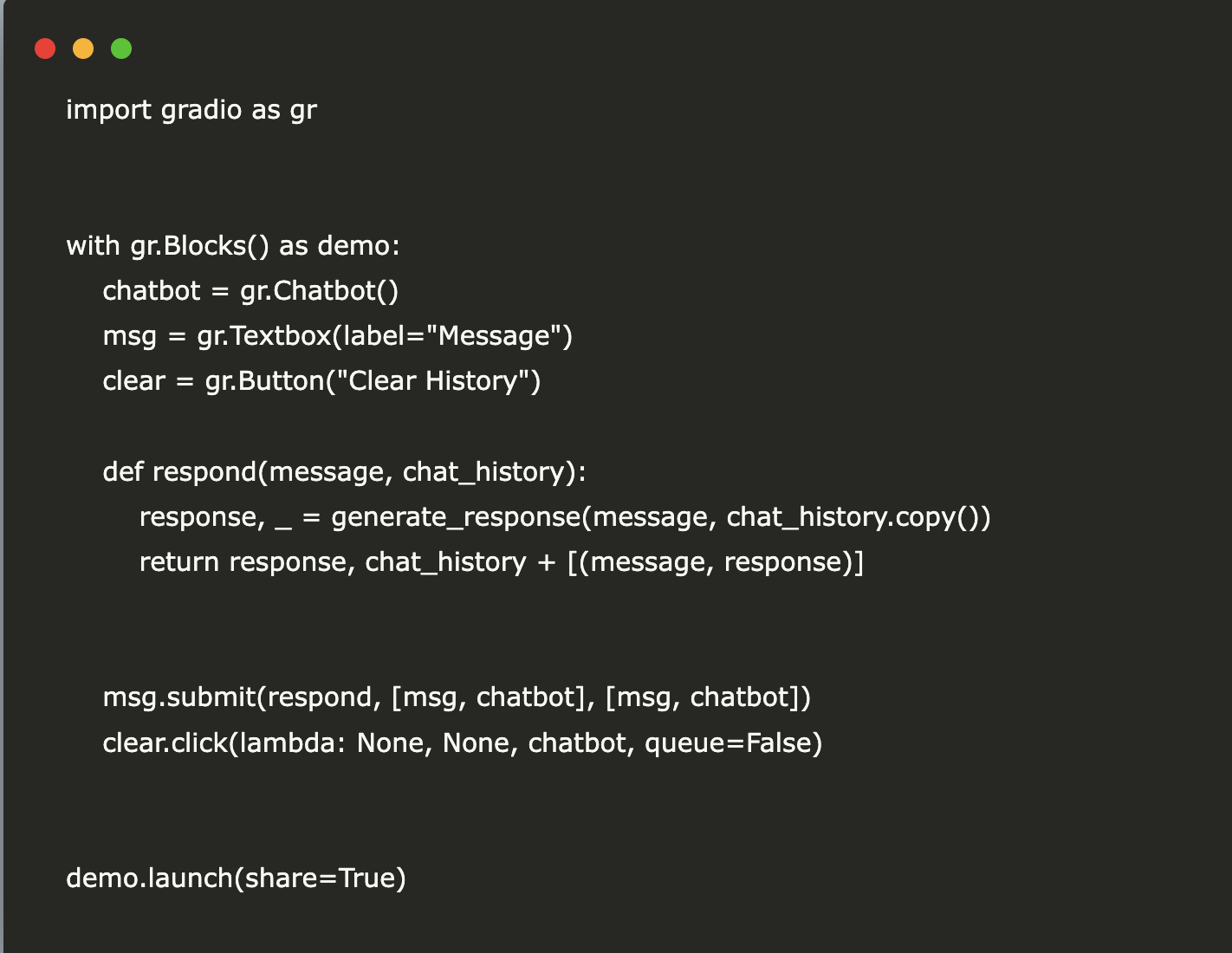

    import gradio as gr

    with gr.Blocks() as demo:
        chatbot = gr.Chatbot()
        msg = gr.Textbox(label="Message")
        clear = gr.Button("Clear History")

        def respond(message, chat_history):
            # Convert the chatbot's (user, assistant) pairs into role/content dicts,
            # which is the format generate_response expects
            history = []
            for user_msg, bot_msg in chat_history:
                history.append({"role": "user", "content": user_msg})
                history.append({"role": "assistant", "content": bot_msg})
            response, _ = generate_response(message, history)
            # Return "" to clear the textbox, plus the updated chat history
            return "", chat_history + [(message, response)]

        msg.submit(respond, [msg, chatbot], [msg, chatbot])
        clear.click(lambda: None, None, chatbot, queue=False)

    demo.launch(share=True)

Finally, we build a web-based chatbot interface using Gradio. It creates UI elements for the chat history, the message input, and a Clear History button, and defines a `respond` function that calls the text generation pipeline to update the conversation. The demo is then launched with sharing enabled for public access.
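The callback logic can be exercised without launching the UI. The following is a sketch under assumptions: it treats the chatbot history as a list of `(user, assistant)` pairs, as the `(message, response)` tuples above suggest, while Gradio versions differ in how `gr.Chatbot` stores history. `pairs_to_messages`, `make_respond`, and `echo_generate` are hypothetical names, with `echo_generate` standing in for `generate_response`.

```python
def pairs_to_messages(chat_history):
    """Convert [(user, assistant), ...] pairs into role/content dicts."""
    messages = []
    for user_text, assistant_text in chat_history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    return messages

def make_respond(generate):
    """Build a Gradio-style callback around a generate(message, history) function."""
    def respond(message, chat_history):
        response, _ = generate(message, pairs_to_messages(chat_history))
        # First value clears the textbox, second is the new chatbot state.
        return "", chat_history + [(message, response)]
    return respond

def echo_generate(user_input, history):
    # Hypothetical stub: reports the turn number instead of calling a model.
    reply = f"(turn {len(history) // 2 + 1}) you said: {user_input}"
    return reply, history

respond = make_respond(echo_generate)
box, chat = respond("hello", [])
box, chat = respond("again", chat)
print(chat)
```

Separating the conversion from the UI wiring makes it easy to confirm that earlier turns reach the model in the role/content format it expects.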

Here is the Colab notebook.

Asif Razzaq is the CEO of Marktechpost Media Inc. His latest endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among readers.
