LLMs are widely used for conversational AI, content generation, and enterprise automation. However, balancing performance with computational efficiency remains a key challenge in this field. Many state-of-the-art models require extensive hardware resources, making them impractical for smaller enterprises. The demand for cost-effective AI solutions has led researchers to develop models that deliver high performance with lower computational requirements.

Training and deploying AI models present hurdles for researchers and businesses. Large-scale models require substantial computational power, making them costly to maintain. AI models must also handle multilingual tasks, ensure high instruction-following accuracy, and support enterprise applications such as data analysis, automation, and coding. Current market solutions, while effective, often demand infrastructure beyond the reach of many enterprises. The challenge is to optimize AI models for processing efficiency without compromising accuracy or functionality.

Several AI models currently dominate the market, including GPT-4o and DeepSeek-V3. These models excel in natural language processing and generation but require high-end hardware, sometimes needing up to 32 GPUs to operate effectively. While they provide advanced capabilities in text generation, multilingual support, and coding, their hardware dependencies limit accessibility. Some models also struggle with enterprise-level instruction-following accuracy and tool integration. Businesses need AI solutions that maintain competitive performance while minimizing infrastructure and deployment costs. This demand has driven efforts to optimize language models to run on minimal hardware.

Researchers from Cohere introduced Command A, a high-performance AI model designed specifically for enterprise applications that require maximum efficiency. Unlike conventional models that require large computational resources, Command A runs on just two GPUs while maintaining competitive performance. The model has 111 billion parameters and supports a context length of 256K tokens, making it suitable for enterprise applications that involve long-form document processing. Its ability to efficiently handle business-critical agentic and multilingual tasks sets it apart from its predecessors. The model has been optimized to provide high-quality text generation while reducing operational costs, making it a cost-effective alternative for businesses aiming to use AI for various applications.
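To put the parameter count and GPU count in perspective, here is a rough weights-only memory calculation. The article does not state the numeric precision, the GPU model, or how the weights are split, so the precisions listed and the even two-way split are assumptions for illustration. The estimate also ignores the KV cache and activations, which add to the real footprint.

```python
# Back-of-the-envelope estimate of weight memory for a 111B-parameter model.
# ASSUMPTIONS (not from the article): the precisions below and an even split
# of weights across the two GPUs. KV cache and activations are ignored.

PARAMS = 111e9   # parameter count stated in the article
NUM_GPUS = 2     # GPU count stated in the article

# Hypothetical storage precisions, in bytes per parameter.
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Total memory needed to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def per_gpu_memory_gb(params: float, bytes_per_param: float, num_gpus: int) -> float:
    """Weight memory per GPU, assuming an even split across devices."""
    return weight_memory_gb(params, bytes_per_param) / num_gpus


if __name__ == "__main__":
    for name, nbytes in BYTES_PER_PARAM.items():
        total = weight_memory_gb(PARAMS, nbytes)
        share = per_gpu_memory_gb(PARAMS, nbytes, NUM_GPUS)
        print(f"{name}: {total:.1f} GB total, {share:.2f} GB per GPU")
```

Under these assumptions, the weights alone range from 222 GB at 16-bit precision down to 55.5 GB at 4-bit precision, which shows how strongly the choice of precision affects whether a two-GPU deployment is feasible.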

The underlying technology of Command A is built around an optimized transformer architecture that includes three layers of sliding window attention, each with a window size of 4096 tokens. This mechanism improves local context modeling, allowing the model to retain important details across extended text inputs. A fourth layer uses global attention without positional embeddings, enabling unrestricted token interactions across the entire sequence. Supervised fine-tuning and preference training further refine the model’s ability to align responses with human expectations of accuracy, safety, and helpfulness. Command A also supports 23 languages, making it one of the most versatile AI models for businesses with global operations. Its chat capabilities are preconfigured for interactive behavior, enabling seamless conversational AI applications.
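The difference between the two attention types can be illustrated with a toy mask. The following is a minimal sketch and not Cohere's implementation. It assumes causal attention, a window that includes the current token, and a pattern of three sliding-window layers followed by one global layer that repeats through the network. The function names are hypothetical.

```python
from typing import List

WINDOW_SIZE = 4096    # window size stated in the article
LAYERS_PER_BLOCK = 4  # ASSUMPTION: 3 sliding-window layers + 1 global layer, repeating


def layer_kind(layer_index: int) -> str:
    """Attention type of a layer under the assumed repeating 3:1 pattern."""
    is_last_in_block = layer_index % LAYERS_PER_BLOCK == LAYERS_PER_BLOCK - 1
    return "global" if is_last_in_block else "sliding_window"


def can_attend(query_pos: int, key_pos: int, kind: str, window: int = WINDOW_SIZE) -> bool:
    """Whether the token at query_pos may attend to the token at key_pos."""
    if key_pos > query_pos:
        return False  # ASSUMPTION: causal attention, no access to future tokens
    if kind == "global":
        return True   # global layers see the entire preceding sequence
    # Sliding window: only the most recent `window` tokens, current token included.
    return query_pos - key_pos < window


def attention_mask(seq_len: int, kind: str, window: int = WINDOW_SIZE) -> List[List[int]]:
    """Dense 0/1 mask (rows are queries, columns are keys). For toy sizes only."""
    return [
        [int(can_attend(q, k, kind, window)) for k in range(seq_len)]
        for q in range(seq_len)
    ]


if __name__ == "__main__":
    print([layer_kind(i) for i in range(8)])
    for row in attention_mask(6, "sliding_window", window=3):
        print(row)
```

In a sliding-window layer the per-token attention cost is bounded by the window size rather than the full sequence length, while the global layers let information travel across the whole context. That trade-off is the usual motivation for mixing the two.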

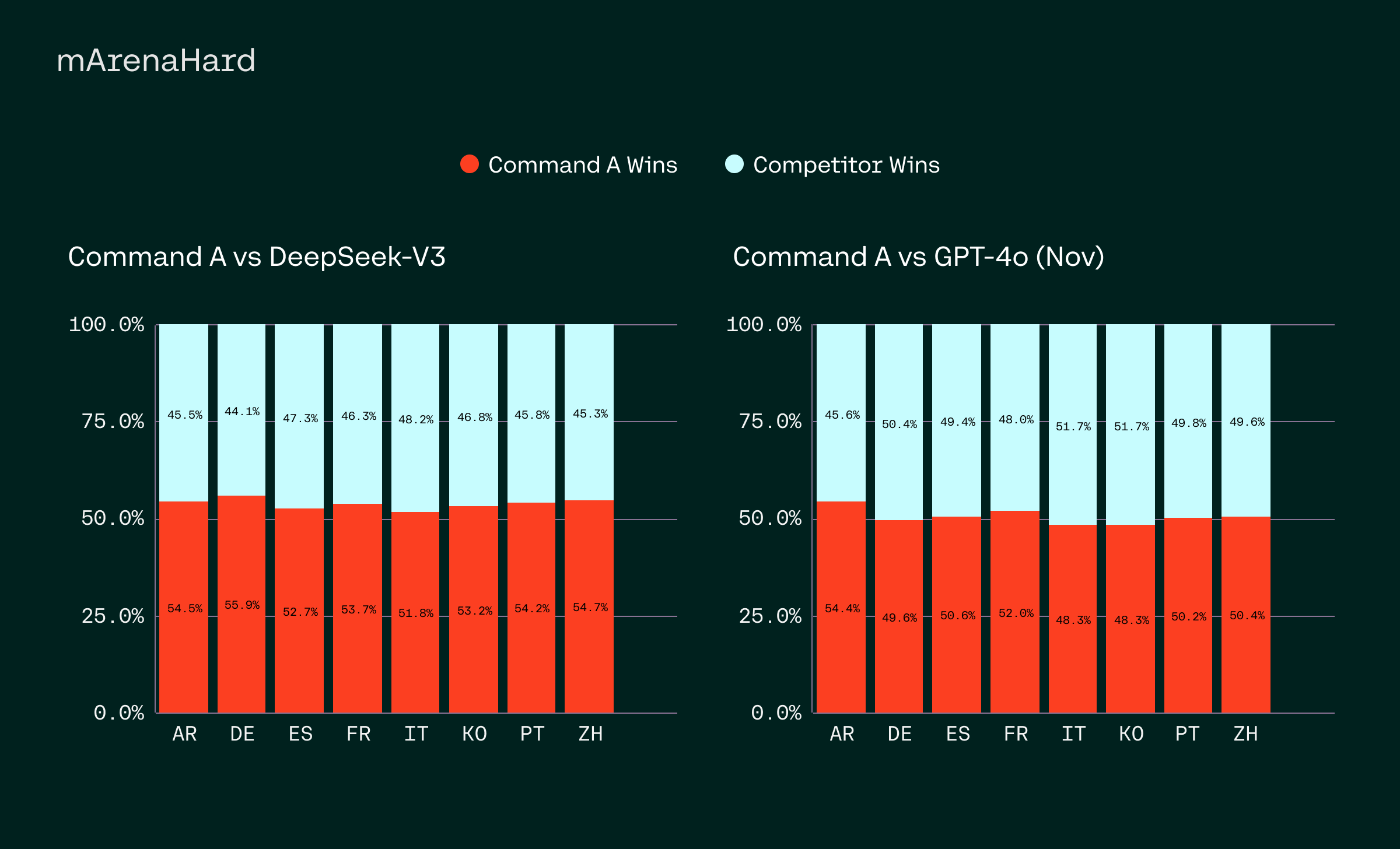

Performance evaluations indicate that Command A competes favorably with leading AI models such as GPT-4o and DeepSeek-V3 across various enterprise-focused benchmarks. The model achieves a token generation rate of 156 tokens per second, 1.75 times higher than GPT-4o and 2.4 times higher than DeepSeek-V3, making it one of the most efficient models available. In terms of cost efficiency, private deployments of Command A are up to 50% cheaper than API-based alternatives, significantly reducing the financial burden on businesses. Command A also excels in instruction-following tasks, SQL-based queries, and retrieval-augmented generation (RAG) applications. It has demonstrated high accuracy in real-world enterprise data evaluations, outperforming its competitors in multilingual business use cases.
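The reported speedups can be turned into concrete numbers with simple arithmetic. The sketch below derives the baseline rates implied by the 156 tokens-per-second figure and the two ratios. These baselines are back-calculated, not reported in the article, and the 1,000-token response length is an arbitrary illustrative choice.

```python
# Derive the baseline generation rates implied by the article's figures.
# The baselines are back-calculated from the stated ratios, not measured.

COMMAND_A_TOKENS_PER_SEC = 156.0  # reported in the article
SPEEDUP_VS_GPT4O = 1.75           # reported ratio
SPEEDUP_VS_DEEPSEEK_V3 = 2.4      # reported ratio


def implied_baseline(tokens_per_sec: float, speedup: float) -> float:
    """Rate of the slower model implied by a faster model's rate and the speedup."""
    return tokens_per_sec / speedup


def generation_time(num_tokens: int, tokens_per_sec: float) -> float:
    """Seconds needed to generate num_tokens at a constant rate."""
    return num_tokens / tokens_per_sec


if __name__ == "__main__":
    rates = {
        "Command A": COMMAND_A_TOKENS_PER_SEC,
        "GPT-4o (implied)": implied_baseline(COMMAND_A_TOKENS_PER_SEC, SPEEDUP_VS_GPT4O),
        "DeepSeek-V3 (implied)": implied_baseline(COMMAND_A_TOKENS_PER_SEC, SPEEDUP_VS_DEEPSEEK_V3),
    }
    response_tokens = 1000  # hypothetical response length
    for name, rate in rates.items():
        seconds = generation_time(response_tokens, rate)
        print(f"{name}: {rate:.1f} tokens/s, {seconds:.1f} s per {response_tokens} tokens")
```

The implied baselines come out to roughly 89 and 65 tokens per second, so a response of a given length would take about 1.75 and 2.4 times as long on those models, assuming constant generation rates.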

In a direct comparison of enterprise task performance, human evaluation results show that Command A consistently outperforms its competitors in fluency, faithfulness, and response utility. The model’s enterprise-ready capabilities include robust retrieval-augmented generation with verifiable citations, advanced agentic tool use, and high-level security measures to protect sensitive business data. Its multilingual capabilities extend beyond simple translation, demonstrating superior proficiency in responding accurately in region-specific dialects. For example, evaluations of Arabic dialects, including Egyptian, Saudi, Syrian, and Moroccan Arabic, found that Command A delivered more precise and contextually appropriate responses than leading AI models. These results underscore its strong applicability in global enterprise environments where language diversity is crucial.

Several important takeaways from the research include:

- Command A operates on only two GPUs, significantly reducing computational costs while maintaining high performance.

- With 111 billion parameters, the model is optimized for enterprise applications that require extensive text processing.

- The model supports a 256K context length, enabling it to process longer business documents more efficiently than competing models.

- Command A is trained on 23 languages, ensuring high accuracy and contextual relevance for global businesses.

- It achieves 156 tokens per second, 1.75x higher than GPT-4o and 2.4x higher than DeepSeek-V3.

- The model consistently outperforms competitors in real-world enterprise evaluations and excels in SQL, agentic, and tool-based tasks.

- Advanced RAG capabilities with verifiable citations make it well suited for enterprise information retrieval applications.

- Private deployments of Command A can be up to 50% cheaper than API-based models.

- The model includes enterprise-grade security features, ensuring safe handling of sensitive business data.

- It demonstrates high proficiency in regional dialects, making it ideal for businesses operating in linguistically diverse regions.

Check out the model on Hugging Face. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. His latest endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.