Artificial intelligence models have advanced significantly in recent years, especially in tasks that require reasoning, such as mathematics, programming, and scientific problem solving. But these advances come with challenges: computational inefficiency and a tendency to overthink. Overthinking occurs when a model engages in overly lengthy reasoning, which increases inference costs and slows response times without significant gains in accuracy. It is particularly problematic in tasks involving complex multi-step reasoning, where large models often produce long, detailed outputs. As the demand for efficient AI systems grows, tackling these inefficiencies has become a critical focus for researchers.

Inference costs pose another challenge, especially for organizations that rely on large models. The high computational cost limits availability and wider use, creating barriers for smaller research groups and developers. Moreover, the lack of open access to robust AI models and training resources amplifies these problems, inhibiting innovation and collaboration. A solution requires a balance between computational efficiency, accuracy, and availability.

Introducing the Sky-T1-32B-Flash by NovaSky Lab

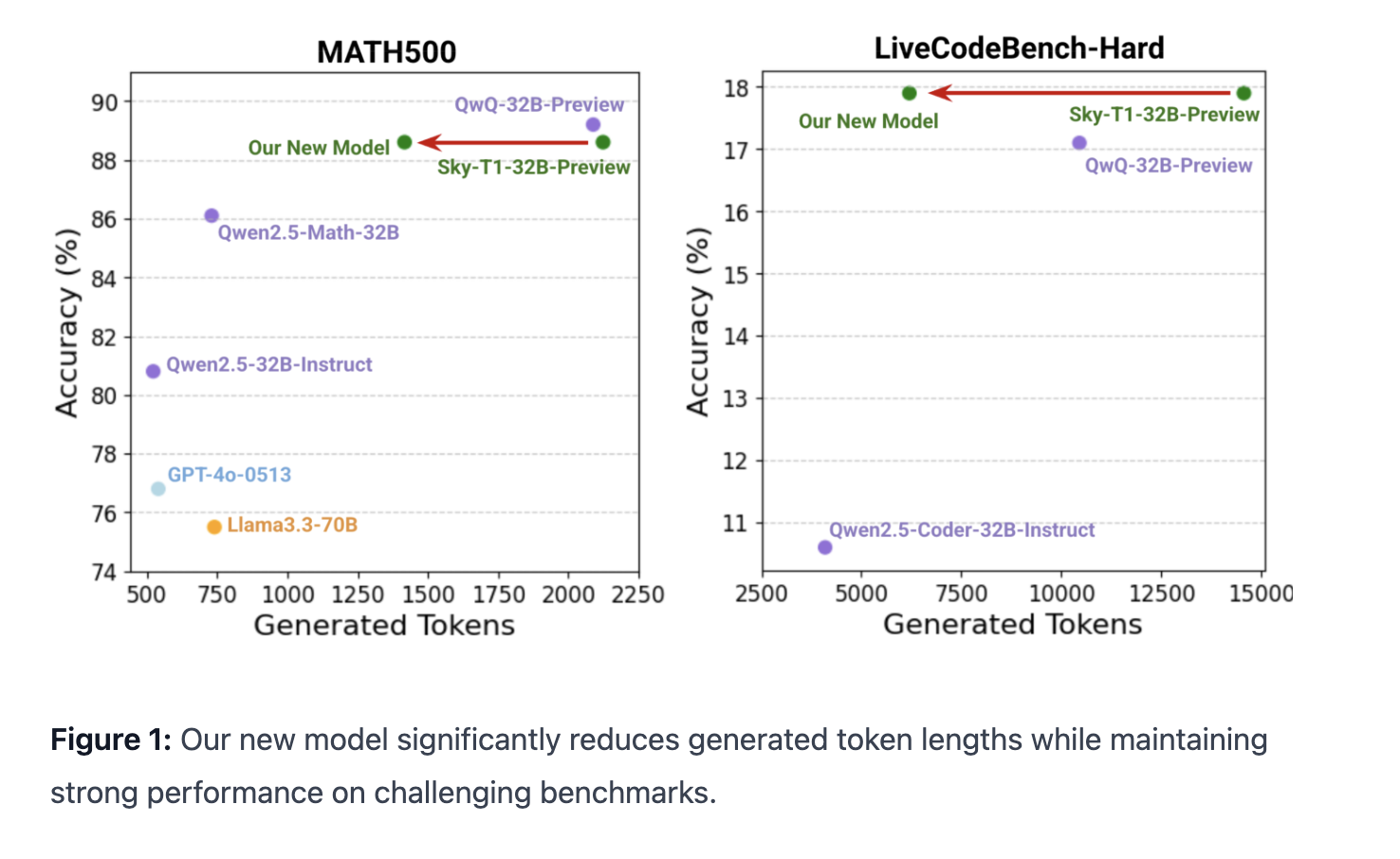

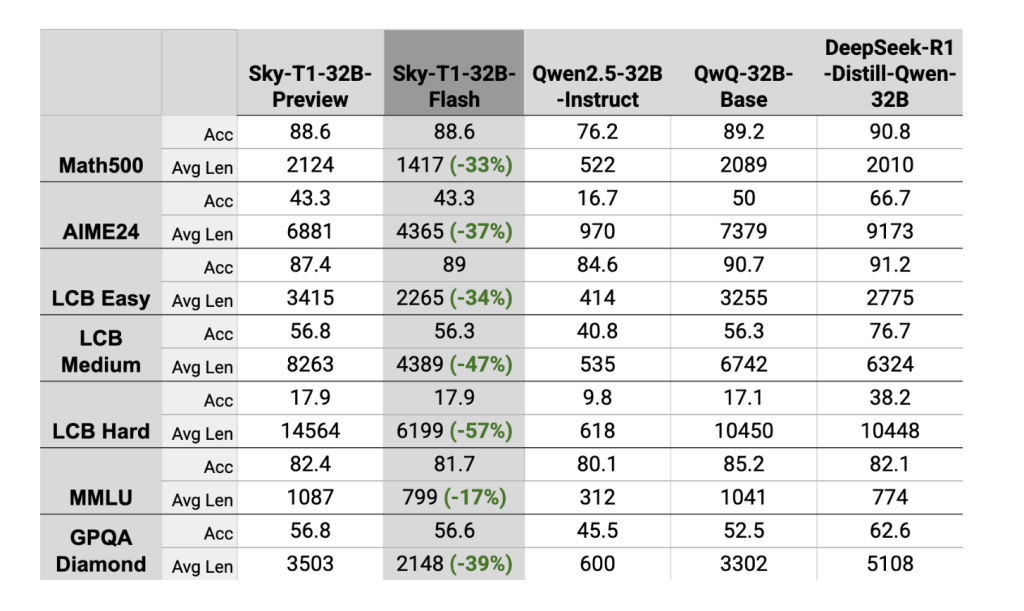

NovaSky Lab, a UC Berkeley research initiative, has introduced Sky-T1-32B-Flash, a reasoning language model designed to address these challenges. It is a 32B reasoning model, preference optimized on top of Sky-T1-32B-Preview. Its performance is on par with the o1-preview model on both math and coding tasks, while generation lengths are reduced by up to 57% compared to Sky-T1-32B-Preview. By cutting this overhead, Sky-T1-32B-Flash lowers inference costs on complex reasoning tasks while maintaining accuracy. The model works consistently across different domains, including math, coding, science, and general knowledge.

A notable feature of the Sky-T1-32B-Flash is its cost-effectiveness. Training the model costs approximately $275 using 8 NVIDIA H100 GPUs, based on Lambda Cloud pricing, making it one of the most economical large-scale models to date. In addition, NovaSky Lab has prioritized transparency by opening up the entire development pipeline. This includes data generation and preprocessing workflows, preference optimization methods, evaluation scripts, and release of model weights and datasets. These efforts allow researchers to reproduce results, experiment with improvements, and contribute to the development of the model.

The Sky-T1-32B-Flash is more than a new entry in language models; it represents a conscious effort to address inefficiencies and make advanced AI research more accessible. By reducing computational requirements and fostering collaboration, NovaSky Lab aims to push the boundaries of cost-effective AI development.

Technical innovations and benefits

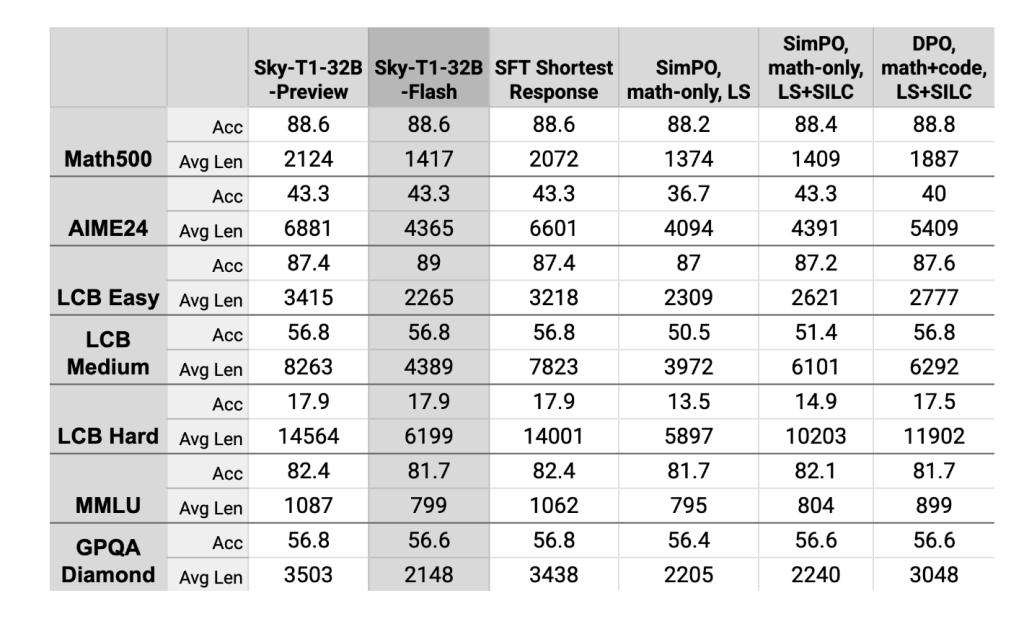

Sky-T1-32B-Flash’s ability to reduce overthinking stems from its optimized design and advanced preference optimization techniques. These methods guide the model toward concise, high-quality outputs, eliminating unnecessary computation while maintaining performance on complex tasks.
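One way preference optimization can steer a model toward shorter answers is through how the preference pairs are built. The article does not describe the pair construction, so the following is only a minimal sketch under an assumed rule: among several sampled responses to the same prompt, the shortest correct one is treated as "chosen" and the longest correct one as "rejected". The `Sample` type and `build_length_preference_pair` helper are hypothetical names for illustration, not part of the released pipeline.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Sample:
    """One sampled response to a prompt (hypothetical structure)."""
    text: str
    num_tokens: int
    is_correct: bool


def build_length_preference_pair(samples: List[Sample]) -> Optional[Dict[str, str]]:
    """Return a chosen/rejected pair that prefers the shorter correct response.

    Assumed rule, not confirmed by the article: only correct responses are
    eligible, so the preference signal is about length and not correctness.
    """
    correct = [s for s in samples if s.is_correct]
    if len(correct) < 2:
        return None  # not enough correct responses to form a pair

    chosen = min(correct, key=lambda s: s.num_tokens)
    rejected = max(correct, key=lambda s: s.num_tokens)
    if chosen.num_tokens == rejected.num_tokens:
        return None  # no length difference, so no useful preference signal

    return {"chosen": chosen.text, "rejected": rejected.text}


# Usage with made-up samples for a single prompt.
samples = [
    Sample(text="long but correct reasoning", num_tokens=900, is_correct=True),
    Sample(text="short and correct reasoning", num_tokens=300, is_correct=True),
    Sample(text="short but wrong answer", num_tokens=100, is_correct=False),
]
print(build_length_preference_pair(samples))
```

Restricting the pair to correct responses is the design choice that matters here: a pair that simply preferred the shortest response overall would reward wrong but brief answers.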

The model also benefits from efficient data generation and preprocessing workflows. These workflows ensure high-quality data sets that improve reasoning across different domains. In addition, the evaluation framework used for Sky-T1-32B-Flash provides reliable benchmarks, enabling consistent performance assessments.

One of the standout aspects of the Sky-T1-32B-Flash is its affordability. Training the model costs only about $275 on 8 NVIDIA H100 GPUs, which shows that cutting-edge research does not have to be financially restrictive. This affordability paves the way for smaller organizations and academic institutions to conduct meaningful AI research without extensive computational resources.

Results and insights

The Sky-T1-32B-Flash delivers impressive results. By reducing generation lengths, and with them inference costs, by up to 57%, it achieves significant computational efficiency without compromising performance. Model accuracy remains high across math, science, and coding tasks, striking a critical balance between efficiency and reliability.
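To put the "up to 57%" figure in concrete terms, the sketch below turns it into a rough per-response estimate. It assumes that inference cost scales linearly with the number of generated tokens, which is a simplification. The baseline token count and the price per million tokens are hypothetical values chosen only for illustration, and 57% is the upper bound quoted above, not a typical value.

```python
def tokens_after_reduction(baseline_tokens: float, reduction: float) -> float:
    """Tokens generated after shortening outputs by a fraction `reduction`."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return baseline_tokens * (1.0 - reduction)


def estimate_generation_cost(num_tokens: float, price_per_million_tokens: float) -> float:
    """Cost of generating `num_tokens`, assuming cost is linear in token count."""
    return num_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical inputs: neither number comes from the article.
baseline_tokens = 10_000          # tokens in one long reasoning trace
price_per_million_tokens = 2.0    # made-up price in dollars

reduced_tokens = tokens_after_reduction(baseline_tokens, 0.57)
baseline_cost = estimate_generation_cost(baseline_tokens, price_per_million_tokens)
reduced_cost = estimate_generation_cost(reduced_tokens, price_per_million_tokens)

print(f"tokens: {baseline_tokens} -> {reduced_tokens:.0f}")
print(f"cost per response: ${baseline_cost:.4f} -> ${reduced_cost:.4f}")
```

Under the linear assumption the relative saving in cost equals the relative saving in tokens, so the absolute price only changes the scale of the numbers, not the ratio.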

The open source nature of the Sky-T1-32B-Flash further enhances its utility. Researchers and developers gain access to a comprehensive pipeline, from data generation to evaluation, allowing them to replicate results and explore potential improvements. The availability of model weights and datasets encourages the wider AI community to build on this foundation and tackle new challenges.

Evaluation insights highlight the model’s ability to handle diverse and complex reasoning tasks effectively. For example, in fields such as mathematics and coding, where precision and logical consistency are essential, the Sky-T1-32B-Flash consistently delivers concise and accurate output. This reliability positions the model as a valuable tool for both academic research and industrial applications.

Conclusion

Sky-T1-32B-Flash addresses key challenges in AI development, including overthinking and high inference costs, and sets a new standard for efficiency and accessibility. Its ability to reduce computational waste while maintaining accuracy across different domains makes it a practical and powerful tool for real-world applications.

Open-sourcing the entire development pipeline marks a decisive step towards democratizing AI research. By sharing methods, model weights, and datasets, NovaSky Lab fosters a culture of collaboration and transparency, encouraging innovation across the AI community. The Sky-T1-32B-Flash is not just a model, but a comprehensive framework for building efficient, high-performance AI systems.

Check out the model on Hugging Face and the accompanying blog post. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His latest endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
