Biophysical modeling is a valuable tool for understanding brain function because it connects neural dynamics at the cellular level with large-scale brain activity. These models are governed by biologically interpretable parameters, many of which can be measured directly through experiments. However, some parameters remain unknown and must be tuned so that simulations fit empirical data, such as resting-state fMRI. Traditional optimization methods (including exhaustive search, gradient descent, evolutionary algorithms and Bayesian optimization) require repeated numerical integration of complex differential equations, making them computationally intensive and difficult to scale to models involving many parameters or brain regions. As a result, many studies simplify the problem by fitting only a few parameters or assuming uniform properties across regions, which limits biological realism.

Recent efforts aim to improve biological plausibility by accounting for spatial heterogeneity in cortical properties, using advanced optimization techniques such as Bayesian or evolutionary strategies. These methods improve the match between simulated and real brain activity and can generate interpretable measures, such as the excitation/inhibition ratio, validated through pharmacological and PET imaging. Despite these advances, a significant bottleneck remains: the high computational cost of integrating differential equations during optimization. Deep neural networks (DNNs) have been proposed in other scientific fields to approximate this process by learning the relationship between model parameters and the resulting output, which significantly speeds up computation. However, applying DNNs to brain models is more challenging because of the stochastic nature of the equations and the large number of integration steps required, so current DNN-based methods are insufficient without significant adaptation.
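To make the integration bottleneck concrete, here is a minimal sketch of Euler-Maruyama integration for a toy stochastic rate model. The equation, the function name `simulate_toy_rates`, the 4-region connectivity matrix and all parameter values are illustrative assumptions, not the FIC model or the study's settings. The point is only that every candidate parameter value pays for a full simulation.

```python
import numpy as np

def simulate_toy_rates(sc, coupling, n_steps=2000, dt=0.01, sigma=0.05, seed=0):
    """Integrate dx = (-x + tanh(coupling * SC @ x)) dt + sigma dW with Euler-Maruyama.

    Toy stand-in for a mean field model (hypothetical, not the FIC equations).
    Returns an array of shape (n_steps, n_regions).
    """
    rng = np.random.default_rng(seed)
    n_regions = sc.shape[0]
    x = np.zeros(n_regions)
    traj = np.empty((n_steps, n_regions))
    for t in range(n_steps):
        drift = -x + np.tanh(coupling * (sc @ x))
        noise = sigma * np.sqrt(dt) * rng.standard_normal(n_regions)
        x = x + drift * dt + noise  # one Euler-Maruyama step
        traj[t] = x
    return traj

# Hypothetical 4-region structural connectivity (symmetric, zero diagonal).
sc = np.array([[0.0, 0.5, 0.2, 0.0],
               [0.5, 0.0, 0.3, 0.1],
               [0.2, 0.3, 0.0, 0.4],
               [0.0, 0.1, 0.4, 0.0]])

candidates = np.linspace(0.1, 2.0, 20)  # 20 candidate values of one free parameter
n_steps = 2000
trajectories = [simulate_toy_rates(sc, g, n_steps=n_steps) for g in candidates]

# Every candidate requires its own full simulation.
total_steps = len(candidates) * n_steps
print(total_steps)  # 40000
```

Even in this toy setting the step count grows linearly with the number of candidates; with more free parameters, more regions and longer simulations, the cost rises quickly.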

Researchers from institutions including the National University of Singapore, the University of Pennsylvania and Universitat Pompeu Fabra have introduced DELSSOME (DEep Learning for Surrogate Statistics Optimization in MEan field modeling). This framework replaces costly numerical integration with a deep learning model that predicts whether specific parameters yield biologically realistic brain dynamics. Applied to the feedback inhibition control (FIC) model, it offers a 2000× speedup while maintaining accuracy. Integrated with evolutionary optimization, it generalizes across datasets, such as HCP and PNC, without further tuning, achieving a 50× speedup. This approach enables large-scale, biologically grounded modeling in population-level neuroscience studies.

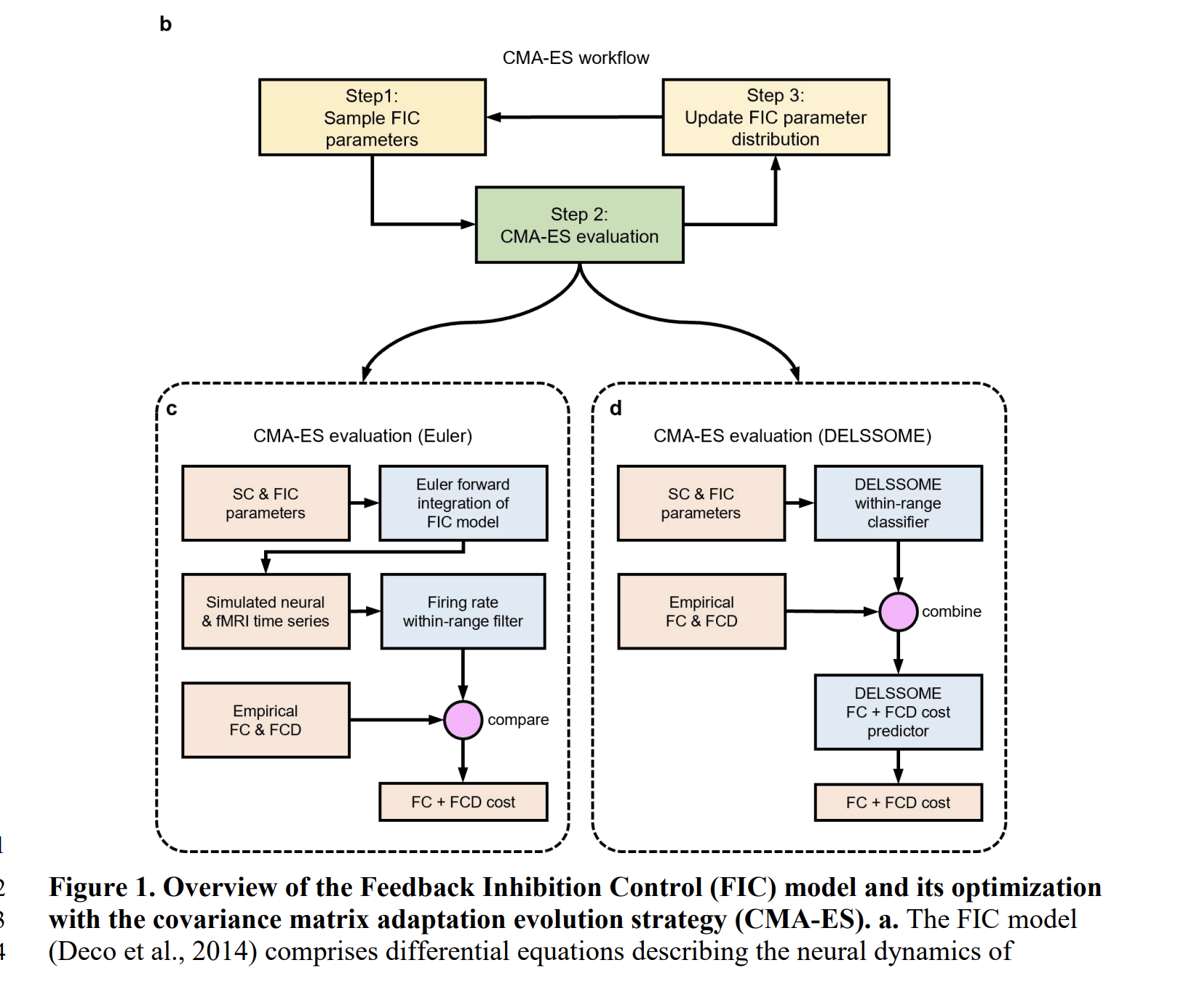

The study used neuroimaging data from the HCP and PNC datasets, processing resting-state fMRI and diffusion MRI scans to compute functional connectivity (FC), functional connectivity dynamics (FCD) and structural connectivity (SC) matrices. A deep learning model, DELSSOME, was developed with two components: a within-range classifier that predicts whether firing rates fall within a biologically plausible range, and a cost predictor that estimates the discrepancy between simulated and empirical FC/FCD data. Training data were generated with CMA-ES optimization, yielding over 900,000 data points across training, validation and test sets. Separate MLPs embedded inputs such as FIC parameters, SC and empirical FC/FCD to support accurate prediction.
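As a rough illustration of the FC and FCD matrices mentioned above, the sketch below computes both from a regional time series using NumPy. The window length, the random input data and the helper `fc_correlation_cost` are assumptions for illustration; the study's exact preprocessing and cost definition may differ.

```python
import numpy as np

def functional_connectivity(ts):
    """Pearson correlation between regional time series; ts has shape (time, regions)."""
    return np.corrcoef(ts, rowvar=False)

def fcd_matrix(ts, window=30, step=1):
    """Correlate the upper triangles of sliding-window FC matrices.

    Returns an array of shape (n_windows, n_windows).
    """
    n_time, n_regions = ts.shape
    iu = np.triu_indices(n_regions, k=1)
    windows = [functional_connectivity(ts[s:s + window])[iu]
               for s in range(0, n_time - window + 1, step)]
    return np.corrcoef(np.array(windows))  # rows are windows

def fc_correlation_cost(fc_sim, fc_emp):
    """One common mismatch measure (an assumption here): 1 - r over upper triangles."""
    iu = np.triu_indices(fc_sim.shape[0], k=1)
    return 1.0 - np.corrcoef(fc_sim[iu], fc_emp[iu])[0, 1]

# Random data standing in for a 5-region BOLD time series with 120 time points.
rng = np.random.default_rng(0)
ts = rng.standard_normal((120, 5))

fc = functional_connectivity(ts)
fcd = fcd_matrix(ts, window=30)
print(fc.shape, fcd.shape)  # (5, 5) (91, 91)
```

A surrogate that predicts the cost directly never has to build these matrices for simulated data, because it skips the simulation entirely.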

The FIC model simulates the activity of excitatory and inhibitory neurons in cortical regions using a system of differential equations. The model was fitted with the CMA-ES algorithm, which evaluates many parameter sets through computationally expensive numerical integration. To reduce this cost, the researchers introduced DELSSOME, a deep learning-based surrogate that predicts whether model parameters will yield biologically plausible firing rates and realistic FCD. DELSSOME achieved a 2000× speedup in evaluation and a 50× speedup in optimization, while maintaining accuracy comparable to the original method.
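The sketch below shows one way a surrogate could sit inside an evolutionary loop: candidates are screened by a plausibility check and ranked by a predicted cost, with no numerical integration in the loop. It uses a simple truncation-selection evolution strategy with a fixed step-size decay, not CMA-ES, and `surrogate_in_range`, `surrogate_cost` and `TARGET` are hand-written hypothetical stand-ins for trained networks.

```python
import numpy as np

TARGET = np.array([0.7, 0.3, 1.1])  # hypothetical optimum of the toy cost

def surrogate_in_range(params):
    """Stand-in for a within-range classifier (hypothetical box constraint)."""
    return bool(np.all((params >= 0.0) & (params <= 2.0)))

def surrogate_cost(params):
    """Stand-in for a cost predictor (hypothetical quadratic bowl)."""
    return float(np.sum((params - TARGET) ** 2))

def evolve(n_generations=40, pop_size=30, n_elite=5, seed=0):
    """Simple evolution strategy driven only by surrogate predictions."""
    rng = np.random.default_rng(seed)
    mean = np.full(TARGET.shape, 1.0)
    sigma = 0.5
    for _ in range(n_generations):
        population = mean + sigma * rng.standard_normal((pop_size, mean.size))
        # Screen: discard candidates flagged as biologically implausible.
        valid = [p for p in population if surrogate_in_range(p)]
        if len(valid) < n_elite:
            continue  # resample next generation instead of updating
        # Rank the survivors by predicted cost and keep the best few.
        valid.sort(key=surrogate_cost)
        elite = np.array(valid[:n_elite])
        mean = elite.mean(axis=0)
        sigma *= 0.9  # fixed decay; CMA-ES adapts a full covariance instead
    return mean

best = evolve()
print(np.round(best, 2))
```

In a real pipeline the surrogate's top candidates would typically still be checked with full numerical integration, which may be one reason an end-to-end optimization speedup is smaller than the per-evaluation speedup.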

In conclusion, the study introduces DELSSOME, a deep learning framework that significantly speeds up parameter estimation in biophysical brain models, achieving a 2000× speedup over traditional Euler integration and a 50× speedup when combined with CMA-ES optimization. DELSSOME comprises two neural networks that predict firing rate validity and FC+FCD cost using shared embeddings of model parameters and empirical data. The framework generalizes across datasets without further tuning and maintains model accuracy. Although retraining is required for different models or parameters, DELSSOME's core approach, predicting surrogate statistics rather than time series, offers a scalable solution for population-level brain modeling.
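The two-network design with shared input embeddings can be sketched as a plain NumPy forward pass. This is a hypothetical layout with random, untrained weights: the layer sizes, input dimensions and the choice of which embeddings feed each head are assumptions, not the published architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Random (untrained) weights and zero biases for a small MLP."""
    return [(rng.standard_normal((m, n)) * np.sqrt(2.0 / m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(x, layers):
    """Forward pass with ReLU on hidden layers and a linear output layer."""
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x

# Hypothetical input sizes: model parameters, SC edges, empirical FC edges.
N_PARAMS, N_SC, N_FC, EMB = 10, 45, 45, 16

# Embedders shared by both heads.
embed_params = init_mlp([N_PARAMS, 32, EMB])
embed_sc = init_mlp([N_SC, 32, EMB])
embed_fc = init_mlp([N_FC, 32, EMB])

# Head 1: are the firing rates within range? Head 2: predicted FC/FCD cost.
classifier_head = init_mlp([2 * EMB, 32, 1])
cost_head = init_mlp([3 * EMB, 32, 1])

def predict(params, sc, emp_fc):
    z_p = mlp(params, embed_params)
    z_s = mlp(sc, embed_sc)
    z_f = mlp(emp_fc, embed_fc)
    # Assumed wiring: the classifier sees parameters and SC only.
    logit = mlp(np.concatenate([z_p, z_s], axis=-1), classifier_head)
    p_in_range = 1.0 / (1.0 + np.exp(-logit))
    # Assumed wiring: the cost head also sees the empirical data embedding.
    cost = mlp(np.concatenate([z_p, z_s, z_f], axis=-1), cost_head)
    return p_in_range[..., 0], cost[..., 0]

# A batch of 4 random candidates; one forward pass replaces 4 simulations.
p, c = predict(rng.standard_normal((4, N_PARAMS)),
               rng.standard_normal((4, N_SC)),
               rng.standard_normal((4, N_FC)))
print(p.shape, c.shape)  # (4,) (4,)
```

Because both heads output summary statistics rather than time series, a whole batch of candidates is scored with a few matrix multiplications.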

Sana Hassan, a consulting intern at MarkTechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.