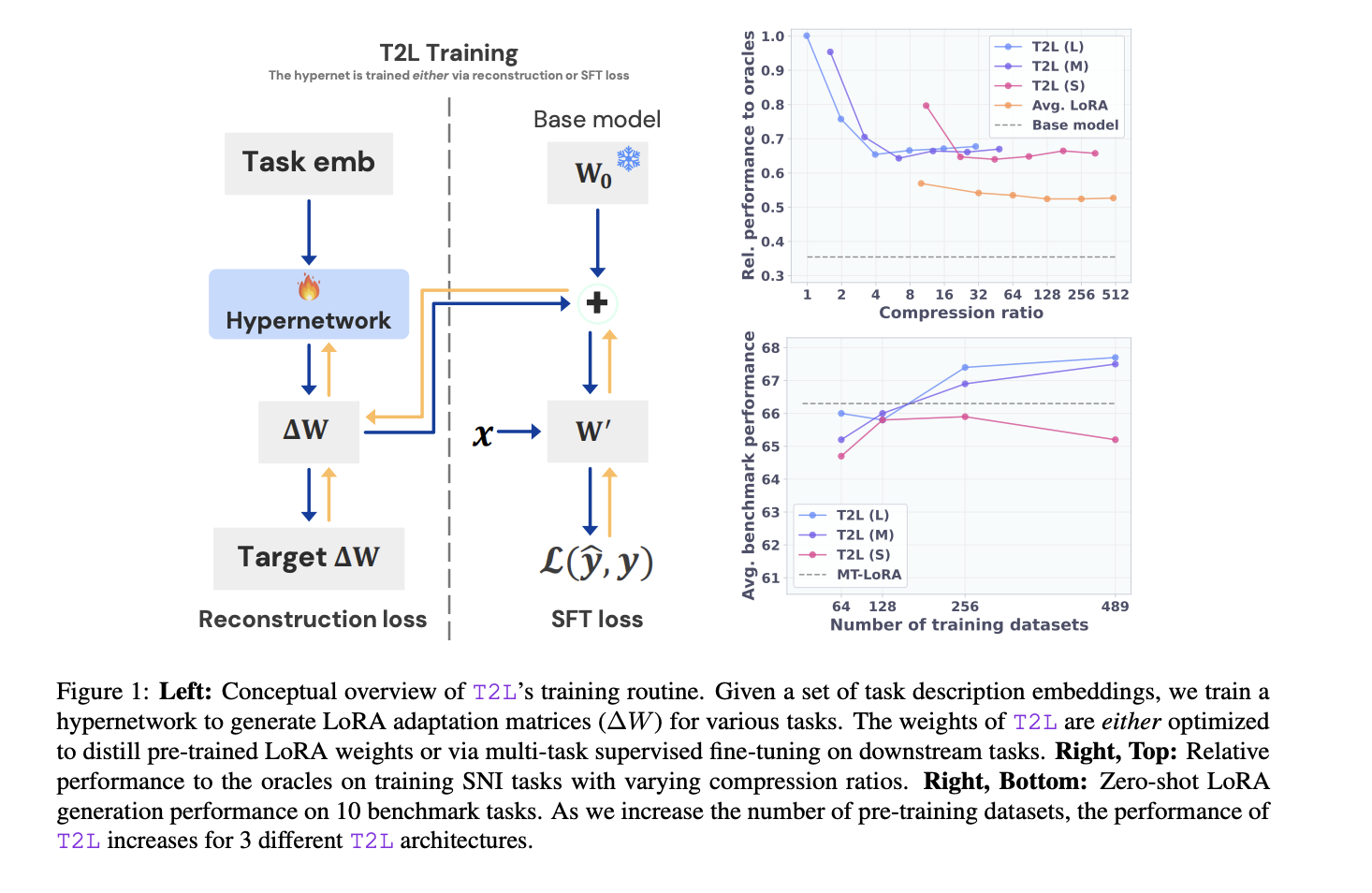

Sakana AI introduces Text-to-LoRA (T2L): a hypernetwork that generates task-specific LLM adapters (LoRAs) from a text description of the task

Transformer models have significantly changed how AI systems approach tasks in natural language understanding, translation, and reasoning. These models, especially large language models (LLMs), have grown in size and complexity to the point where they encompass broad capabilities across many domains. However, applying these models to new, …
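To make the headline's idea concrete, here is a toy sketch of the general pattern it names: a hypernetwork takes an embedding of a task description and emits the two low-rank LoRA factors `A` (rank × d_in) and `B` (d_out × rank), which are then applied as the update W' = W + (alpha / rank) · B·A. This is not Sakana AI's implementation. Everything here is an assumption for illustration: `embed_text` is a hypothetical hashed bag-of-words stand-in for a real sentence encoder, `ToyHypernetwork` is a single untrained linear layer with random weights, and all dimensions are arbitrary.

```python
import hashlib
import random


def embed_text(text: str, dim: int = 8) -> list[float]:
    """Hypothetical stand-in for a sentence encoder: hashed bag-of-words, L2-normalised."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = sum(v * v for v in vec) ** 0.5
    return [v / norm for v in vec] if norm else vec


def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


class ToyHypernetwork:
    """Hypothetical hypernetwork: maps a task embedding to LoRA factors A and B."""

    def __init__(self, emb_dim, d_in, d_out, rank, seed=0):
        rng = random.Random(seed)
        self.d_in, self.d_out, self.rank = d_in, d_out, rank
        n_out = rank * d_in + d_out * rank  # total entries of A plus B
        # One linear layer (n_out x emb_dim) with random, untrained weights.
        self.weight = [[rng.gauss(0.0, 0.1) for _ in range(emb_dim)] for _ in range(n_out)]

    def __call__(self, emb):
        flat = [sum(w * e for w, e in zip(row, emb)) for row in self.weight]
        split = self.rank * self.d_in
        a_flat, b_flat = flat[:split], flat[split:]
        A = [a_flat[i * self.d_in:(i + 1) * self.d_in] for i in range(self.rank)]   # rank x d_in
        B = [b_flat[i * self.rank:(i + 1) * self.rank] for i in range(self.d_out)]  # d_out x rank
        return A, B


def apply_lora(W, A, B, alpha, rank):
    """Return W + (alpha / rank) * (B @ A), leaving the frozen base weight W unchanged."""
    delta = matmul(B, A)  # d_out x d_in update of rank at most `rank`
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


if __name__ == "__main__":
    d_in, d_out, rank = 6, 4, 2
    W = [[0.0] * d_in for _ in range(d_out)]  # frozen base weight (zeros for illustration)
    hyper = ToyHypernetwork(emb_dim=8, d_in=d_in, d_out=d_out, rank=rank)
    A, B = hyper(embed_text("Answer grade-school math word problems step by step"))
    W_adapted = apply_lora(W, A, B, alpha=4.0, rank=rank)
    print(len(W_adapted), len(W_adapted[0]))  # 4 6
```

The design point the sketch tries to show is that only the small factors `A` and `B` are generated per task, so a new adapter comes from a single forward pass of the hypernetwork over a description rather than from fine-tuning. A real system would train the hypernetwork and use a proper text encoder.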