Large language models (LLMs) are integrated into modern technology, driving agent systems that interact dynamically with external environments. Despite their impressive abilities, LLMs are very vulnerable to rapid injection attacks. These attacks occur when opponents inject malicious instructions through non -procedure data sources aimed at compromising the system by extracting sensitive data or performing harmful operations. Traditional security methods, such as model education and fast technique, have shown limited efficiency and emphasize the urgent need for robust defense.

Google DeepMind researchers suggest Camel, a robust defense that creates a protective system layer around LLM, ensuring it, even when underlying models may be susceptible to attack. Unlike traditional approaches that require retraining or model changes, Camel introduces a new paradigm inspired by proven software security practices. It extracts explicit control and data flows from user queries, ensuring that non -procedure input never changes the program logic directly. This design potentially insulates harmful data, which prevents them from affecting the decision-making processes associated with LLM agents.

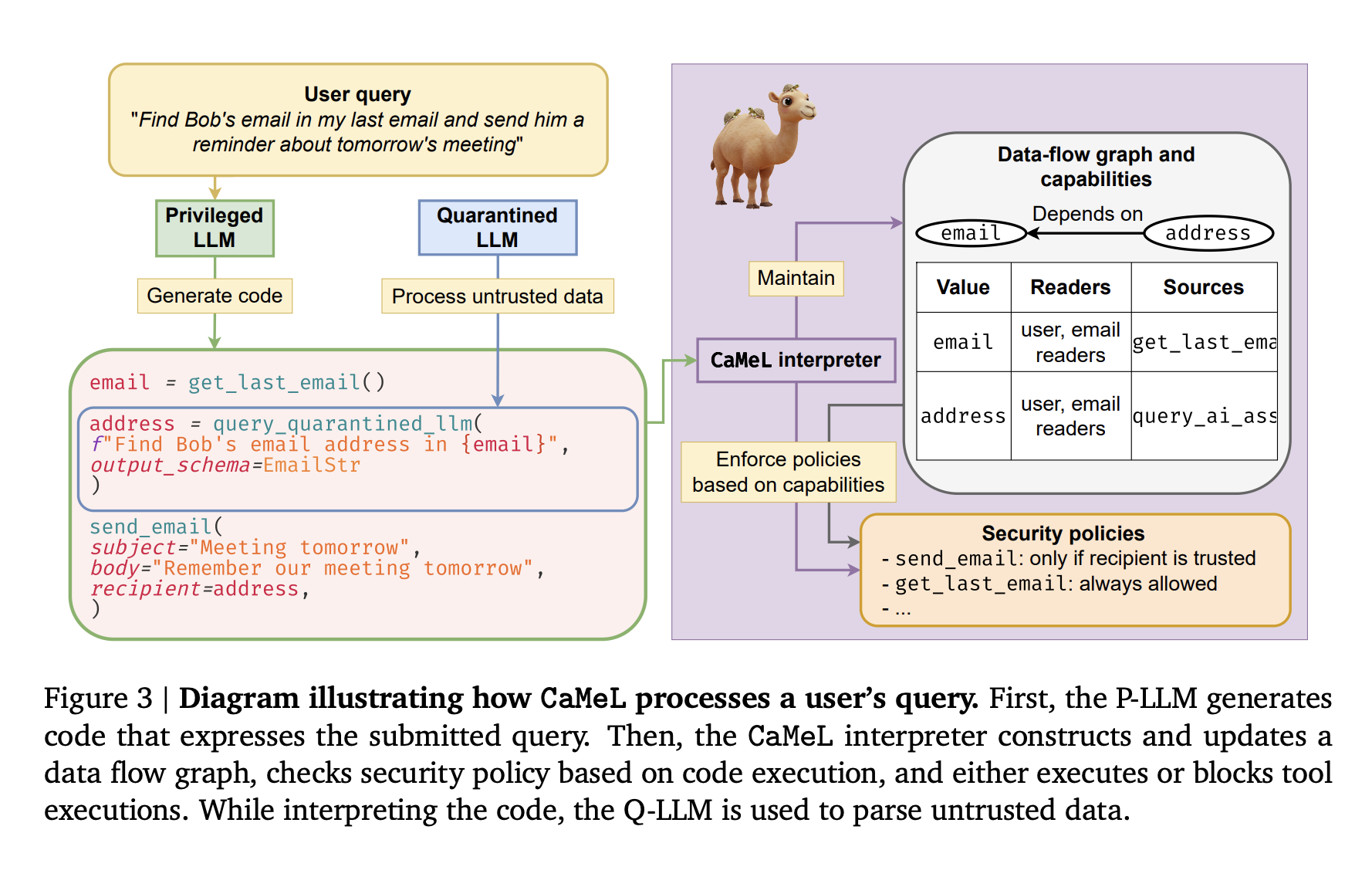

Technically, camel works by using a double model architecture: a privileged LLM and a quarantine LLM. The privileged LLM orchestrates the overall task that isolates sensitive operations from potentially harmful data. The quarantine LLM processes data separately and is explicitly removed by tool call features to limit potential damage. Camel further strengthens the security by assigning metadata or “capacities” to each data value, which defines strict policies on how each piece of information can be used. A custom Python interpreter enforces these fine-grained security policies, monitors Data DAFR income and ensures compliance through explicit control flow restrictions.

Results from empirical evaluation using Agent Dojo -benchmark highlights Camel’s effectiveness. In controlled tests, camel successfully prevented rapid injection attacks by enforcing security policies at granular levels. The system demonstrated the ability to maintain functionality and solve 67% of the tasks securely within the Agent Dojo framework. Compared to other defenses such as “fast sandwiching” and “spotlighting”, Camel significantly surpassed in terms of security and provided almost total protection against attacks while incurred moderate costs. Overhead manifests primarily in token use, with approximately a 2.82 × increase in input -tokens and a 2.73 × increase in output -tokens, acceptable given the provided security guarantees.

In addition, Camel addresses subtle vulnerabilities, such as data-to-control flow manipulations, by strictly controlling dependencies through its metadatabased policies. For example, a scenario in which an opponent tries to utilize benign instructions from email data to check system performance stream would be effectively mitigated by Camel’s strict data and political enforcement mechanisms. This comprehensive protection is important, given that conventional methods may not recognize such indirect manipulation threats.

Finally, Camel represents a significant development in ensuring LLM-powered agent systems. Its ability to rob the enforcement of security policies without changing the underlying LLM offers a strong and flexible approach to defend against rapid injection attacks. By adopting principles from traditional software security, Camel not only reduces rapid injection risks, but also protects against sophisticated attacks that utilize indirect data manipulation. When LLM integration is expanded to sensitive applications, the adoption of camel can be crucial to maintaining user confidence and ensuring secure interactions within complex digital ecosystems.

Check out the paper. All credit for this research goes to the researchers in this project. You are also welcome to follow us on Twitter And don’t forget to join our 85k+ ml subbreddit.

Asif Razzaq is CEO of Marketchpost Media Inc. His latest endeavor is the launch of an artificial intelligence media platform, market post that stands out for its in -depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views and illustrates its popularity among the audience.