Text-to-Speech (TTS) technology has evolved dramatically in recent years, from robotic-sounding voices to highly natural speech synthesis. Bark is an impressive open-source TTS model developed by Suno that can generate remarkably human-like speech in multiple languages, complete with non-verbal sounds such as laughing, sighing and crying.

In this tutorial, we implement Bark using Hugging Face’s Transformers library in a Google Colab environment. By the end, you will be able to:

- Set up and run Bark in Colab

- Generate speech from text input

- Experiment with different voices and speaking styles

- Build practical TTS applications

Bark is fascinating because it is a fully generative text-to-audio model that can produce natural-sounding speech, music, background noise and simple sound effects. Unlike many other TTS systems that rely on extensive audio processing and voice cloning, Bark can generate diverse voices without speaker-specific training.
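In Bark, such effects are requested through the text prompt itself. The Bark README lists bracketed cues such as `[laughter]`, `[laughs]`, `[sighs]`, `[music]`, `[gasps]` and `[clears throat]`, plus `♪` around song lyrics. The sketch below is a hypothetical convenience (`add_cue` and `as_lyrics` are our own names, not part of Bark or Transformers) for attaching those documented cues to a sentence. Because Bark is generative, a cue is a hint rather than a guarantee.

```python
# Non-speech cues listed in the Bark README. Bark reads them as plain text,
# so a cue nudges generation but is not guaranteed to be rendered.
BARK_CUES = ("[laughter]", "[laughs]", "[sighs]", "[music]", "[gasps]", "[clears throat]")


def add_cue(text: str, cue: str, at_start: bool = False) -> str:
    """Hypothetical helper: attach one documented Bark cue to a sentence."""
    if cue not in BARK_CUES:
        raise ValueError(f"unsupported cue {cue!r}; choose from {BARK_CUES}")
    text = text.strip()
    return f"{cue} {text}" if at_start else f"{text} {cue}"


def as_lyrics(line: str) -> str:
    """Wrap a line in music notes, which the Bark README uses to mark song lyrics."""
    return f"♪ {line.strip()} ♪"


prompt = add_cue("Well, that did not go as planned.", "[laughs]")
print(prompt)  # Well, that did not go as planned. [laughs]
print(as_lyrics("In the jungle, the mighty jungle"))
```

The resulting string is passed to the processor exactly like any other text input.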

Let’s get started!

Implementation steps

Step 1: Setting up the environment

First, we need to install the necessary libraries. Bark requires the Transformers library from Hugging Face, along with a few other dependencies:

```python
# Install the required libraries
!pip install transformers==4.31.0
!pip install accelerate
!pip install scipy
!pip install torch
!pip install torchaudio
```

Next, we import the libraries we use:

```python
import torch
import numpy as np
import IPython.display as ipd
from transformers import BarkModel, BarkProcessor

# Check if a GPU is available
device = "cuda" if torch.cuda.is_available() else "cpu"
print(f"Using device: {device}")
```

Step 2: Loading the Bark Model

Now let’s load the Bark model and processor from Hugging Face:

```python
# Load the model and processor
model = BarkModel.from_pretrained("suno/bark")
processor = BarkProcessor.from_pretrained("suno/bark")

# Move the model to the GPU if available
model = model.to(device)
```

Bark is a relatively large model, so this step can take a minute or two to complete while the model weights download.

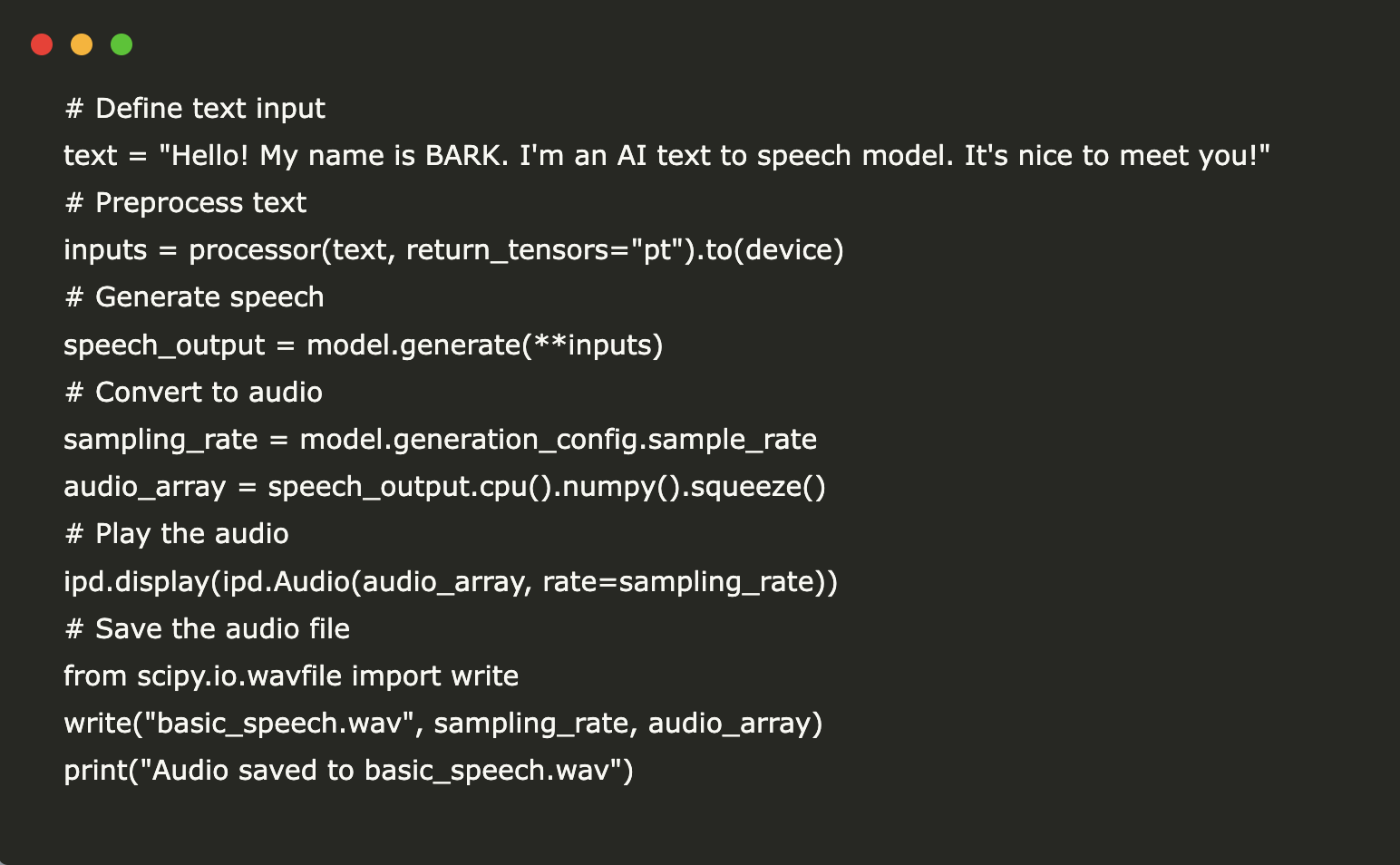

Step 3: Generating basic speech

Let’s start with a simple example to generate speech from text:

```python
# Define the text input
text = "Hello! My name is BARK. I'm an AI text to speech model. It's nice to meet you!"

# Preprocess the text
inputs = processor(text, return_tensors="pt").to(device)

# Generate speech
speech_output = model.generate(**inputs)

# Convert to a NumPy array at the model's sample rate
sampling_rate = model.generation_config.sample_rate
audio_array = speech_output.cpu().numpy().squeeze()

# Play the audio
ipd.display(ipd.Audio(audio_array, rate=sampling_rate))

# Save the audio file
from scipy.io.wavfile import write

write("basic_speech.wav", sampling_rate, audio_array)
print("Audio saved to basic_speech.wav")
```

Output: To listen to the audio, please refer to the notebook (linked at the end).
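`scipy.io.wavfile.write` stores Bark’s float32 output as a 32-bit floating-point WAV, and not every player or audio tool handles that format. If you need a more widely supported file, one option is to convert to 16-bit PCM yourself. The sketch below uses only Python’s standard `wave` and `array` modules; `save_pcm16` is a hypothetical helper name, and it assumes samples lie roughly in [-1, 1], clipping anything outside that range.

```python
import array
import sys
import wave


def save_pcm16(path, samples, sample_rate):
    """Write mono float samples as a 16-bit PCM WAV file; return the duration in seconds.

    Samples are assumed to lie roughly in [-1.0, 1.0]; anything outside is clipped.
    """
    pcm = array.array("h")  # signed 16-bit integers on common platforms
    for x in samples:
        x = max(-1.0, min(1.0, float(x)))  # clip to the valid range
        pcm.append(int(round(x * 32767)))  # scale to the int16 range
    if sys.byteorder == "big":
        pcm.byteswap()  # WAV sample data is little-endian
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)  # mono audio
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(int(sample_rate))
        wav.writeframes(pcm.tobytes())
    return len(pcm) / sample_rate
```

After the cell above, `save_pcm16("basic_speech_pcm16.wav", audio_array, sampling_rate)` would write a PCM copy of the same clip. The pure-Python loop keeps the example dependency-free; with NumPy the scaling could be vectorized.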

Step 4: Using different speaker presets

Bark comes with multiple predefined speaker presets in different languages. Let’s explore how to use them:

```python
# List the available English speaker presets
english_speakers = [
    "v2/en_speaker_0",
    "v2/en_speaker_1",
    "v2/en_speaker_2",
    "v2/en_speaker_3",
    "v2/en_speaker_4",
    "v2/en_speaker_5",
    "v2/en_speaker_6",
    "v2/en_speaker_7",
    "v2/en_speaker_8",
    "v2/en_speaker_9"
]

# Choose a speaker preset
speaker = english_speakers[3]  # Using the fourth English speaker preset

# Define the text input
text = "BARK can generate speech in different voices. This is an example of a different speaker preset."

# Add the speaker preset to the input
inputs = processor(text, return_tensors="pt", voice_preset=speaker).to(device)

# Generate speech
speech_output = model.generate(**inputs)

# Convert to audio
audio_array = speech_output.cpu().numpy().squeeze()

# Play the audio
ipd.display(ipd.Audio(audio_array, rate=sampling_rate))
```

Step 5: Generating multilingual speech

Bark supports several languages out of the box. Let’s generate speech in different languages.
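Bark’s v2 speaker presets follow the naming pattern `v2/{language}_speaker_{n}`, with `n` from 0 to 9, and the Bark README lists 13 supported languages. Instead of typing preset names by hand, they can be built from that pattern. `BARK_LANGUAGES` and `bark_presets` are hypothetical helpers of ours (not part of Transformers), and the language table is transcribed from the README, so verify it against the release you use.

```python
# Language codes for Bark's v2 speaker presets, transcribed from the Bark README.
BARK_LANGUAGES = {
    "English": "en", "German": "de", "Spanish": "es", "French": "fr",
    "Hindi": "hi", "Italian": "it", "Japanese": "ja", "Korean": "ko",
    "Polish": "pl", "Portuguese": "pt", "Russian": "ru", "Turkish": "tr",
    "Chinese": "zh",  # simplified Chinese
}


def bark_presets(language):
    """Return the ten v2 preset names for a language name ("Spanish") or code ("es")."""
    code = BARK_LANGUAGES.get(language, language)
    if code not in BARK_LANGUAGES.values():
        raise ValueError(f"no Bark v2 presets known for {language!r}")
    return [f"v2/{code}_speaker_{i}" for i in range(10)]


print(bark_presets("Spanish")[1])  # v2/es_speaker_1
print(len(BARK_LANGUAGES))  # 13
```

For example, `bark_presets(language)[1]` yields the same `..._speaker_1` presets that the loop below selects for each language.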

```python
# Define texts in different languages
texts = {
    "English": "Hello, how are you doing today?",
    "Spanish": "¡Hola! ¿Cómo estás hoy?",
    "French": "Bonjour! Comment allez-vous aujourd'hui?",
    "German": "Hallo! Wie geht es Ihnen heute?",
    "Chinese": "你好!今天你好吗?",
    "Japanese": "こんにちは!今日の調子はどうですか?"
}

# Language-specific voice presets
voice_presets = {
    "English": "v2/en_speaker_1",
    "Spanish": "v2/es_speaker_1",
    "German": "v2/de_speaker_1",
    "French": "v2/fr_speaker_1",
    "Chinese": "v2/zh_speaker_1",
    "Japanese": "v2/ja_speaker_1",
}

# Generate speech for each language
for language, text in texts.items():
    print(f"\nGenerating speech in {language}...")

    # Use a language-specific voice preset if one is defined
    voice_preset = voice_presets.get(language)
    if voice_preset:
        inputs = processor(text, return_tensors="pt", voice_preset=voice_preset).to(device)
    else:
        inputs = processor(text, return_tensors="pt").to(device)

    # Generate speech and convert it to a NumPy array
    speech_output = model.generate(**inputs)
    audio_array = speech_output.cpu().numpy().squeeze()

    # Play the audio
    ipd.display(ipd.Audio(audio_array, rate=sampling_rate))

    # Save one file per language so earlier outputs are not overwritten
    filename = f"speech_{language.lower()}.wav"
    write(filename, sampling_rate, audio_array)
    print(f"Audio saved to {filename}")
```

Step 6: Creating a Practical Application – Audiobook Generator

Let’s build a simple audiobook generator that can convert longer passages of text to speech.
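Bark produces a fairly short clip per call (the Bark README suggests that around 13 seconds of spoken text works best), which is why the generator below splits long text into chunks. Gluing the chunk audio directly together can make sentences run into one another; one common remedy, not part of the original function, is a short silence between chunks. `join_with_pauses` is a hypothetical helper sketched with NumPy, and its 0.25-second default pause is an arbitrary choice.

```python
import numpy as np


def join_with_pauses(chunks, sample_rate, pause_s=0.25):
    """Concatenate 1-D audio chunks with pause_s seconds of silence between them."""
    arrays = [np.asarray(c, dtype=np.float32).ravel() for c in chunks]
    if not arrays:
        return np.zeros(0, dtype=np.float32)
    silence = np.zeros(int(round(pause_s * sample_rate)), dtype=np.float32)
    pieces = [arrays[0]]
    for arr in arrays[1:]:
        pieces.extend([silence, arr])  # silence goes between chunks, not at the ends
    return np.concatenate(pieces)
```

Inside `generate_audiobook`, the `np.concatenate(audio_arrays)` call could be swapped for `join_with_pauses(audio_arrays, sampling_rate)` to get the pauses.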

```python
import re

import numpy as np


def generate_audiobook(text, speaker_preset="v2/en_speaker_2", chunk_size=250):
    """
    Generate an audiobook from a long text by splitting it into chunks
    and processing each chunk separately.

    Args:
        text (str): The text to convert to speech
        speaker_preset (str): The speaker preset to use
        chunk_size (int): Maximum number of characters per chunk

    Returns:
        numpy.ndarray: The generated audio as a NumPy array
    """
    # Split the text into sentences
    sentences = re.split(r'(?<=[.!?])\s+', text.strip())

    chunks = []
    current_chunk = ""

    # Group consecutive sentences into chunks shorter than chunk_size characters
    # (a single sentence longer than chunk_size still becomes its own chunk)
    for sentence in sentences:
        if len(current_chunk) + len(sentence) < chunk_size:
            current_chunk += sentence + " "
        else:
            if current_chunk.strip():  # never append an empty chunk
                chunks.append(current_chunk.strip())
            current_chunk = sentence + " "

    # Add the last chunk if it's not empty
    if current_chunk.strip():
        chunks.append(current_chunk.strip())

    print(f"Split text into {len(chunks)} chunks")

    # Process each chunk
    audio_arrays = []
    for i, chunk in enumerate(chunks):
        print(f"Processing chunk {i + 1}/{len(chunks)}")
        inputs = processor(chunk, return_tensors="pt", voice_preset=speaker_preset).to(device)
        speech_output = model.generate(**inputs)
        audio_arrays.append(speech_output.cpu().numpy().squeeze())

    # Concatenate the chunk audio into a single array
    return np.concatenate(audio_arrays)


# Example usage with a short excerpt from a book
book_excerpt = """
Alice was beginning to get very tired of sitting by her sister on the bank, and of having nothing to do. Once or twice she had peeped into the book her sister was reading, but it had no pictures or conversations in it, "and what is the use of a book," thought Alice, "without pictures or conversations?"

So she was considering in her own mind (as well as she could, for the hot day made her feel very sleepy and stupid), whether the pleasure of making a daisy-chain would be worth the trouble of getting up and picking the daisies, when suddenly a White Rabbit with pink eyes ran close by her.
"""

# Generate the audiobook
audiobook = generate_audiobook(book_excerpt)

# Play the audio
ipd.display(ipd.Audio(audiobook, rate=sampling_rate))

# Save the audio file
write("alice_audiobook.wav", sampling_rate, audiobook)
print("Audiobook saved to alice_audiobook.wav")
```

In this tutorial, we implemented the Bark text-to-speech model using Hugging Face’s Transformers library in Google Colab. We learned how to:

- Set up and load the Bark model in a Colab environment

- Generate basic speech from text input

- Use different speaker presets for variation

- Generate multilingual speech

- Build a practical audiobook generator

Bark represents impressive progress in text-to-speech technology, offering high-quality, expressive speech generation without the need for extensive training or fine-tuning.

Ideas for further experimentation

Some potential next steps to further explore and expand your work with Bark:

- Voice cloning: Experiment with voice cloning techniques to generate speech that mimics specific speakers.

- Integration with other systems: Combine Bark with other AI models, such as language models, to build personalized voice assistants for settings like restaurants and reception desks, content generation tools, translation systems and more.

- Web application: Build a web interface for your TTS system to make it more accessible.

- Custom fine-tuning: Explore techniques for fine-tuning Bark on specific domains or voice styles.

- Performance optimization: Investigate methods for optimizing inference speed for real-time applications. This is an important aspect of any production use, since large general-purpose models like Bark can take significant time to process even a short piece of text.

- Quality evaluation: Implement objective and subjective evaluation metrics to assess the quality of the generated speech.
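For the performance and evaluation ideas above, a simple first metric is the real-time factor (RTF): generation time divided by the duration of the audio produced, where values below 1 mean faster than real time. The sketch below is a hypothetical timing wrapper (`real_time_factor` and `timed_generation` are our own names) that works with any zero-argument generation function.

```python
import time
from typing import Callable, Sequence, Tuple


def real_time_factor(elapsed_s: float, num_samples: int, sample_rate: int) -> float:
    """Generation time divided by audio duration; values below 1.0 are faster than real time."""
    if num_samples <= 0:
        raise ValueError("no audio was produced")
    return elapsed_s / (num_samples / sample_rate)


def timed_generation(
    generate: Callable[[], Sequence[float]], sample_rate: int
) -> Tuple[Sequence[float], float]:
    """Call a zero-argument generation function and return (audio, real-time factor)."""
    start = time.perf_counter()
    audio = generate()
    elapsed = time.perf_counter() - start
    return audio, real_time_factor(elapsed, len(audio), sample_rate)
```

With the tutorial’s objects, a call might look like `audio, rtf = timed_generation(lambda: model.generate(**inputs).cpu().numpy().squeeze(), sampling_rate)`. Because `.cpu()` copies the result back from the GPU, it waits for generation to finish, so the measured time covers the whole call.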

The field of text-to-speech is evolving rapidly, and projects such as Bark are pushing the limits of what is possible. As you continue to explore this technology, you will discover even more exciting applications and improvements.

Here is the Colab notebook.


Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.
