Artificial intelligence has made significant progress in recent years, yet integrating real-time speech interaction with visual content remains a complex challenge. Traditional systems often rely on separate components for voice activity detection, speech recognition, textual dialogue, and text-to-speech synthesis. This segmented approach can introduce delays and may fail to capture the nuances of human conversation, such as emotions or non-speech sounds. These limitations are particularly evident in applications designed to assist visually impaired individuals, where timely and accurate descriptions of visual scenes are essential.

To address these challenges, Kyutai has introduced MoshiVis, an open-source Vision Speech Model (VSM) that enables natural, real-time speech interactions about images. Building on its earlier work with Moshi, a speech-text foundation model designed for real-time dialogue, Kyutai has extended those capabilities to include visual input. This allows users to hold fluid conversations about visual content, marking a notable step forward in AI development.

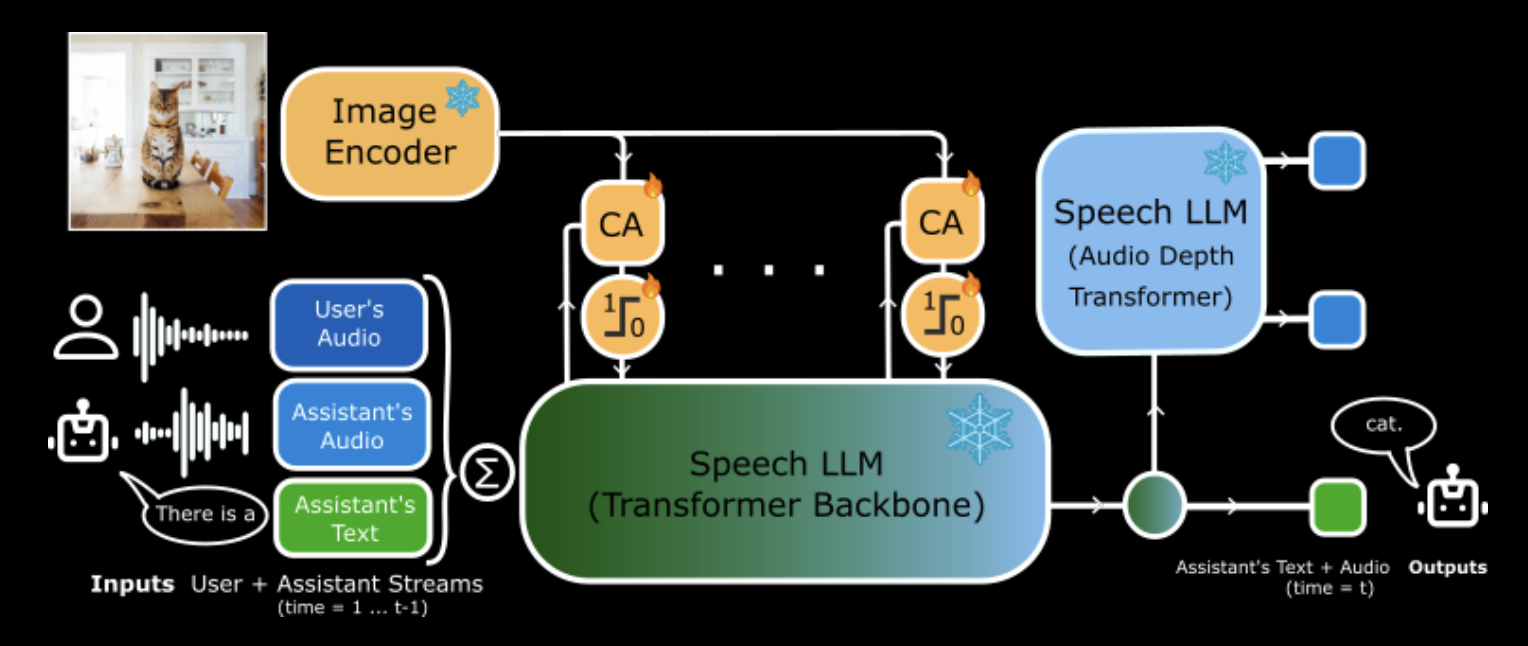

Technically, MoshiVis augments Moshi with lightweight cross-attention modules that inject visual information from an existing visual encoder into Moshi's speech-token stream. This design keeps Moshi's original conversational abilities intact while adding the ability to process and discuss visual input. A gating mechanism within the cross-attention modules lets the model engage with visual data selectively, maintaining efficiency and responsiveness. Notably, MoshiVis adds approximately 7 milliseconds of latency per inference step on consumer-grade devices, such as a Mac Mini with an M4 Pro chip, for a total of 55 milliseconds per inference step. This remains well below the 80-millisecond threshold for real-time latency, ensuring smooth and natural interactions.
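To make the idea of gated cross-attention more concrete, here is a minimal sketch in plain Python. It is not Kyutai's implementation: the function name `gated_cross_attention`, the scalar per-token sigmoid gate, and the omission of learned query/key/value projections are all simplifying assumptions made for illustration. It shows speech-token states attending over image embeddings, with a gate that scales how much visual information is added back to each token.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gated_cross_attention(speech_states, image_embeddings, gate_weights, gate_bias):
    """Hypothetical single-head gated cross-attention (illustrative only).

    speech_states:    list of token vectors (the queries)
    image_embeddings: list of image-patch vectors (used as keys and values)
    gate_weights, gate_bias: parameters of an assumed scalar gate per token

    Learned Q/K/V projections are omitted (treated as identity) for brevity.
    """
    dim = len(image_embeddings[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for h in speech_states:
        # Attention weights of this speech token over all image patches.
        weights = softmax([dot(h, k) * scale for k in image_embeddings])
        # Weighted sum of the image-patch vectors.
        attended = [
            sum(w * v[i] for w, v in zip(weights, image_embeddings))
            for i in range(dim)
        ]
        # Gate in (0, 1): near 0 the token ignores the image entirely.
        gate = sigmoid(dot(gate_weights, h) + gate_bias)
        # Residual connection keeps the original speech state intact.
        outputs.append([hi + gate * ai for hi, ai in zip(h, attended)])
    return outputs
```

When the gate is close to zero, the output equals the input speech state, which is one way such a design could preserve the base model's behavior on turns that do not concern the image.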

In practical use, MoshiVis demonstrates its ability to give detailed descriptions of visual scenes through natural speech. For example, when presented with an image showing green metal structures surrounded by trees and a building with a light brown exterior, MoshiVis says:

“I see two green metal structures with a mesh top and they are surrounded by large trees. In the background you can see a building with a light brown exterior and a black roof that appears to be made of stone.”

This capability opens up new possibilities for applications such as providing audio descriptions for visually impaired users, improving accessibility, and enabling more natural interactions with visual information. By releasing MoshiVis as an open-source project, Kyutai invites the research community and developers to explore and extend this technology, fostering innovation in vision-speech models. The availability of the model weights, inference code, and visual speech benchmarks further supports collaborative efforts to refine and diversify the applications of MoshiVis.

In conclusion, MoshiVis represents a significant advance in AI, merging visual understanding with real-time speech interaction. Its open-source nature encourages widespread adoption and development, paving the way for more accessible and natural interactions with technology. As AI continues to evolve, innovations like MoshiVis bring us closer to seamless integration of multimodal understanding, improving user experiences across a range of domains.


Asif Razzaq is the CEO of Marktechpost Media Inc. His latest endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among readers.