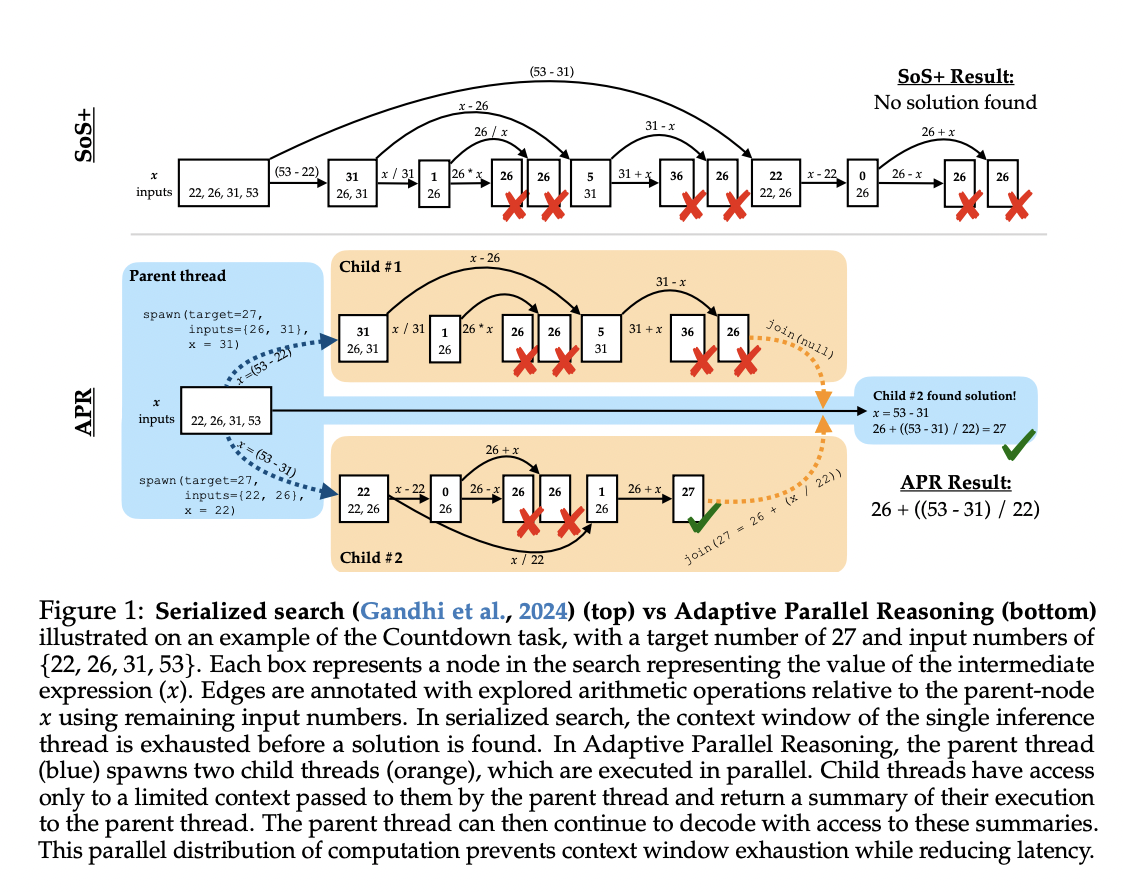

Large language models (LLMs) have made significant progress in reasoning capabilities, exemplified by breakthrough systems such as OpenAI o1 and DeepSeek-R1, which use test-time compute for search and reinforcement learning to optimize performance. Despite this progress, current methods face critical challenges that limit their effectiveness. Serialized chain-of-thought approaches generate excessively long output sequences, increasing latency and pushing against context window limits. In contrast, parallel methods such as best-of-N and self-consistency suffer from poor coordination between inference paths and lack end-to-end optimization, resulting in computational inefficiency and limited improvement potential. Structured inference-time search techniques such as tree-of-thoughts depend on manually designed search structures, which significantly limits their flexibility and ability to scale across different reasoning tasks and domains.

Several approaches have emerged to tackle the computational challenges in LLM reasoning. Inference-time scaling methods have improved downstream task performance by increasing test-time compute, but they typically generate significantly longer output sequences. This raises latency and forces models to fit entire reasoning chains into a single context window, making it difficult to attend to relevant information. Parallelization strategies such as ensembling have tried to mitigate these problems by running multiple independent language model calls simultaneously. However, these methods suffer from poor coordination across parallel threads, leading to redundant computation and inefficient resource utilization. Fixed parallelizable reasoning structures, such as tree-of-thoughts and multi-agent reasoning systems, have been proposed, but their hand-designed search structures limit flexibility and scalability. Other approaches, such as PASTA, decompose tasks into parallel sub-tasks but ultimately reintegrate the complete context into the main inference trajectory, which fails to reduce context usage effectively. Meanwhile, Hogwild! Inference uses parallel worker threads but relies solely on prompting, without end-to-end optimization.

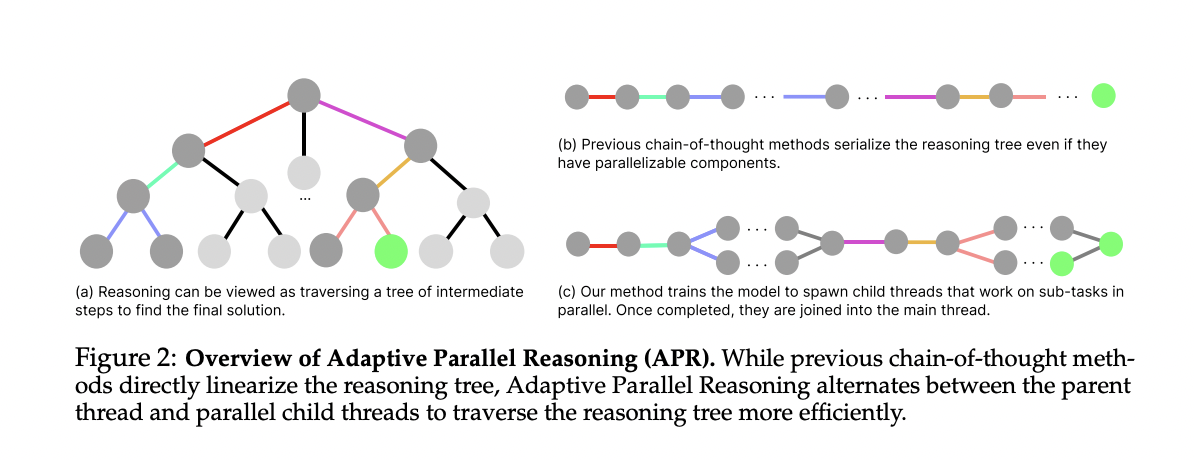

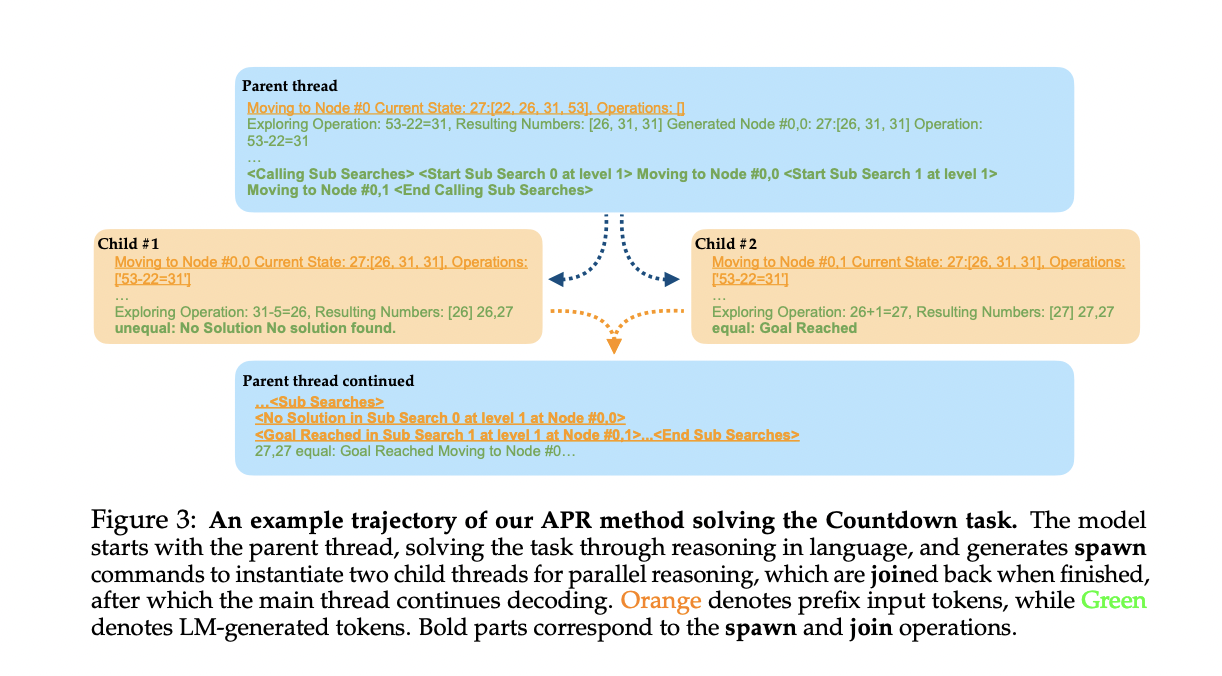

Researchers from UC Berkeley and UCSF have proposed Adaptive Parallel Reasoning (APR). This approach allows language models to dynamically distribute inference-time compute across both serial and parallel operations. The method generalizes existing reasoning approaches, including serialized chain-of-thought reasoning, parallelized inference with self-consistency, and structured search, by training models to determine when and how to parallelize inference operations rather than imposing fixed search structures. APR introduces two key innovations: a parent-child threading mechanism and end-to-end reinforcement learning optimization. The threading mechanism allows parent inference threads to delegate sub-tasks to multiple child threads through a spawn() operation, enabling parallel exploration of distinct reasoning paths. Child threads then return results to the parent thread via a join() operation, so that the parent can continue decoding with this new information. Built on the SGLang model serving framework, APR significantly reduces real-time latency by performing inference in child threads simultaneously through batching. The second innovation, fine-tuning via end-to-end reinforcement learning, optimizes for overall task success without requiring predefined reasoning structures. This approach delivers three significant benefits: higher performance within fixed context windows, superior scaling with increased compute budgets, and improved performance at equivalent latency compared to traditional methods.

The APR architecture implements a multi-threading mechanism that allows language models to dynamically orchestrate parallel inference processes. APR addresses the limitations of serialized reasoning methods by distributing computation across parent and child threads, minimizing latency while improving performance within context constraints. The architecture consists of three key components:

First, the multi-threading inference system allows parent threads to spawn multiple child threads using a spawn(msgs) operation. Each child thread receives its own context and carries out inference independently, yet simultaneously, using the same language model. When a child thread finishes its task, it returns results to the parent via a join(msg) operation, which selectively communicates only the most relevant information. This approach reduces token usage significantly by keeping intermediate search traces confined to the child threads.
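To make the spawn/join flow concrete, here is a minimal Python sketch. It is not the authors' implementation: `fake_decode` is a hypothetical stand-in for language model decoding, the `Thread` class and message strings are invented for illustration, and OS threads are used in place of the batched SGLang inference the article describes. The point is only that each child starts from a fresh context and that the parent sees nothing but the join messages.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Thread:
    """One reasoning thread with its own private context (a list of text lines)."""
    context: list = field(default_factory=list)


def fake_decode(prompt: str) -> list:
    """Hypothetical stand-in for model decoding: some search lines, then a result."""
    steps = [f"explore {prompt} step {i}" for i in range(3)]
    return steps + [f"result for {prompt}"]


def run_child(msg: str) -> str:
    """Run one child thread and return only its join message."""
    child = Thread(context=[msg])            # fresh context: just the spawn message
    child.context.extend(fake_decode(msg))   # intermediate trace stays in the child
    return child.context[-1]                 # join(msg): only the last line goes back


def spawn(parent: Thread, msgs: list) -> None:
    """Spawn one child per message, run them concurrently, then join the results."""
    parent.context.append(f"spawn({msgs})")
    with ThreadPoolExecutor(max_workers=len(msgs)) as pool:
        results = list(pool.map(run_child, msgs))  # result order matches msgs
    parent.context.extend(f"join: {r}" for r in results)


parent = Thread(context=["task: reach 24 from [3, 5, 7, 9]"])
spawn(parent, ["try 9 * 3 first", "try 7 + 5 first"])
print(parent.context)
```

After the call, the parent context holds four lines (task, spawn, two joins), while the six "explore" lines produced by the children never enter it. That is the token saving the paragraph above refers to.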

Second, the training methodology uses a two-phase approach. Initially, APR employs supervised learning on automatically generated demonstrations that incorporate both depth-first and breadth-first search strategies, creating hybrid search patterns. The symbolic solver creates demonstrations with parallelization, decomposing searches into multiple components that avoid context window bottlenecks during both training and inference.
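As a rough illustration of how a symbolic solver can produce search traces, the sketch below runs a plain depth-first search on a Countdown-style puzzle (combine all given numbers with +, -, *, / to hit a target) and records every step it tries. This is an assumption-laden toy: the function name, trace format, and puzzle rules are chosen for illustration, and it covers only the depth-first part, not the hybrid depth-first/breadth-first or parallelized demonstrations described above.

```python
from itertools import combinations


def solve_countdown(numbers, target, trace=None):
    """Depth-first search that combines two numbers at a time until one remains.

    Returns the list of steps that reaches the target, or None if no solution
    exists. Every attempted step is appended to `trace`, including dead ends.
    """
    if trace is None:
        trace = []
    if len(numbers) == 1:
        return [] if numbers[0] == target else None
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = [n for k, n in enumerate(numbers) if k not in (i, j)]
        hi, lo = max(a, b), min(a, b)
        candidates = [(f"{a}+{b}", a + b), (f"{a}*{b}", a * b), (f"{hi}-{lo}", hi - lo)]
        if lo != 0 and hi % lo == 0:          # only allow exact integer division
            candidates.append((f"{hi}/{lo}", hi // lo))
        for expr, value in candidates:
            step = f"{expr}={value}"
            trace.append(step)                # record the attempt, successful or not
            sub = solve_countdown(rest + [value], target, trace)
            if sub is not None:
                return [step] + sub
    return None


trace = []
solution = solve_countdown([3, 5, 7], 22, trace)
print(solution)   # ['3*5=15', '7+15=22']
print(len(trace)) # 6 attempted steps, four of them dead ends
```

The full `trace`, dead ends included, is the kind of serialized search record a model could be trained to imitate. In a parallel demonstration, each top-level branch would instead be handed to its own child thread.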

Finally, the system implements end-to-end reinforcement learning optimization with GRPO (Group Relative Policy Optimization). In this phase, the model learns to decide strategically when and how broadly to invoke child threads, optimizing for both computational efficiency and reasoning effectiveness. The model iteratively samples reasoning traces, evaluates their correctness, and adjusts its parameters accordingly, ultimately learning how to balance parallel exploration against context window limits for maximum performance.
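The core idea behind GRPO is that each sampled trace is scored relative to the other traces drawn for the same prompt, rather than against a learned value function. The sketch below shows only that normalization step. The binary reward, the use of the population standard deviation, and the `eps` term are assumptions for illustration; the article does not give the exact reward or hyperparameters used for APR.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of samples drawn for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)                      # population std over the group
    return [(r - mu) / (sigma + eps) for r in rewards]


# Assumed binary reward: 1.0 if the sampled trace solved the task, else 0.0.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Traces that beat the group average get a positive advantage and are reinforced; those below it are discouraged. If every trace in a group earns the same reward, all advantages are zero and that group contributes no learning signal.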

The evaluation compared Adaptive Parallel Reasoning against serialized chain-of-thought reasoning and self-consistency methods using a standard decoder-only language model with 228M parameters, built on the Llama2 architecture and supporting a 4,096-token context window. All models were initialized through supervised learning on 500,000 trajectories from symbolic solvers. For direct compute-accuracy assessment, the team implemented a budget constraint method with context window conditioning for SoS+ (serialized Stream of Search) models and thread count conditioning for APR models. The SGLang framework was used for inference because of its support for continuous batching and RadixAttention, enabling an efficient APR implementation.

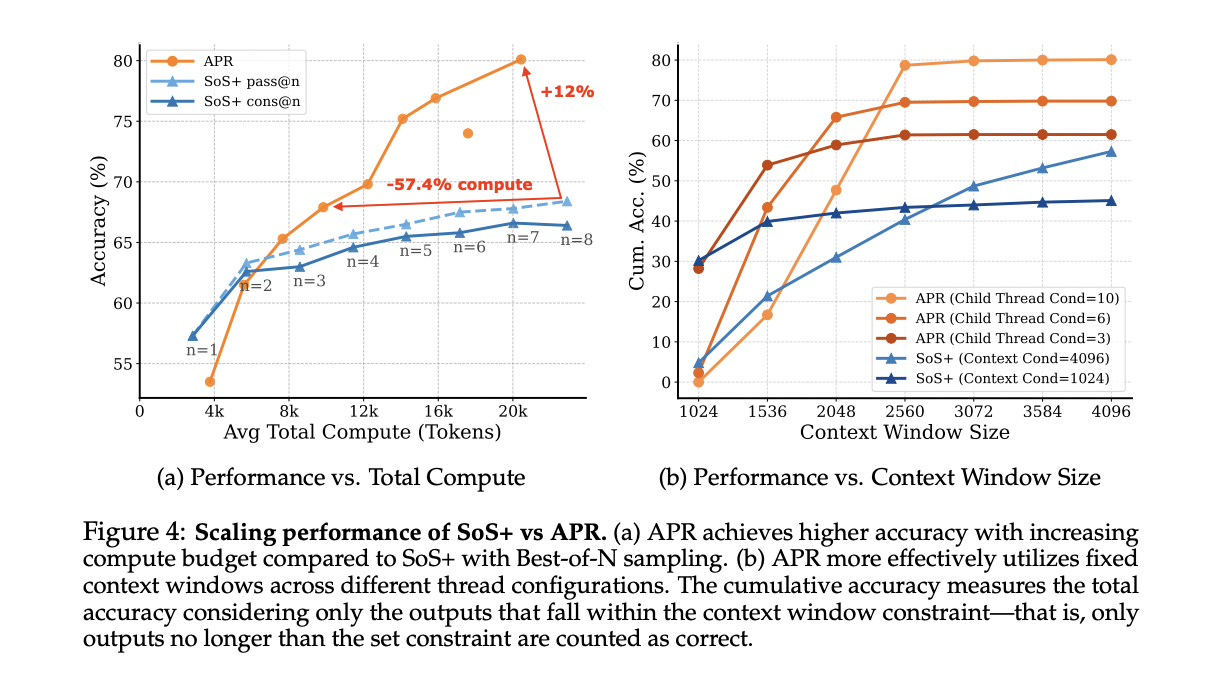

Experimental results show that APR consistently outperforms serialized methods across multiple dimensions. When scaling with higher compute, APR initially underperforms in low-compute regimes because of parallelism overhead, but significantly outperforms SoS+ as compute increases, achieving a 13.5% improvement at 20K tokens and surpassing SoS+ pass@8 performance while using 57.4% less compute. For context window scaling, APR consistently uses context more efficiently, with 10 threads achieving approximately 20% higher accuracy at the 4K-token limit by distributing reasoning across parallel threads instead of containing entire traces within a single context window.

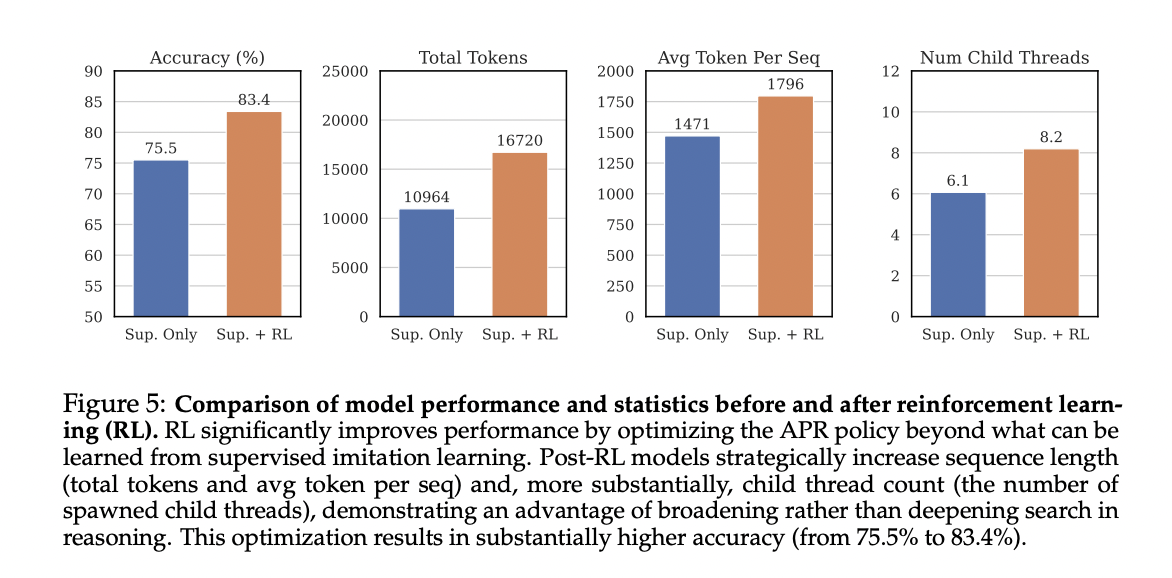

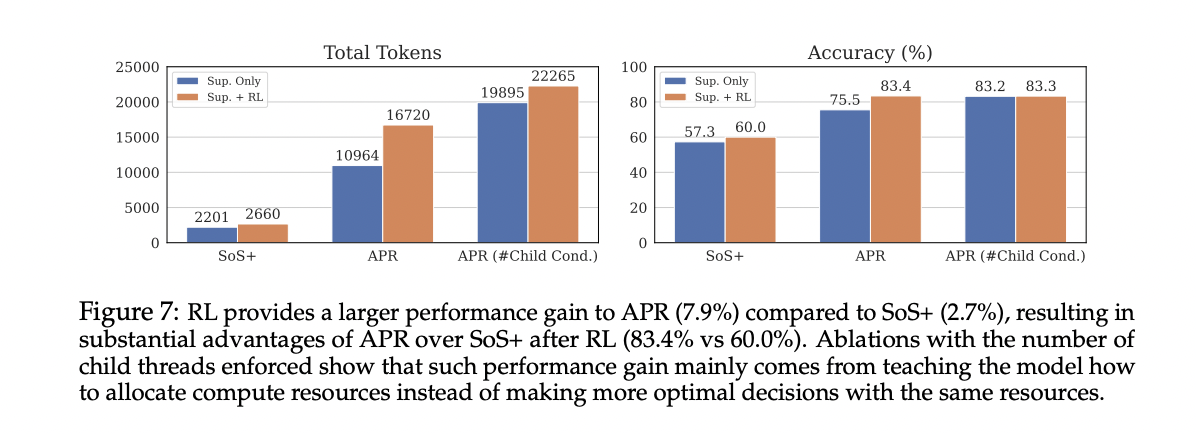

End-to-end reinforcement learning significantly improves APR performance, increasing accuracy from 75.5% to 83.4%. The RL-optimized models demonstrate markedly different behavior, increasing both sequence length (22.1% relative increase) and the number of child threads (34.4% relative increase). This reveals that for the Countdown task, RL-optimized models favor broader search patterns over deeper ones, demonstrating the algorithm’s ability to discover optimal search strategies autonomously.

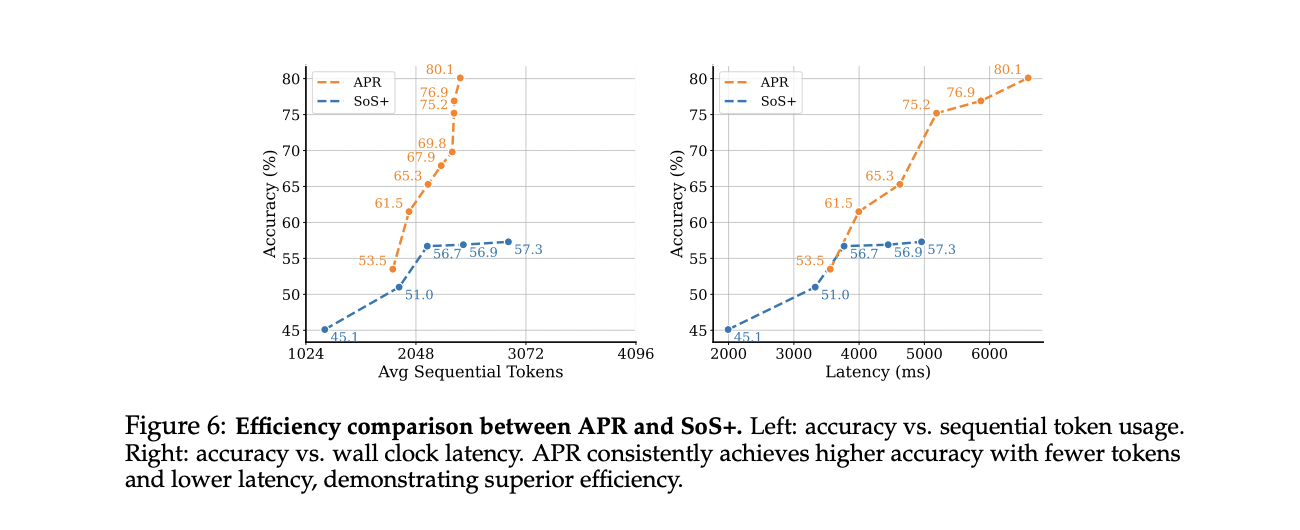

APR demonstrates superior efficiency in both theoretical and practical evaluations. When measuring sequential token usage, APR significantly boosts accuracy with minimal additional sequential tokens beyond 2,048, rarely exceeding 2,500 tokens, while SoS+ shows only marginal improvements despite approaching 3,000 tokens. Real-world latency testing on an 8-GPU NVIDIA RTX A6000 server reveals that APR achieves a significantly better accuracy-latency trade-off, reaching 75% accuracy at 5,000 ms per sample, an 18% absolute improvement over SoS+’s 57%. These results highlight APR’s effective hardware parallelization and its potential for optimized performance in deployment scenarios.
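The distinction between sequential and total tokens can be sketched as follows. This assumes that "sequential tokens" means the longest chain of tokens that must be decoded one after another, so children spawned together contribute only their longest member. The `ThreadTrace` structure and all token counts are hypothetical, not figures from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class ThreadTrace:
    own_tokens: int                               # tokens decoded by this thread itself
    spawns: list = field(default_factory=list)    # each entry: list of child ThreadTrace


def total_tokens(t: ThreadTrace) -> int:
    """All tokens generated anywhere in the thread tree (overall compute)."""
    return t.own_tokens + sum(total_tokens(c) for group in t.spawns for c in group)


def sequential_tokens(t: ThreadTrace) -> int:
    """Tokens on the critical path: parallel siblings count only via the longest."""
    return t.own_tokens + sum(
        max((sequential_tokens(c) for c in group), default=0) for group in t.spawns
    )


# Hypothetical run: the parent decodes 400 tokens and spawns three children once.
children = [ThreadTrace(900), ThreadTrace(700), ThreadTrace(800)]
parent = ThreadTrace(own_tokens=400, spawns=[children])
print(total_tokens(parent))       # 2800
print(sequential_tokens(parent))  # 1300
```

In this toy run the model spends 2,800 tokens of compute while the latency-critical path is only 1,300 tokens long, which is the kind of gap that lets a parallel method gain accuracy without a matching increase in wall-clock time.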

Adaptive Parallel Reasoning represents a significant advance in language model reasoning capabilities by enabling dynamic distribution of compute across serial and parallel paths through a parent-child threading mechanism. By combining supervised training with end-to-end reinforcement learning, APR eliminates the need for manually designed structures while allowing models to develop optimal parallelization strategies. Experimental results on the Countdown task demonstrate APR’s substantial advantages: higher performance within fixed context windows, superior scaling with increased compute budgets, and significantly improved success rates at equivalent latency constraints. These results highlight the potential of reasoning systems that dynamically structure inference processes to achieve improved scalability and efficiency in complex problem-solving tasks.



Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.