LangGraph Multi-Agent Swarm is a Python library designed to orchestrate multiple AI agents as a cohesive “swarm.” It builds on LangGraph, a framework for constructing robust, stateful agent workflows, to enable a specialized form of multi-agent architecture. In a swarm, agents with different specializations dynamically hand off control to one another as tasks demand, rather than a single monolithic agent attempting everything. The system tracks which agent was last active, so when a user provides the next input, the conversation resumes seamlessly with that same agent. This approach addresses the problem of building cooperative AI workflows in which the most qualified agent handles each sub-task without losing context or continuity.

LangGraph Swarm aims to make such multi-agent coordination easier and more reliable for developers. It provides abstractions for linking individual language model agents (each potentially with its own tools and prompts) into one integrated application. The library comes with out-of-the-box support for streaming responses, short-term and long-term memory integration, and even human-in-the-loop intervention, thanks to its foundation on LangGraph. By building on LangGraph (a lower-level orchestration framework) and fitting naturally into the wider LangChain ecosystem, LangGraph Swarm lets machine learning engineers and researchers build complex AI agent systems while maintaining explicit control over the flow of information and decisions.

LangGraph Swarm Architecture and Key Features

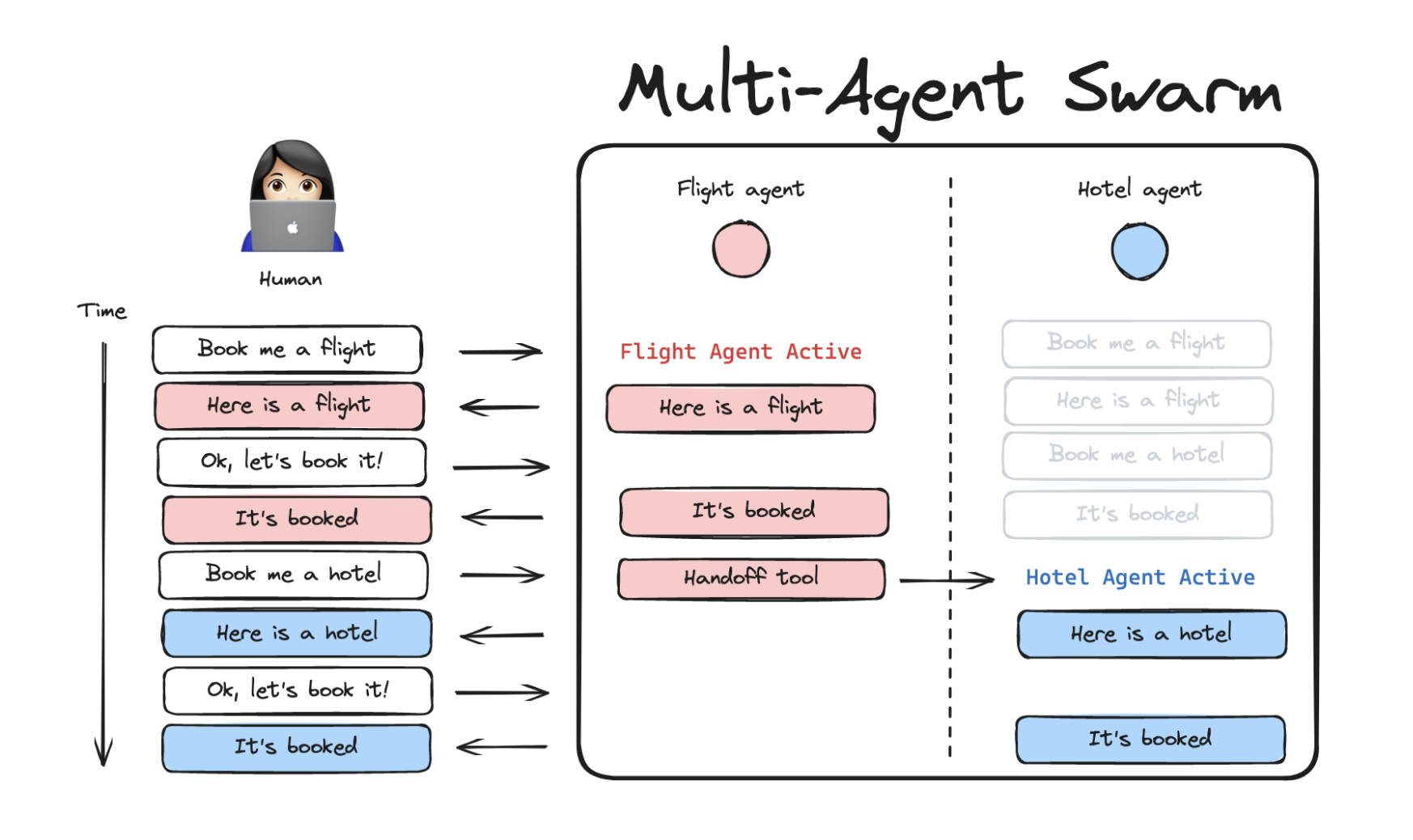

At its core, LangGraph Swarm represents multiple agents as nodes in a directed state graph: edges define handoff paths, and a shared state tracks the ‘active_agent’. When an agent invokes a handoff, the library updates that field and transfers the necessary context so the next agent continues the conversation seamlessly. This setup supports collaborative specialization, letting each agent focus on a narrow domain while offering customizable handoff tools for flexible workflows. Built on LangGraph’s streaming and memory modules, Swarm preserves short-term conversational context and long-term knowledge, ensuring coherent, multi-turn interactions even as control shifts between agents.
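The routing idea described here can be illustrated with a toy model in plain Python. This is only a sketch and not how the library is implemented: `SwarmState`, `run_turn`, and the two rule-based agent functions are hypothetical names, the agents are keyword rules rather than LLM calls, and a handoff simply takes effect on the next user turn.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class SwarmState:
    """Shared state: the conversation so far plus the agent that has control."""
    messages: list[str] = field(default_factory=list)
    active_agent: str = "alice"


# An agent reads the state and returns (reply, handoff target or None).
Agent = Callable[[SwarmState], tuple[str, Optional[str]]]


def alice(state: SwarmState) -> tuple[str, Optional[str]]:
    # Hypothetical keyword rule standing in for an LLM choosing to hand off.
    if "pirate" in state.messages[-1].lower():
        return "Handing you over to Bob.", "bob"
    return "Alice here: happy to help with math.", None


def bob(state: SwarmState) -> tuple[str, Optional[str]]:
    if "math" in state.messages[-1].lower():
        return "Arr, that be a job for Alice.", "alice"
    return "Arr, Bob at yer service!", None


AGENTS: dict[str, Agent] = {"alice": alice, "bob": bob}


def run_turn(state: SwarmState, user_input: str) -> str:
    """Route the user message to the active agent, then apply any handoff."""
    state.messages.append(f"user: {user_input}")
    reply, target = AGENTS[state.active_agent](state)
    state.messages.append(f"{state.active_agent}: {reply}")
    if target is not None:
        state.active_agent = target  # the next turn goes to the new agent
    return reply


state = SwarmState()
run_turn(state, "I want to talk to a pirate")   # Alice hands off to Bob
second_reply = run_turn(state, "Hello again")   # the conversation resumes with Bob
```

Because the active agent lives in the shared state rather than in any one agent, the second user message reaches Bob without the caller having to name him.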

Agent Coordination via Handoff Tools

LangGraph Swarm’s handoff tools let one agent transfer control to another by issuing a ‘Command’ that updates the shared state, switches the ‘active_agent’, and passes along context such as relevant messages or a custom summary. While the default tool hands off the full conversation and inserts a notification, developers can implement custom tools to filter context, add instructions, or rename the action to influence the LLM’s behavior. Unlike autonomous AI-routing patterns, Swarm’s routing is explicitly defined: each handoff tool specifies which agent may take over, ensuring predictable flows. This mechanism supports collaboration patterns such as a “Travel Planner” that delegates medical questions to a “Medical Advisor,” or a coordinator that distributes technical and billing queries to specialized experts. It relies on an internal router to direct user messages to the current agent until another handoff occurs.
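As a rough illustration of a custom handoff that filters context, the sketch below models the command as a plain dictionary. The names `make_handoff_tool` and `apply_command`, the `goto`/`update` keys, and the `keep_last` parameter are assumptions made for this example; they are not the library's handoff API.

```python
from typing import Callable


def make_handoff_tool(target: str, keep_last: int = 2) -> Callable[[dict], dict]:
    """Build a tool that transfers control to `target` with trimmed context.

    `keep_last` must be at least 1; only that many recent messages are passed on.
    """
    def handoff(state: dict) -> dict:
        trimmed = state["messages"][-keep_last:]
        note = f"[handoff] control transferred to {target}"
        # Command-like result: where to go next and how to update shared state.
        return {
            "goto": target,
            "update": {"messages": trimmed + [note], "active_agent": target},
        }

    # The tool name is what a model would see, so it states the action plainly.
    handoff.__name__ = f"transfer_to_{target}"
    return handoff


def apply_command(state: dict, command: dict) -> dict:
    """Return a new state with the command's updates merged in."""
    return {**state, **command["update"]}


state = {
    "messages": [
        "user: plan my trip",
        "planner: sure, where to?",
        "user: which vaccines do I need?",
    ],
    "active_agent": "travel_planner",
}
to_medical = make_handoff_tool("medical_advisor", keep_last=1)
new_state = apply_command(state, to_medical(state))
```

Each tool is bound to exactly one target when it is created, which is what keeps the routing explicit: an agent can only hand off to the agents whose tools it has been given.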

State Management and Memory

State and memory management are essential for preserving context as agents hand off tasks. By default, LangGraph Swarm maintains a shared state containing the conversation history and an ‘active_agent’ marker, and uses a checkpointer (such as an in-memory saver or a database store) to persist this state across turns. It also supports a memory store for long-term knowledge, allowing the system to log facts or past interactions for future sessions while keeping a window of recent messages for immediate context. Together, these mechanisms ensure the swarm never “forgets” which agent is active or what has been discussed, enabling seamless multi-turn dialogues and the accumulation of user preferences or critical data over time.
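The interplay of per-conversation checkpoints, a short-term message window, and a long-term store can be sketched with the standard library alone. Everything here is hypothetical scaffolding for illustration (`ToyCheckpointer`, `turn`, the `remember:` prefix, and the `window` size); a real deployment would use LangGraph's own checkpointer and store.

```python
import copy
from typing import Optional


class ToyCheckpointer:
    """Keeps one state snapshot per conversation thread, in memory only."""

    def __init__(self) -> None:
        self._snapshots: dict[str, dict] = {}

    def load(self, thread_id: str) -> dict:
        default = {"messages": [], "active_agent": "alice"}
        return copy.deepcopy(self._snapshots.get(thread_id, default))

    def save(self, thread_id: str, state: dict) -> None:
        self._snapshots[thread_id] = copy.deepcopy(state)


# Long-term store: facts that outlive any single thread or message window.
long_term_store: dict[str, str] = {}


def turn(cp: ToyCheckpointer, thread_id: str, user_input: str,
         handoff_to: Optional[str] = None, window: int = 4) -> dict:
    """Load the thread's state, apply one turn, and checkpoint the result."""
    state = cp.load(thread_id)
    # Short-term memory: keep only the most recent `window` messages.
    state["messages"] = (state["messages"] + [user_input])[-window:]
    if handoff_to is not None:
        state["active_agent"] = handoff_to
    if user_input.startswith("remember:"):
        long_term_store["note"] = user_input[len("remember:"):].strip()
    cp.save(thread_id, state)
    return state


cp = ToyCheckpointer()
turn(cp, "thread-1", "I'd like to speak to Bob", handoff_to="bob")
turn(cp, "thread-1", "remember: I prefer window seats")
resumed = turn(cp, "thread-1", "book a flight")   # still routed to Bob
other = turn(cp, "thread-2", "hello")             # a fresh thread starts with Alice
```

The thread identifier is what separates conversations: the same checkpointer serves both threads, yet each resumes with its own history and active agent.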

When more granular control is needed, developers can define custom state schemas so that each agent has its own private message history. By wrapping agent calls to map the global state into agent-specific fields before invocation and merging updates afterward, teams can tailor the degree of context sharing. This approach supports workflows ranging from fully collaborative agents to isolated reasoning modules, all while leveraging LangGraph Swarm’s robust orchestration, memory, and state-management infrastructure.
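A minimal sketch of that wrapping pattern follows, assuming a dictionary-based global state with hypothetical keys (`last_user_input`, `last_reply`, and one `<name>_messages` list per agent). The helper `with_private_history` is invented for this example and is not part of the library.

```python
from typing import Callable


def with_private_history(
    name: str, agent: Callable[[list[str]], str]
) -> Callable[[dict], dict]:
    """Wrap `agent` so it reads and writes only its own message list."""
    key = f"{name}_messages"

    def node(state: dict) -> dict:
        # Map the global state onto the agent's private view.
        private = list(state.get(key, []))
        private.append(state["last_user_input"])
        reply = agent(private)  # the agent never sees other agents' histories
        private.append(reply)
        # Merge the agent's update back into the global state.
        return {**state, key: private, "last_reply": reply}

    return node


def counting_agent(history: list[str]) -> str:
    """Stand-in for an LLM call: reports how much history it can see."""
    return f"I can see {len(history)} message(s)."


alice_node = with_private_history("alice", counting_agent)
bob_node = with_private_history("bob", counting_agent)

state = {"last_user_input": "hello"}
state = alice_node(state)
state = alice_node(state)
state = bob_node(state)   # Bob starts from an empty private history
```

Bob's reply reports a single message even though Alice has already exchanged several, which shows the two histories staying isolated inside one shared state.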

Customization and Extensibility

LangGraph Swarm offers extensive flexibility for custom workflows. Developers can override the default handoff tool, which passes all messages and switches the active agent, to implement specialized logic such as summarizing context or attaching additional metadata. Custom tools simply return a LangGraph ‘Command’ to update state, and agents must be configured to handle those commands via the appropriate node types and state-schema keys. Beyond handoffs, one can redefine how agents share or isolate memory using LangGraph’s typed state schemas: mapping the global swarm state into per-agent fields before invocation and merging results afterward. This enables scenarios where an agent maintains a private conversation history or uses a different communication format without exposing its internal reasoning. For full control, it is possible to bypass the high-level API and manually assemble a ‘StateGraph’: add each compiled agent as a node, define transition edges, and attach the active-agent router. While most use cases benefit from the simplicity of ‘create_swarm’ and ‘create_react_agent’, the ability to drop down to LangGraph primitives ensures that practitioners can inspect, adjust, or extend every aspect of multi-agent coordination.
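To make the manual-assembly steps concrete (nodes, transition edges, and a router keyed on the active agent), here is a toy graph in plain Python. `ToySwarmGraph` and its methods are hypothetical and only mimic the shape of the idea; they are not LangGraph's `StateGraph` API.

```python
from typing import Callable, Optional

# A node inspects the state and returns a handoff target, or None to keep control.
Node = Callable[[dict], Optional[str]]


class ToySwarmGraph:
    """Agents as nodes, allowed handoffs as directed edges."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.edges: set[tuple[str, str]] = set()

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must be added as nodes first")
        self.edges.add((src, dst))

    def step(self, state: dict) -> dict:
        """Run the active agent and follow a handoff only along a declared edge."""
        src = state["active_agent"]
        target = self.nodes[src](state)
        if target is None:
            return state
        if (src, target) not in self.edges:
            raise ValueError(f"no edge from {src} to {target}")
        return {**state, "active_agent": target}


graph = ToySwarmGraph()
graph.add_node(
    "coordinator",
    lambda s: "billing" if "invoice" in s["messages"][-1] else None,
)
graph.add_node("billing", lambda s: None)
graph.add_edge("coordinator", "billing")

state = graph.step(
    {"messages": ["question about my invoice"], "active_agent": "coordinator"}
)
```

Declaring edges up front means an unexpected handoff fails loudly instead of silently rerouting the conversation.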

Ecosystem Integration and Dependencies

LangGraph Swarm integrates tightly with LangChain, leveraging components such as LangSmith for evaluation, langchain_openai for model access, and LangGraph for orchestration features such as persistence and caching. Its model-agnostic design lets it coordinate agents across any LLM backend (OpenAI, Hugging Face, or others), and it is available in both Python (‘pip install langgraph-swarm’) and JavaScript/TypeScript (‘@langchain/langgraph-swarm’), making it suitable for web or serverless environments. Distributed under the MIT license and under active development, it continues to benefit from community contributions and enhancements in the LangChain ecosystem.

Sample Implementation

Below is a minimal setup of a two-agent swarm:

```python
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.prebuilt import create_react_agent
from langgraph_swarm import create_handoff_tool, create_swarm

model = ChatOpenAI(model="gpt-4o")


def add(a: int, b: int) -> int:
    """Add two numbers."""
    # A named, documented function gives the tool a usable name and description.
    return a + b


# Agent "Alice": math expert
alice = create_react_agent(
    model,
    [add, create_handoff_tool(agent_name="Bob")],
    prompt="You are Alice, an addition specialist.",
    name="Alice",
)

# Agent "Bob": pirate persona who defers math to Alice
bob = create_react_agent(
    model,
    [create_handoff_tool(agent_name="Alice", description="Delegate math to Alice")],
    prompt="You are Bob, a playful pirate.",
    name="Bob",
)

# Build the swarm with Alice as the starting agent and persist state across turns
workflow = create_swarm([alice, bob], default_active_agent="Alice")
app = workflow.compile(checkpointer=InMemorySaver())
```

Here, Alice handles addition and can hand off to Bob, while Bob responds playfully but routes math questions back to Alice. The InMemorySaver ensures that conversational state persists across turns.

Use Cases and Applications

LangGraph Swarm unlocks advanced multi-agent collaboration by enabling a central coordinator to dynamically delegate sub-tasks to specialized agents, whether that is triaging emergencies by handing off to medical, security, or disaster-response experts, routing travel bookings between flight, hotel, and car-rental agents, orchestrating a pair-programming workflow between a coding agent and a reviewer, or splitting research and report-generation tasks among researcher, reporter, and fact-checker agents. Beyond these examples, the framework can power customer-support bots that route queries to departmental specialists, interactive storytelling with distinct character agents, scientific pipelines with stage-specific processors, or any scenario where dividing work among expert swarm members improves reliability and clarity. Throughout, LangGraph Swarm handles the underlying message routing, state management, and smooth transitions.

In conclusion, LangGraph Swarm marks a step toward truly modular, cooperative AI systems. Structuring multiple specialized agents into a directed graph solves tasks that a single model struggles with, with each agent handling its area of expertise and then handing off control seamlessly. This design keeps individual agents simple and interpretable, while the swarm collectively manages complex workflows involving reasoning, tool use, and decision-making. Built on LangChain and LangGraph, the library taps into a mature ecosystem of LLMs, tools, memory stores, and debugging utilities. Developers retain explicit control over agent interactions and state sharing, ensuring reliability, while still leveraging LLM flexibility to decide when to invoke tools or delegate to another agent.


Sana Hassan, a consulting intern at MarkTechPost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.