Mathematical reasoning remains one of the most complex challenges in AI. While AI has advanced in NLP and pattern recognition, its ability to solve complex mathematical problems with human-like logic and reasoning still lags behind. Many AI models struggle with structured problem solving, symbolic reasoning, and understanding the deep relationships between mathematical concepts. Closing this gap requires structured, high-quality datasets that allow AI to learn from expert mathematical reasoning and improve problem-solving accuracy.

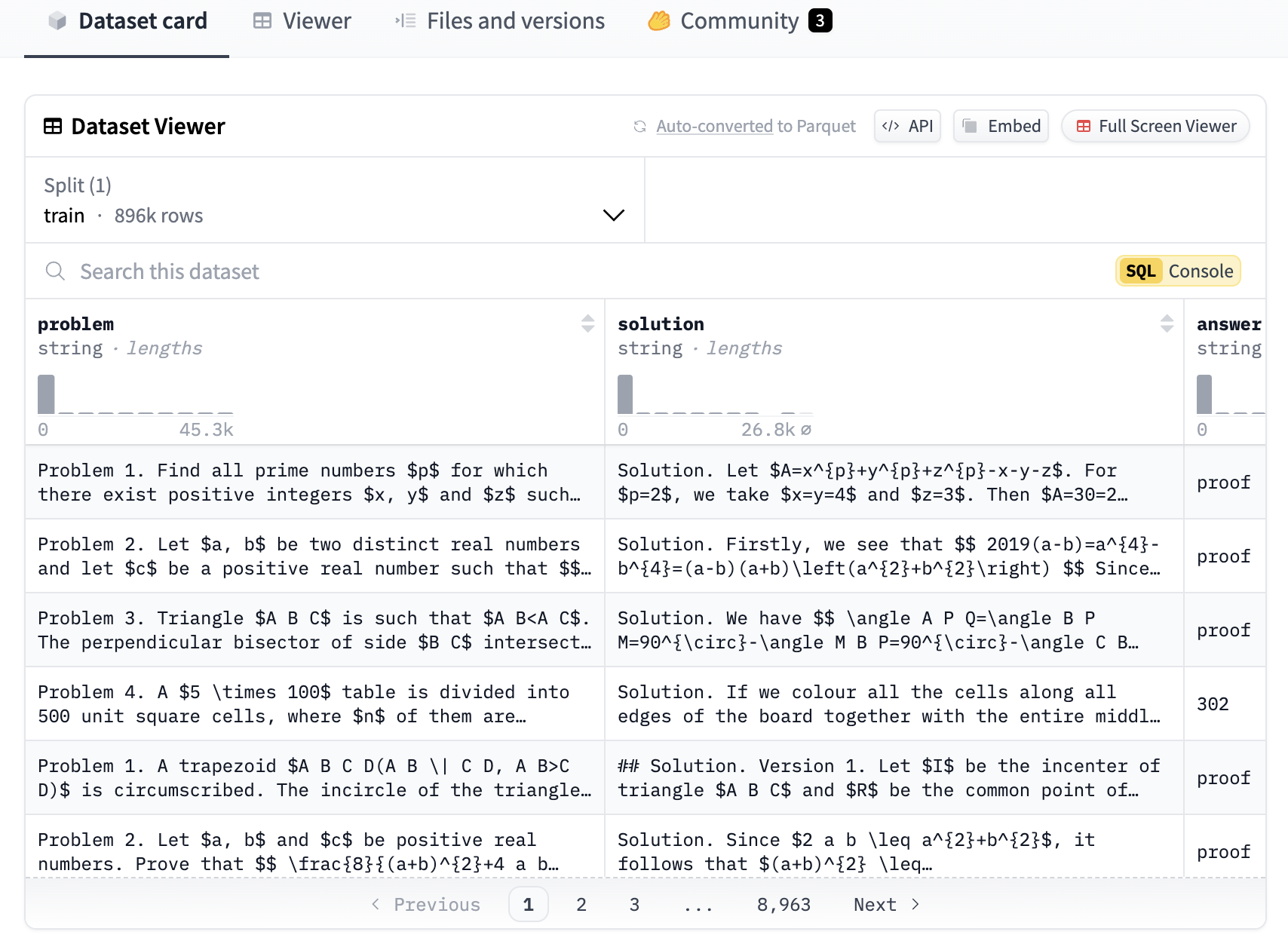

Recognizing these needs, Project-Numina has launched NuminaMath 1.5, the second version of its AI training dataset NuminaMath, tailored specifically for mathematical reasoning. NuminaMath 1.5 builds on its predecessor by offering a curated collection of approximately 900,000 competition-level mathematical problems. These problems are structured using a chain-of-thought (CoT) methodology, so that AI models follow a logical, step-by-step reasoning process to reach solutions. The dataset sources problems from Chinese high school mathematics, US mathematics competitions, and international Olympiads, providing a wide range of difficulty levels to train AI systems effectively.

The biggest innovation in NuminaMath 1.5 is its enriched problem metadata, which includes:

- Final answers for word problems.

- Mathematical domains, including algebra, geometry, number theory, and calculus.

- Problem types, categorized as multiple-choice questions (MCQs), proof-based problems, and word problems.

These improvements make NuminaMath 1.5 a more structured and verifiable resource for AI training, helping models generalize and reason better when they tackle unseen mathematical challenges.
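Per-record metadata of this kind is what makes targeted filtering possible. The minimal sketch below assumes each problem is stored with a final answer, a domain label, and a question-type label; the field names (`answer`, `problem_type`, `question_type`), the label strings, and the sample records are hypothetical and are not taken from the published schema. It selects the problems in one domain whose answers can be checked automatically, which leaves out proofs.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Problem:
    """One dataset record. Field names are hypothetical, chosen to mirror the
    metadata described in the article rather than the published schema."""
    problem: str
    solution: str
    answer: Optional[str]  # final answer; None when there is none (e.g. proofs)
    problem_type: str      # mathematical domain, e.g. "Algebra"
    question_type: str     # hypothetical labels: "MCQ", "proof", "math-word-problem"


def verifiable_subset(records, domain):
    """Keep records in `domain` that carry a final answer that can be checked automatically."""
    return [r for r in records if r.problem_type == domain and r.answer is not None]


records = [
    Problem("Solve 2x + 3 = 11.", "2x = 8, so x = 4.", "4", "Algebra", "math-word-problem"),
    Problem("Prove that sqrt(2) is irrational.",
            "Assume sqrt(2) = p/q in lowest terms; then p and q are both even.",
            None, "Number Theory", "proof"),
    Problem("Which is prime? (A) 9 (B) 15 (C) 17", "17 has no divisors other than 1 and 17.",
            "C", "Number Theory", "MCQ"),
    Problem("Find x if x^2 = 9 and x > 0.", "x = 3.", "3", "Algebra", "math-word-problem"),
]

# Tally question formats, then pull the auto-checkable algebra problems.
print(Counter(r.question_type for r in records))
print([r.answer for r in verifiable_subset(records, "Algebra")])
```

Separating the answer from the worked solution is what allows a grader to compare a model's output against a single value instead of parsing free-form text.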

To ensure the accuracy and reliability of the dataset, Project-Numina has adopted manual validation for problems taken from Olympiad datasets. The previous version of NuminaMath suffered from parsing errors caused by automated extraction techniques, which sometimes misinterpreted problem structures. In response, NuminaMath 1.5 now uses official sources from national Olympiad sites, ensuring that each problem and solution is accurately transcribed and formatted.

The latest dataset includes manually curated problems in critical mathematical areas such as:

- Chinese Math Competitions (Cn_Contest)

- Inequalities and number theory, verified by expert mathematicians

This focus on curated and verified data ensures that AI models learn from high-quality, authentic sources.

Another important improvement in NuminaMath 1.5 is the removal of synthetic datasets, such as synthetic_amc. While previous iterations included synthetic problems to expand dataset diversity, ablation studies found that synthetic data marginally hindered AI performance by introducing inconsistencies in problem structure. As a result, NuminaMath 1.5 eliminates synthetic problems, ensuring that AI models train only on competition-level mathematics rather than artificially generated content.
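Removing synthetic material comes down to filtering on each record's source tag. In the minimal sketch below, `synthetic_amc` is the only tag taken from the article; the other tags and the dict-shaped records are hypothetical stand-ins, not the dataset's actual layout.

```python
# Assumption: each record carries a "source" tag. Only "synthetic_amc" comes
# from the article; the other tags below are hypothetical placeholders.
SYNTHETIC_SOURCES = frozenset({"synthetic_amc"})


def drop_synthetic(records, synthetic_sources=SYNTHETIC_SOURCES):
    """Return the records whose source tag is not a known synthetic generator, preserving order."""
    return [r for r in records if r["source"] not in synthetic_sources]


records = [
    {"id": 1, "source": "olympiads"},
    {"id": 2, "source": "synthetic_amc"},
    {"id": 3, "source": "amc_aime"},
    {"id": 4, "source": "synthetic_amc"},
    {"id": 5, "source": "cn_contest"},
]

kept = drop_synthetic(records)
print(f"kept {len(kept)} of {len(records)} records:", [r["id"] for r in kept])
```

Keeping the blocklist as a set makes the filter a constant-time lookup per record and easy to extend if other sources are later flagged as synthetic.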

NuminaMath 1.5 draws problems from multiple sources, ensuring a diverse range of mathematical challenges. The dataset includes:

- Olympiad Problems: Verified problems from national and international math Olympiads.

- AoPS Forum Data: Retrieved from the Art of Problem Solving (AoPS) discussion forums, with a mixture of general and competition-style problems.

- AMC and AIME Problems: Questions from the American Mathematics Competitions (AMC) and American Invitational Mathematics Examination (AIME).

- Chinese K-12 Math: A large subset of problems from Chinese high school curricula that provide a strong foundation in algebra and geometry.

In conclusion, NuminaMath 1.5 delivers 896,215 verified competition-level math problems from Olympiads, national competitions, and academic forums. Structured metadata, including problem type, question format, and verified solutions, ensures precise categorization and analysis. The dataset removes synthetic problems and focuses on manually curated, high-quality data. It is an important resource for research and AI training, covering 268,000+ K-12 problems, 73,000 forum problems, and elite competition sets.
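To put these figures in proportion, the short calculation below divides the quoted subset sizes by the 896,215 total. Because the article gives "268,000+" K-12 problems and a rounded 73,000 forum problems, the resulting shares (roughly 30% and 8%) are approximate, and the remainder, which covers the competition sets and any other sources, is an upper bound rather than an exact count.

```python
# Figures quoted in the article; 268,000 is a lower bound ("268,000+")
# and 73,000 is rounded, so the derived shares are approximate.
TOTAL = 896_215
SUBSETS = {
    "Chinese K-12": 268_000,
    "forums": 73_000,
}


def shares(total, subsets):
    """Return each subset's fraction of `total`, plus the count and fraction left over."""
    fractions = {name: count / total for name, count in subsets.items()}
    remainder = total - sum(subsets.values())
    fractions["remaining sources"] = remainder / total
    return fractions, remainder


fractions, remainder = shares(TOTAL, SUBSETS)
for name, frac in fractions.items():
    print(f"{name:>17}: {frac:6.1%}")
print(f"remaining problems (upper bound): {remainder:,}")
```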



Nikhil is an intern consultant at MarkTechPost. He is pursuing an integrated dual degree in materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields such as biomaterials and biomedical science. With a strong background in materials science, he explores new advancements and seeks opportunities to contribute.
