In the field of artificial intelligence, multilingual speech recognition and translation have become important tools for facilitating global communication. However, developing models that can accurately transcribe and translate multiple languages in real time poses significant challenges. These include handling linguistic nuances, maintaining high accuracy, ensuring low latency, and deploying models efficiently across diverse devices.

To tackle these challenges, NVIDIA AI has open-sourced two models: Canary 1B Flash and Canary 180M Flash. These models are designed for multilingual speech recognition and translation and support languages such as English, German, French, and Spanish. They are released under the permissive CC-BY-4.0 license, which makes them available for commercial use and encourages innovation within the AI community.

Technically, both models use an encoder-decoder architecture. The encoders are based on FastConformer, which efficiently processes audio features, while the Transformer decoders handle text generation. Task-specific tokens, including

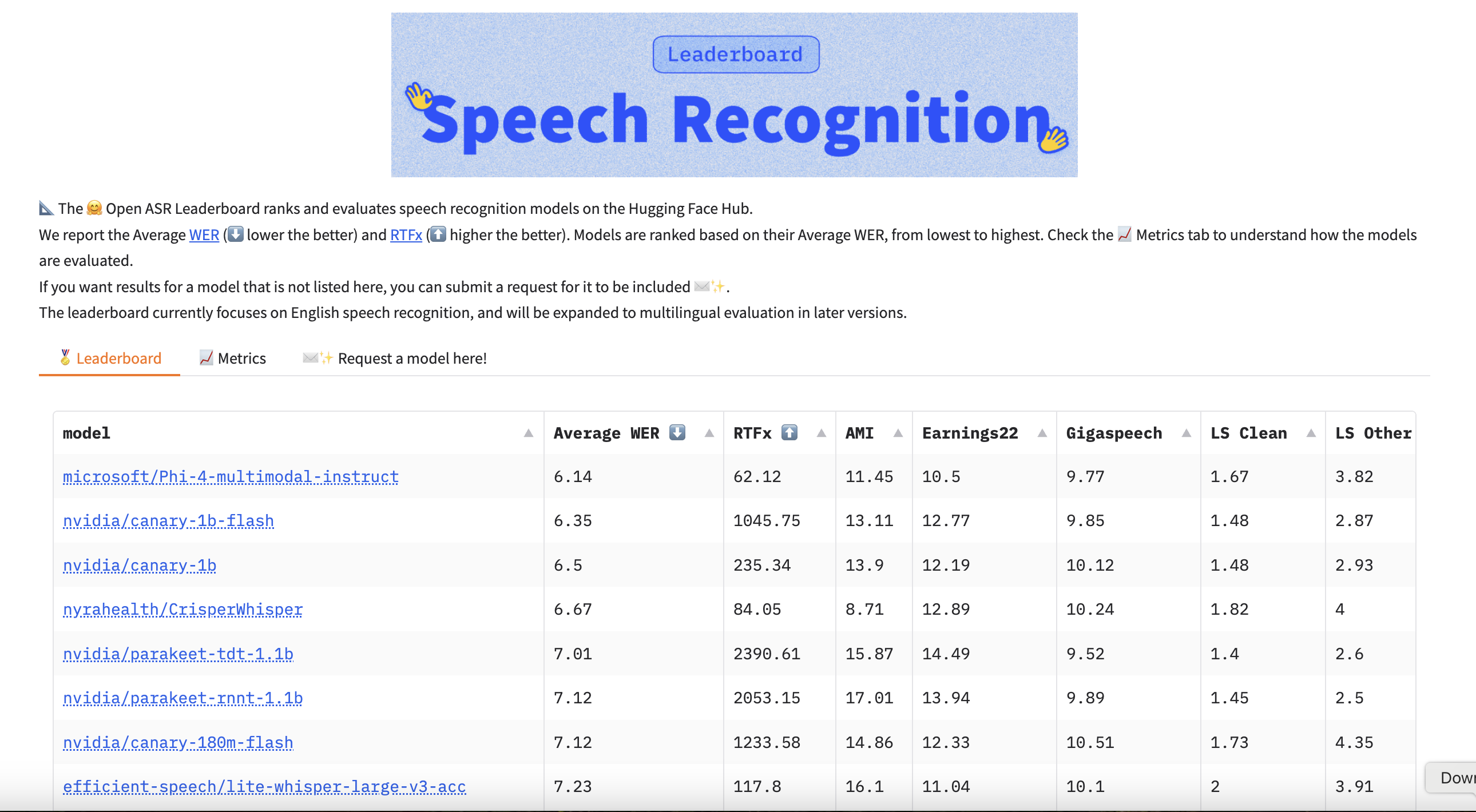
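The idea behind task-specific tokens is that a single shared decoder can switch between transcription and translation depending on the control prefix it receives. The sketch below illustrates that idea only under stated assumptions: it assumes a Whisper-style prompt of control tokens, and every token string as well as the `TaskConfig` and `build_decoder_prompt` names are hypothetical, not Canary's actual vocabulary or NeMo's API.

```python
from dataclasses import dataclass


# Hypothetical configuration; field names are illustrative assumptions.
@dataclass(frozen=True)
class TaskConfig:
    source_lang: str   # language spoken in the audio, e.g. "en"
    target_lang: str   # language of the generated text
    timestamps: bool   # whether to request timestamps
    punctuation: bool  # whether to request punctuation and capitalization


def build_decoder_prompt(cfg: TaskConfig) -> list[str]:
    """Return the control tokens placed before text generation.

    All token strings are hypothetical placeholders, not Canary's real vocabulary.
    """
    # Assumption: identical source and target languages mean transcription (ASR),
    # different languages mean translation (AST).
    task = "<|transcribe|>" if cfg.source_lang == cfg.target_lang else "<|translate|>"
    return [
        "<|startoftranscript|>",
        f"<|{cfg.source_lang}|>",
        task,
        f"<|{cfg.target_lang}|>",
        "<|timestamp|>" if cfg.timestamps else "<|notimestamp|>",
        "<|pnc|>" if cfg.punctuation else "<|nopnc|>",
    ]
```

For example, `build_decoder_prompt(TaskConfig("en", "de", False, True))` yields a prefix that asks the decoder to translate English audio into punctuated German text without timestamps; the encoder output is the same regardless of which task is requested.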

Performance metrics indicate that the Canary 1B Flash model achieves an inference speed exceeding 1000 RTFx on the Open ASR Leaderboard datasets, enabling real-time processing. In English automatic speech recognition (ASR) tasks, it achieves a word error rate (WER) of 1.48% on the LibriSpeech clean test set and 2.87% on the LibriSpeech other test set. For multilingual ASR, the model reaches WERs of 4.36% for German, 2.69% for Spanish, and 4.47% for French on the MLS test set. In automatic speech translation (AST) tasks, the model demonstrates robust performance, with BLEU scores of 32.27 for English-to-German, 22.6 for English-to-Spanish, and 41.22 for English-to-French on the FLEURS test set.
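To make the two headline metrics concrete: WER is the word-level edit distance (substitutions + deletions + insertions) divided by the number of reference words, so 1.48% means roughly 1.48 errors per 100 reference words. RTFx is the ratio of audio duration to processing time, so an RTFx above 1000 means more than 1000 seconds of audio are processed per second of compute. The minimal sketch below implements both definitions; the function names are illustrative, and real leaderboards typically normalize text (casing, punctuation) before scoring, a step this sketch skips.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Assumes a non-empty reference and no text normalization.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j]: edit distance between the reference prefix processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra hypothesis word
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


def inverse_real_time_factor(audio_seconds: float, processing_seconds: float) -> float:
    """RTFx: seconds of audio processed per second of compute."""
    return audio_seconds / processing_seconds
```

For instance, dropping one word from the six-word reference "the cat sat on the mat" gives a WER of 1/6 (about 16.7%), and transcribing one hour of audio (3600 s) in 3 seconds corresponds to an RTFx of 1200.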

The smaller Canary 180M Flash model also delivers impressive results, with an inference speed surpassing 1200 RTFx. For English ASR, it achieves a WER of 1.87% on the LibriSpeech clean test set and 3.83% on the LibriSpeech other test set. For multilingual ASR, the model records WERs of 4.81% for German, 3.17% for Spanish, and 4.75% for French on the MLS test set. In AST tasks, it achieves BLEU scores of 28.18 for English-to-German, 20.47 for English-to-Spanish, and 36.66 for English-to-French on the FLEURS test set.
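To quantify the accuracy trade-off between the two models, the short script below recomputes it using only the figures quoted in this article (no new measurements). It reports the absolute WER gap in percentage points, the relative WER increase, and the BLEU drop for each translation pair. The dictionary keys and helper names are illustrative.

```python
# Figures reported in this article: WER in %, BLEU on the FLEURS test set.
WER_1B = {
    "LibriSpeech clean": 1.48, "LibriSpeech other": 2.87,
    "MLS German": 4.36, "MLS Spanish": 2.69, "MLS French": 4.47,
}
WER_180M = {
    "LibriSpeech clean": 1.87, "LibriSpeech other": 3.83,
    "MLS German": 4.81, "MLS Spanish": 3.17, "MLS French": 4.75,
}
BLEU_1B = {"en->de": 32.27, "en->es": 22.6, "en->fr": 41.22}
BLEU_180M = {"en->de": 28.18, "en->es": 20.47, "en->fr": 36.66}


def relative_increase(base: float, other: float) -> float:
    """Percentage by which `other` exceeds `base`."""
    return (other - base) / base * 100


def compare_wer() -> list[tuple[str, float, float]]:
    """(benchmark, absolute WER gap in points, relative WER increase in %)."""
    return [
        (name, WER_180M[name] - WER_1B[name], relative_increase(WER_1B[name], WER_180M[name]))
        for name in WER_1B
    ]


def bleu_drop() -> dict[str, float]:
    """BLEU points lost by the 180M model on each translation pair."""
    return {pair: BLEU_1B[pair] - BLEU_180M[pair] for pair in BLEU_1B}


if __name__ == "__main__":
    for name, gap, rel in compare_wer():
        print(f"{name}: +{gap:.2f} WER points ({rel:.1f}% relative)")
    for pair, drop in bleu_drop().items():
        print(f"{pair}: -{drop:.2f} BLEU")
```

On these numbers, the smaller model's relative WER increase ranges from about 6% (MLS French) to about 33% (LibriSpeech other), which is the price of its much smaller size and its higher reported RTFx.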

Both models support word-level and segment-level timestamps, which improves their utility in applications that require precise alignment between audio and text. Their compact sizes make them suitable for on-device deployment, enabling offline processing and reducing dependence on cloud services. In addition, their robustness leads to fewer hallucinations in translation tasks, ensuring more reliable output. The open-source release under the CC-BY-4.0 license encourages commercial use and further development by the community.
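The relationship between the two timestamp granularities can be illustrated with a small post-processing step. The models produce timestamps themselves; the sketch below is purely hypothetical and assumes word timestamps arrive as `(word, start, end)` records. It merges consecutive words into segments whenever the pause between them stays below a threshold. `WordStamp`, `group_into_segments`, and the 0.5-second default are illustrative assumptions, not the models' output format.

```python
from dataclasses import dataclass


@dataclass
class WordStamp:
    word: str
    start: float  # seconds from the start of the audio
    end: float    # seconds from the start of the audio


def group_into_segments(
    words: list[WordStamp], max_pause: float = 0.5
) -> list[tuple[str, float, float]]:
    """Merge consecutive words into (text, start, end) segments.

    A new segment starts whenever the silence between two words exceeds max_pause.
    """
    segments: list[tuple[str, float, float]] = []
    current: list[WordStamp] = []
    for w in words:
        # Close the running segment if the gap to the previous word is too long.
        if current and w.start - current[-1].end > max_pause:
            segments.append((" ".join(x.word for x in current), current[0].start, current[-1].end))
            current = []
        current.append(w)
    if current:
        segments.append((" ".join(x.word for x in current), current[0].start, current[-1].end))
    return segments
```

With words "hello" (0.0 to 0.4 s), "world" (0.45 to 0.9 s), and "again" (2.0 to 2.4 s), the 1.1-second pause splits the output into two segments: "hello world" spanning 0.0 to 0.9 s and "again" spanning 2.0 to 2.4 s.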

In conclusion, NVIDIA's open-sourcing of the Canary 1B Flash and Canary 180M Flash models represents a significant advance in multilingual speech recognition and translation. Their high accuracy, real-time processing capabilities, and suitability for on-device deployment address many existing challenges in the field. By making these models publicly available, NVIDIA not only demonstrates its commitment to advancing AI research but also enables developers and organizations to build more inclusive and efficient communication tools.


Asif Razzaq is the CEO of Marktechpost Media Inc. His latest endeavor is the launch of an artificial intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among readers.