One of the hardest parts of our three-light format is deciding what not to include, and a lot of really useful advice ends up collecting dust in my Google Drive.

They offered that? What really offended you about the offer?

By Jeff Altman, Big Game Hunter. Has a job offer ever left you feeling offended, i.e., because the salary was too low or something else like that? How can I see another person’s LinkedIn profile without them knowing? Has a job offer ever left you … Read more

Strategy & Frameworks for Demonstrating Value

Creating a consistent brand image can increase your company’s revenue by up to 23%. Just by outlining a clear and consistent brand positioning strategy, your business can attract more clients, make more money and optimize all its marketing efforts. Developing a brand positioning strategy can only come after you run market segmentation and outline your … Read more

‘Cancer sucks in any form’

British singer Jessie J has revealed that she was recently diagnosed with “early breast cancer.” The musician (born Jessica Cornish) shared the health update on social media on Tuesday (June 3) and told her supporters that the diagnosis came shortly before the release of her recent single “No Secrets” in April. “I highlight the … Read more

NVIDIA AI releases Llama Nemotron Nano VL: A compact vision language model optimized for document understanding

NVIDIA has introduced Llama Nemotron Nano VL, a vision language model (VLM) designed to address document-level understanding tasks with efficiency and precision. This release is built on the Llama 3.1 architecture combined with a lightweight vision encoder, and targets applications that require accurate parsing of complex document structures, such as scanned forms, financial reports … Read more

How I applied the 95-5 Rule to build Gong’s brand from the bottom up

When I stepped into my role as head of content at Gong, I did not come with a decade of marketing experience. I came with a sales background and a whole lot of time spent chasing leads. This experience turned out to be my unfair advantage.

Don’t forget these groups for networking

By Jeff Altman, Big Game Hunter. In this video, Jeff Altman, Big Game Hunter, discusses a number of groups that you need to join besides those on LinkedIn. Why do more companies trust video interviews? Hi, I’m Jeff Altman, Big Game Hunter. Yesterday I interviewed someone for Job Search Radio who reminded me … Read more

What is keyword bidding? A beginner’s step-by-step guide

What is keyword bidding? Keyword bidding is the process of setting how much you are willing to pay to achieve a specific goal with your search ads. This goal could be related to driving visibility, conversions or clicks. Let’s say you are promoting your yoga mats and want to drive traffic to a landing page on your … Read more

Marcie Jones, lead singer of Marcie & The Cookies, dies at 79

Australian music trailblazer Marcie Jones has died at the age of 79, just days after publicly revealing a leukemia diagnosis. The beloved vocalist first rose to fame in the late 1960s as the powerhouse singer in Marcie & The Cookies, an all-female vocal group that helped break ground in Australia’s male-dominated music scene. After … Read more

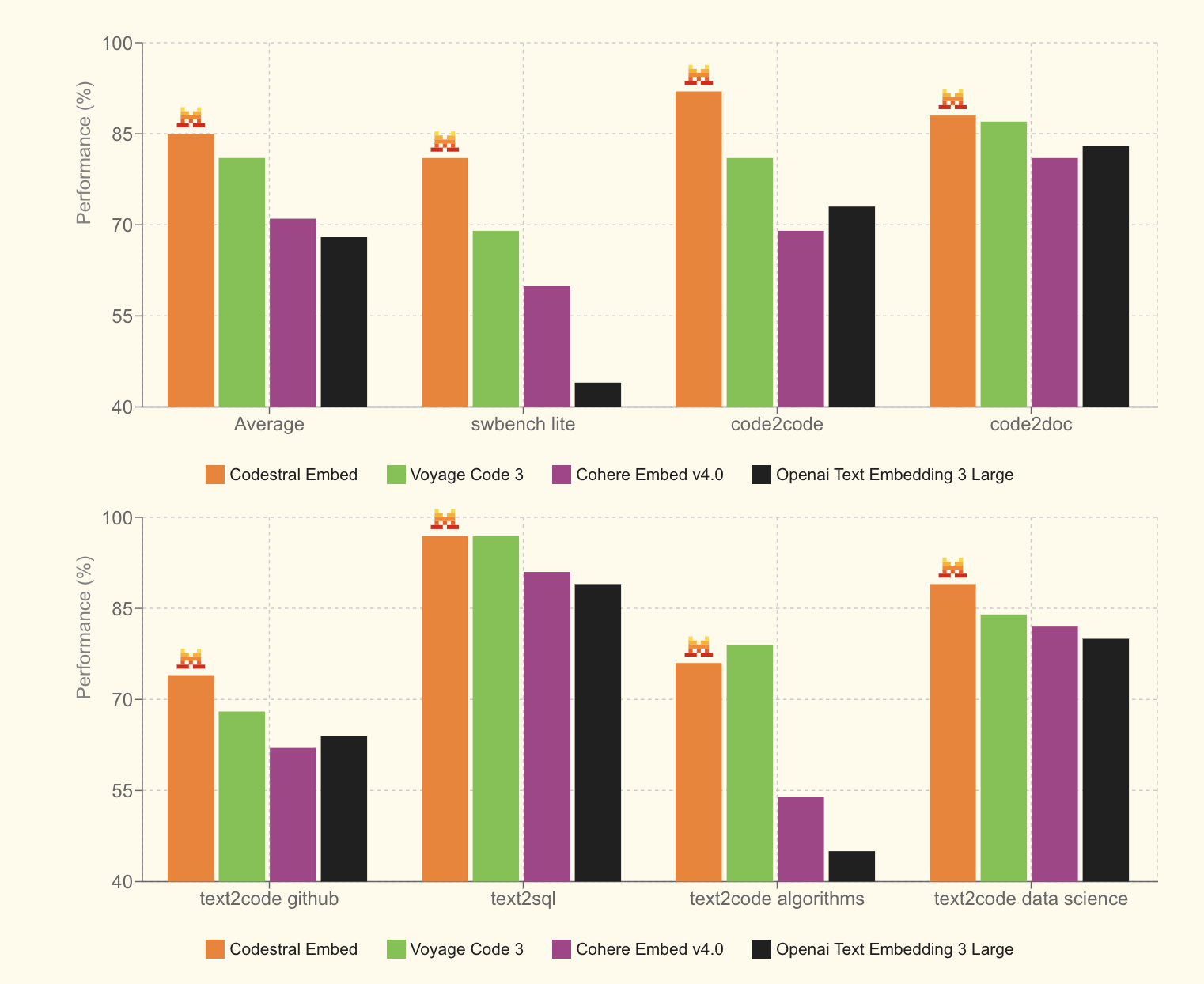

Mistral AI introduces Codestral Embed: A high-performance code embedding model for scalable retrieval and semantic understanding

Modern software engineering faces growing challenges in accurately retrieving and understanding code across different programming languages and large codebases. Existing embedding models often struggle to capture the deep semantics of code, resulting in poor results in tasks such as code search, RAG and semantic analysis. These limitations hinder the ability of developers to effectively … Read more