Deep learning faces difficulties when applied to large physical systems on irregular grids, especially when interactions occur over long distances or across several scales. Handling these complexities becomes harder as the number of nodes increases. Many techniques struggle with these problems, resulting in high computational costs and inefficiency. The main challenges are capturing long-range effects, handling multi-scale dependencies, and computing efficiently with minimal resource consumption. These issues make it difficult to apply deep learning models effectively in fields such as molecular simulation, weather prediction, and particle mechanics, where large datasets and complex interactions are common.

Currently, deep learning methods struggle to scale attention mechanisms to large physical systems. Traditional self-attention computes interactions between all pairs of points, leading to extremely high computational costs. Some methods apply attention to small patches, as the Swin Transformer does for images, but irregular data needs extra steps to impose structure. Techniques like Point Transformer use space-filling curves, but this can break spatial relationships. Hierarchical methods, such as H-Transformer and OctFormer, group data at different levels but rely on expensive operations. Cluster attention methods reduce complexity by aggregating points, but this process loses fine details and struggles with multi-scale interactions.
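To make the cost gap concrete, here is a minimal sketch that counts the query-key pairs scored by all-pairs attention versus attention restricted to fixed-size groups. The function names and the assumption that the point count divides evenly into groups are illustrative choices, not taken from any of the methods named above.

```python
def full_attention_pairs(n_points: int) -> int:
    """Number of query-key pairs when every point attends to every point."""
    return n_points * n_points


def grouped_attention_pairs(n_points: int, group_size: int) -> int:
    """Number of query-key pairs when attention stays inside fixed-size groups.

    Assumes (for illustration) that n_points is a multiple of group_size.
    """
    if n_points % group_size != 0:
        raise ValueError("n_points must be a multiple of group_size")
    n_groups = n_points // group_size
    return n_groups * group_size * group_size


n_points, group_size = 4096, 256
full = full_attention_pairs(n_points)
grouped = grouped_attention_pairs(n_points, group_size)
print(f"all-pairs: {full}, grouped: {grouped}, ratio: {full // grouped}")
```

With the group size held fixed, the grouped count grows linearly in the number of points, while the all-pairs count grows quadratically.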

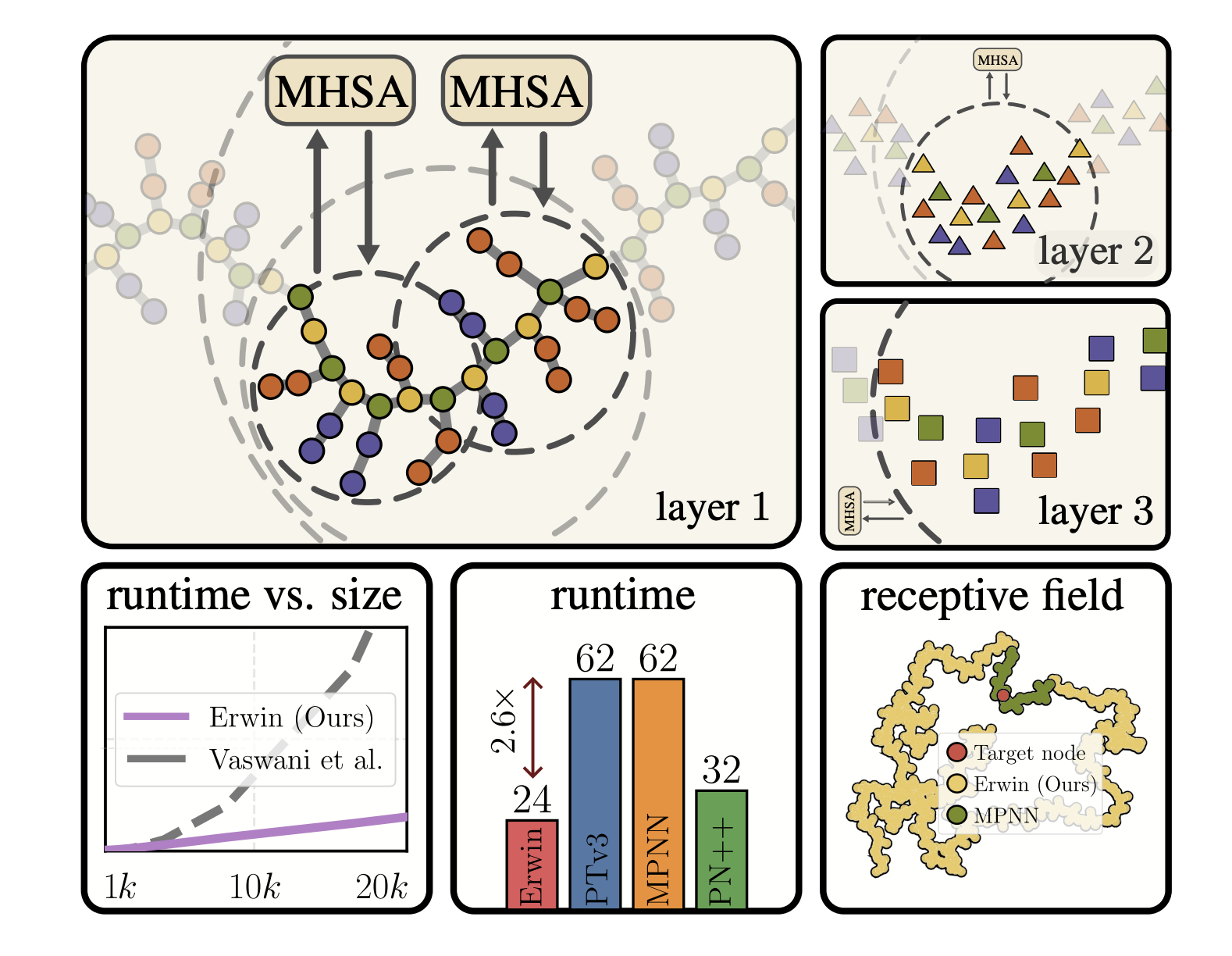

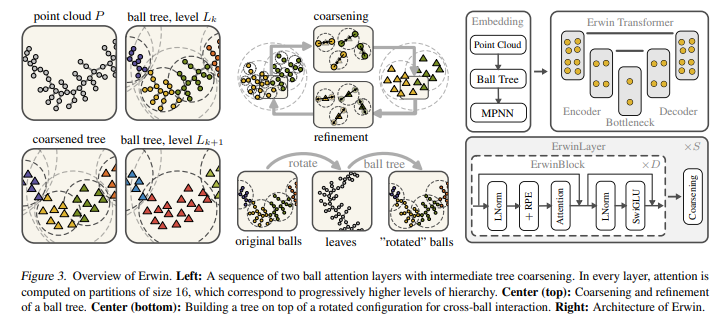

To tackle these problems, researchers from AMLab, University of Amsterdam and CuspAI introduced Erwin, a hierarchical transformer that improves data processing efficiency through ball tree partitioning. The attention mechanism allows parallel computation across clusters by using ball tree partitions, which divide the data hierarchically, to structure its computations. This approach reduces computational complexity without sacrificing accuracy, bridging the gap between the efficiency of tree-based methods and the generality of attention mechanisms. Erwin uses self-attention in localized regions, with positional encoding and a distance-based attention bias to capture geometric structure. Cross-ball connections facilitate communication between different sections, while tree coarsening and refinement mechanisms balance global and local interactions. This organized process provides scalability and expressiveness at minimal computational cost.
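The idea of attending only within ball tree leaves, with a bias that penalizes distant pairs, can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not Erwin's implementation: the partition is a recursive median split along the widest axis, the attention uses the raw features as queries, keys, and values with no learned projections, and the names `ball_partition`, `ball_attention`, and the fixed bias strength `sigma` are hypothetical.

```python
import numpy as np


def ball_partition(points: np.ndarray, ball_size: int) -> list:
    """Recursively halve the point set until each leaf holds <= ball_size points.

    Simplification: each split is a median cut along the axis of largest extent.
    """
    def split(idx):
        if len(idx) <= ball_size:
            return [idx]
        coords = points[idx]
        axis = int(np.argmax(coords.max(axis=0) - coords.min(axis=0)))
        order = idx[np.argsort(coords[:, axis], kind="stable")]
        half = len(order) // 2
        return split(order[:half]) + split(order[half:])

    return split(np.arange(len(points)))


def ball_attention(features, points, balls, sigma=1.0):
    """Softmax attention inside each leaf, with a distance-based bias.

    Assumption: the bias is -sigma * Euclidean distance between positions.
    """
    out = np.empty_like(features)
    scale = 1.0 / np.sqrt(features.shape[1])
    for idx in balls:
        x, p = features[idx], points[idx]
        dist = np.linalg.norm(p[:, None, :] - p[None, :, :], axis=-1)
        logits = scale * (x @ x.T) - sigma * dist
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        weights = np.exp(logits)
        weights /= weights.sum(axis=1, keepdims=True)
        out[idx] = weights @ x
    return out


rng = np.random.default_rng(0)
points = rng.normal(size=(64, 3))     # 64 points in 3D
features = rng.normal(size=(64, 16))  # 16 features per point
balls = ball_partition(points, ball_size=8)
out = ball_attention(features, points, balls)
print(len(balls), out.shape)
```

Because each leaf is processed independently, the loop over leaves could be batched and run in parallel. Information only moves between leaves once something like the cross-ball connections and coarsening steps described above is added.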

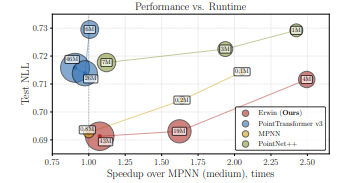

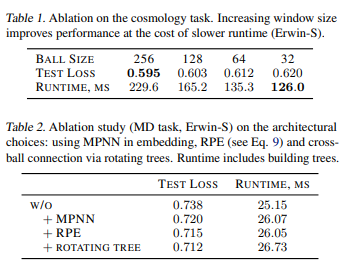

The researchers conducted experiments to evaluate Erwin. In cosmological simulations, it surpassed equivariant and non-equivariant baselines, capturing long-range interactions and improving with larger training datasets. For molecular dynamics, it accelerated simulations by 1.7 to 2.5 times without compromising accuracy, surpassing MPNN and PointNet++ in runtime while maintaining competitive test loss. In turbulent fluid dynamics, Erwin outperformed MeshGraphNet, GAT, DilResNet, and EAGLE, excelling at pressure prediction while being three times faster and using eight times less memory than EAGLE. Larger ball sizes in cosmology improved performance by preserving long-range dependencies but increased runtime, and applying MPNN at the embedding stage improved local interactions in molecular dynamics.

The hierarchical transformer design proposed here effectively handles large-scale physical systems with ball tree partitioning and achieves state-of-the-art results in cosmology and molecular dynamics. Although its optimized structure balances expressiveness against runtime, it incurs computational overhead from padding and has high memory requirements. Future work can explore learnable pooling and other geometric encoding strategies to improve efficiency. Erwin’s performance and scalability across domains make it a reference point for developments in modeling large particle systems, computational chemistry, and molecular dynamics.

Check out the paper and the GitHub page. All credit for this research goes to the researchers of this project.


Divyesh is a consulting intern at MarkTechpost. He is pursuing a BTech in agricultural and food technology from the Indian Institute of Technology, Kharagpur. He is a data science and machine learning enthusiast who wants to integrate these leading technologies into the agricultural domain and solve challenges.
