The effectiveness of language models depends on their ability to simulate human-like step-by-step deductions. However, these reasoning sequences are resource -intensive and can be wasting for simple questions that do not require a detailed calculation. This lack of awareness of the complexity of the task is one of the most important challenges in these models. They are often standard for detailed reasoning, even for queries that could be answered directly. Such an approach increases the token use, expands the response time and increases the system’s latency and memory consumption. As a result, there is an urgent need to equip language models with a mechanism that allows them to make autonomous decisions about whether they should think deeply or respond briefly.

Current tools that try to solve this problem are either dependent on manually setting heuristics or quick technique to switch between short and long answers. Some methods use separate models and route questions based on complexity estimates. Still, these external routing systems often lack insight into the target model’s strengths and fail to make optimal decisions. Other techniques are fine -tuning models with quick signs such as “rationale to/from”, but these are dependent on static rules rather than dynamic understanding. Despite some improvements, these approaches fail to enable fully autonomous and context -sensitive control within a single model.

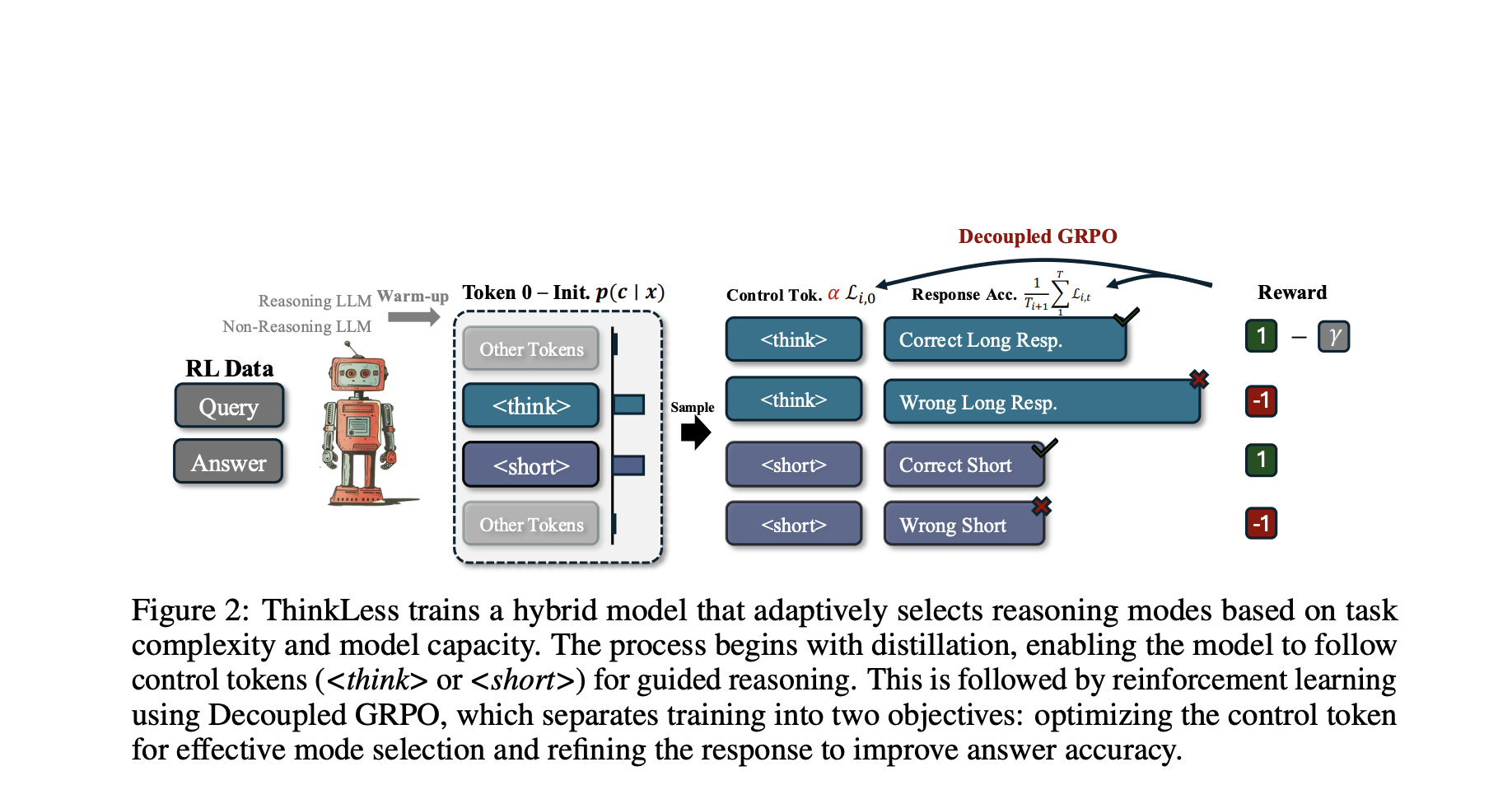

Researchers from the National University of Singapore introduced a new framework called Thinkless, which is equipping a language model with the ability to dynamically decide between using cards or long -shaped reasoning. The frame is built on reinforcement learning and introduces two special control tookenses –

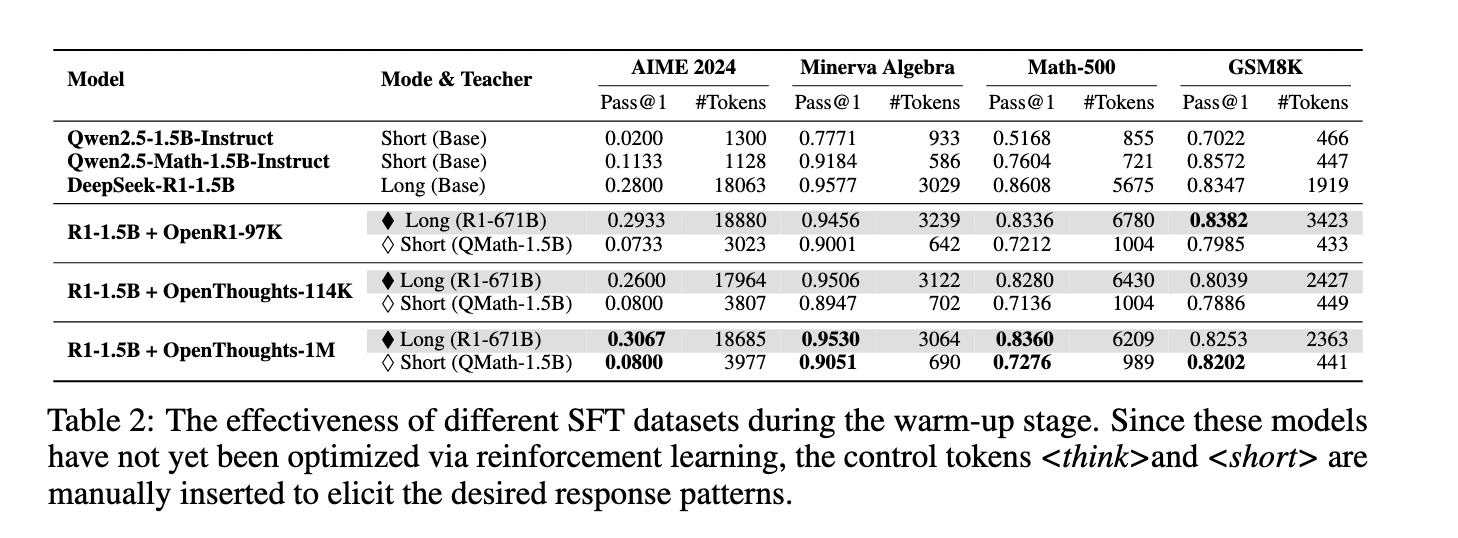

The methodology involves two phases: heating distillation and reinforcement learning. During the distillation phase, Thinkelless is trained using output from two expert models – one specializing in short answers and the other in detailed reasoning. This step helps the model to establish a fixed connection between the control token and the desired reasoning format. The reinforcement learning stage then fine -tunes the model’s ability to decide which reasoning state to use. DEGRPO breaks down the learning to two separate goals: one for training the control token and another to refine the response machines. This approach avoids gradient imbalances in previous models, where longer reactions would overman the learning signal, leading to a breakdown in the diversity of reasoning. Thinking ensures that both

When evaluated, thinking -free significantly long -shaped reasoning, while preserving high accuracy. On Minerva Algebra Benchmark used the model

Overall, this study by the National University of Singapore researchers presents a compelling solution to the inefficiency of uniform reasoning in large language models. By introducing a mechanism that allows models to judge task complexity and adjust their inference strategy accordingly, thinking optimizes both accuracy and efficiency. The method balances the depth of reasoning and response precision without relying on fixed rules and offers a data-driven approach to more intelligent language model behavior.

Check the page paper and github. All credit for this research goes to the researchers in this project. You are also welcome to follow us on Twitter And don’t forget to join our 95k+ ml subbreddit and subscribe to Our newsletter.

Nikhil is an internal consultant at MarkTechpost. He is pursuing an integrated double degree in materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who always examines applications in fields such as biomaterials and biomedical science. With a strong background in material science, he explores new progress and creates opportunities to contribute.