Running large language models (LLMs) poses significant challenges because of their hardware requirements, but there are several ways to make these powerful tools accessible. Today’s landscape offers a range of approaches, from consuming models through APIs provided by major players such as OpenAI and Anthropic to deploying open-source alternatives via platforms such as Hugging Face and Ollama. Whether you are interfacing with remote models or running them locally, understanding key techniques such as prompt engineering and output structuring can significantly improve performance for your specific applications. This article explores the practical aspects of implementing LLMs, giving developers the knowledge to navigate hardware limitations, choose appropriate deployment methods, and optimize model outputs through proven techniques.

1. Using LLM APIs: A quick introduction

LLM APIs offer a straightforward way to access powerful language models without managing infrastructure. These services handle the heavy computational requirements, allowing developers to focus on implementation. In this tutorial, we walk through implementing these LLMs with examples, showing their potential at a high level in a direct, product-oriented way. To keep the tutorial brief, we have limited the implementation part to closed-source models and added an overview of high-level open-source models at the end.

2. Implementing closed-source LLMs: API-based solutions

Closed-source LLMs offer powerful capabilities through straightforward API interfaces, requiring minimal infrastructure while delivering advanced performance. These models, maintained by companies such as OpenAI, Anthropic and Google, give developers ready-made intelligence accessible through simple API calls.

2.1 Let’s explore how to use one of the most accessible closed-source APIs: Anthropic’s API.

```python
# First, install the Anthropic Python library (the "!" prefix runs a shell command in Colab/Jupyter)
!pip install anthropic

import os

import anthropic

client = anthropic.Anthropic(
    api_key=os.environ.get("ANTHROPIC_API_KEY"),  # Store your API key in the ANTHROPIC_API_KEY environment variable
)
```

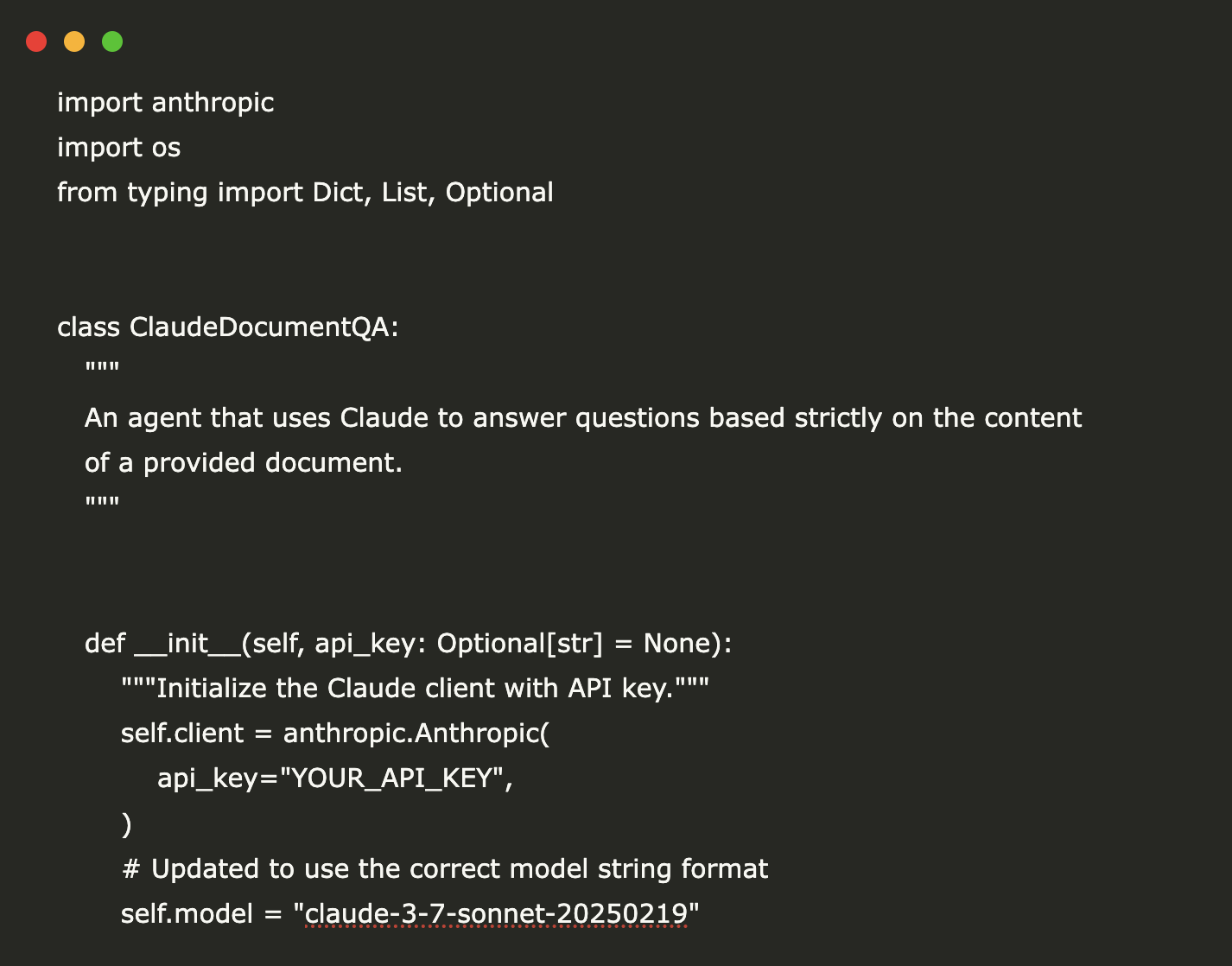
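
Before building a full application, it can help to see a single request in isolation. The following is a minimal sketch rather than part of the original tutorial: the helper name `ask_claude` is hypothetical, and it takes the client as a parameter so the same function works with the `client` created above or with a stand-in object during testing. Instead of assuming the first content block is text, it joins every block whose `type` is `"text"`.

```python
from typing import Any


def ask_claude(client: Any, prompt: str,
               model: str = "claude-3-7-sonnet-20250219",
               max_tokens: int = 500) -> str:
    """Send one user message and return the concatenated text of the reply.

    `client` is expected to expose `messages.create(...)` the way
    `anthropic.Anthropic()` does; `ask_claude` itself is a hypothetical helper.
    """
    response = client.messages.create(
        model=model,
        max_tokens=max_tokens,
        messages=[{"role": "user", "content": prompt}],
    )
    # A reply can contain several content blocks; keep only the text blocks.
    parts = [block.text for block in response.content
             if getattr(block, "type", None) == "text"]
    return "".join(parts)
```

With a real key configured, `print(ask_claude(client, "Explain what an LLM API is in one sentence."))` would send one request and print the answer.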

2.1.1 Application: In-context question-answering bot for user guides

```python
import os
from typing import Dict, List, Optional

import anthropic


class ClaudeDocumentQA:
    """
    An agent that uses Claude to answer questions based strictly on the content
    of a provided document.
    """

    def __init__(self, api_key: Optional[str] = None):
        """Initialize the Claude client, falling back to the ANTHROPIC_API_KEY environment variable."""
        self.client = anthropic.Anthropic(
            api_key=api_key or os.environ.get("ANTHROPIC_API_KEY"),
        )
        # Claude 3.7 Sonnet model identifier
        self.model = "claude-3-7-sonnet-20250219"

    def process_question(self, document: str, question: str) -> str:
        """
        Process a user question based on document context.

        Args:
            document: The text document to use as context
            question: The user's question about the document

        Returns:
            Claude's response answering the question based on the document
        """
        # System prompt that instructs Claude to use only the provided document
        system_prompt = """
        You are a helpful assistant that answers questions based ONLY on the information
        provided in the DOCUMENT below. If the answer cannot be found in the document,
        say "I cannot find information about this in the provided document."
        Do not use any prior knowledge outside of what's explicitly stated in the document.
        """

        # Construct the user message with document and question
        user_message = f"""
        DOCUMENT:
        {document}

        QUESTION:
        {question}

        Answer the question using only information from the DOCUMENT above. If the information
        isn't in the document, say so clearly.
        """

        try:
            # Send request to Claude
            response = self.client.messages.create(
                model=self.model,
                max_tokens=1000,
                temperature=0.0,  # Low temperature for factual responses
                system=system_prompt,
                messages=[
                    {"role": "user", "content": user_message}
                ],
            )
            return response.content[0].text
        except Exception as e:
            # Return the error text instead of raising, so a batch run can continue
            return f"Error processing request: {e}"

    def batch_process(self, document: str, questions: List[str]) -> Dict[str, str]:
        """
        Process multiple questions about the same document.

        Args:
            document: The text document to use as context
            questions: List of questions to answer

        Returns:
            Dictionary mapping questions to answers
        """
        results = {}
        for question in questions:
            # Store each answer under its question
            results[question] = self.process_question(document, question)
        return results


### Test Code
if __name__ == "__main__":
    # Sample document (an instruction manual excerpt)
    sample_document = """
    QUICKSTART GUIDE: MODEL X3000 COFFEE MAKER

    SETUP INSTRUCTIONS:
    1. Unpack the coffee maker and remove all packaging materials.
    2. Rinse the water reservoir and fill with fresh, cold water up to the MAX line.
    3. Insert the gold-tone filter into the filter basket.
    4. Add ground coffee (1 tbsp per cup recommended).
    5. Close the lid and ensure the carafe is properly positioned on the warming plate.
    6. Plug in the coffee maker and press the POWER button.
    7. Press the BREW button to start brewing.

    FEATURES:
    - Programmable timer: Set up to 24 hours in advance
    - Strength control: Choose between Regular, Strong, and Bold
    - Auto-shutoff: Machine turns off automatically after 2 hours
    - Pause and serve: Remove carafe during brewing for up to 30 seconds

    CLEANING:
    - Daily: Rinse removable parts with warm water
    - Weekly: Clean carafe and filter basket with mild detergent
    - Monthly: Run a descaling cycle using white vinegar solution (1:2 vinegar to water)

    TROUBLESHOOTING:
    - Coffee not brewing: Check water reservoir and power connection
    - Weak coffee: Use STRONG setting or add more coffee grounds
    - Overflow: Ensure filter is properly seated and use correct amount of coffee
    - Error E01: Contact customer service for heating element replacement
    """

    # Sample questions (E02 is deliberately absent from the document)
    sample_questions = [
        "How much coffee should I use per cup?",
        "How do I clean the coffee maker?",
        "What does error code E02 mean?",
        "What is the auto-shutoff time?",
        "How long can I remove the carafe during brewing?",
    ]

    # Create and use the agent
    agent = ClaudeDocumentQA()

    # Process a single question
    print("=== Single Question ===")
    answer = agent.process_question(sample_document, sample_questions[0])
    print(f"Q: {sample_questions[0]}")
    print(f"A: {answer}\n")

    # Process multiple questions
    print("=== Batch Processing ===")
    results = agent.batch_process(sample_document, sample_questions)
    for question, answer in results.items():
        print(f"Q: {question}")
        print(f"A: {answer}\n")
```

Output from the model

Claude Document Q&A: A specialized LLM application

This Claude document Q&A agent demonstrates a practical use of LLM APIs for context-aware question answering. The application uses Anthropic’s Claude API to build a system that strictly grounds its answers in the provided document content, an important capability for many business use cases.

The agent works by wrapping Claude’s powerful language capabilities in a specialized framework that:

- Takes a reference document and a user question as input

- Structures the prompt to separate the document context from the query

- Uses system instructions to restrict Claude to information present in the document

- Provides explicit handling for information not found in the document

- Supports both single-question and batch processing
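
The prompt structure described above can also be expressed with XML-style tags, which Anthropic’s prompt-engineering guidance suggests for separating context from instructions. The sketch below is an alternative to the `DOCUMENT:` / `QUESTION:` headings used in `ClaudeDocumentQA`, not what the tutorial’s code does; the names `build_grounded_prompt` and `is_not_found` and the tag names are illustrative choices. The not-found sentence is the one from the tutorial’s system prompt, so the same constant drives both the instruction and the check.

```python
# The refusal sentence used in the tutorial's system prompt.
NOT_FOUND = "I cannot find information about this in the provided document."


def build_grounded_prompt(document: str, question: str) -> str:
    """Wrap the document and question in XML-style tags (hypothetical helper)."""
    return (
        "<document>\n"
        f"{document.strip()}\n"
        "</document>\n\n"
        "<question>\n"
        f"{question.strip()}\n"
        "</question>\n\n"
        "Answer using only the content inside <document>. "
        f'If the answer is not there, reply exactly: "{NOT_FOUND}"'
    )


def is_not_found(answer: str) -> bool:
    """True if the model returned the agreed-upon not-found sentence."""
    return NOT_FOUND.lower() in answer.strip().lower()
```

The returned string can be passed as the `content` of the user message, and `is_not_found` lets calling code branch on missing information without parsing the answer further. Note that a document containing a literal `</document>` would blur the boundary; production code may want to check for that.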

This approach is especially valuable for scenarios that require high-fidelity responses tied to specific content, such as customer support automation, legal document analysis, technical documentation retrieval, or educational applications. The implementation shows how careful prompt engineering and system design can turn a general-purpose LLM into a specialized tool for domain-specific applications.

By combining straightforward API integration with thoughtful constraints on the model’s behavior, this example shows how developers can build reliable, context-aware AI applications without expensive fine-tuning or complex infrastructure.
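
Structuring model outputs, mentioned in the introduction, is one way to make such constraints easier to enforce in code. The following sketch is an assumption rather than part of the tutorial: it appends an instruction asking for a JSON object such as `{"found": true, "answer": "..."}` and validates the reply, so downstream logic branches on a boolean instead of matching a sentence. The schema, `JSON_INSTRUCTION`, and `parse_qa_reply` are hypothetical; the parser tolerates a Markdown code fence around the JSON because models sometimes add one.

```python
import json
import re
from typing import Optional, TypedDict

# Hypothetical instruction to append to the system prompt.
JSON_INSTRUCTION = (
    "Reply with only a JSON object of the form "
    '{"found": <true|false>, "answer": "<text>"}. '
    'Set "found" to false if the document does not contain the answer.'
)


class QAReply(TypedDict):
    found: bool
    answer: str


# Matches an optional ```json ... ``` fence around the payload.
_FENCE = re.compile(r"^```(?:json)?\s*(.*?)\s*```$", re.DOTALL)


def parse_qa_reply(raw: str) -> Optional[QAReply]:
    """Parse and validate a JSON reply; return None if it does not match the schema."""
    text = raw.strip()
    match = _FENCE.match(text)
    if match:
        text = match.group(1)
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    found, answer = data.get("found"), data.get("answer")
    if not isinstance(found, bool) or not isinstance(answer, str):
        return None
    return {"found": found, "answer": answer}
```

Returning `None` on any mismatch keeps the failure mode explicit: the caller can retry the request or fall back to showing the raw text.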

Note: This is only a basic implementation of document question answering; we have not delved into the real complexities of domain-specific use cases.

3. Implementing open-source LLMs: Local deployment and adaptability

Open-source LLMs offer flexible and customizable alternatives to closed-source options, allowing developers to deploy models on their own infrastructure with full control over implementation details. These models, from organizations such as Meta (Llama), Mistral AI and various research institutions, balance performance and accessibility across a range of deployment scenarios.

Open-source LLM deployments are characterized by:

- Local deployment: Models can run on personal hardware or self-managed cloud infrastructure

- Customization options: Ability to fine-tune, quantize, or modify models for specific needs

- Resource scaling: Performance can be adjusted based on available compute resources

- Privacy preservation: Data remains within controlled environments without external API calls

- Cost structure: One-time compute costs rather than per-token pricing
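
The hardware requirements mentioned at the start, and the effect of quantization listed above, can be estimated with simple arithmetic: holding the weights takes roughly (number of parameters) × (bits per parameter) / 8 bytes. The sketch below counts weights only; activations, the KV cache, and framework overhead come on top, so treat the results as lower bounds. The function name and the round 7-billion-parameter figure are illustrative.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to hold the model weights, in GB (10**9 bytes)."""
    if num_params <= 0 or bits_per_param <= 0:
        raise ValueError("num_params and bits_per_param must be positive")
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


# Compare common precisions for a hypothetical 7-billion-parameter model.
for label, bits in [("fp16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"7B @ {label}: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

This prints roughly 14.0, 7.0, and 3.5 GB respectively, which is why 4-bit quantization is often what lets a 7B-class model fit on a consumer GPU or laptop.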

Major open-source model families include:

- Llama/Llama 2: Meta’s powerful foundation models with a commercial-friendly license

- Mistral: Efficient models with strong performance despite smaller parameter counts

- Falcon: Training-efficient models with competitive results from TII

- Pythia: Research-oriented models with extensive documentation of their training methodology

These models can be deployed through frameworks such as Hugging Face Transformers, llama.cpp, or Ollama, which provide abstractions that simplify deployment while preserving the benefits of local control. Although they typically require more technical setup than API-based alternatives, open-source LLMs offer advantages in cost management for high-volume applications, data privacy, and customization for domain-specific needs.
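
As an illustration of the local route, Ollama serves a REST API on `http://localhost:11434` by default once it is installed and running. The sketch below calls its `/api/generate` endpoint with streaming disabled, using only the Python standard library to avoid extra dependencies (the official `ollama` Python package wraps the same API). It assumes a model has already been pulled; `llama3` in the usage note is a placeholder for whatever `ollama list` shows, and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_payload(model: str, prompt: str, temperature: float = 0.0) -> dict:
    """Request body for a single, non-streaming completion."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a stream of chunks
        "options": {"temperature": temperature},
    }


def ollama_generate(model: str, prompt: str, url: str = OLLAMA_URL,
                    timeout: float = 120.0) -> str:
    """POST the prompt to a running Ollama server and return the generated text."""
    data = json.dumps(build_generate_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With stream=False, the completion text is in the "response" field.
    return body["response"]
```

With the server running, `print(ollama_generate("llama3", "Explain quantization in one sentence."))` sends the prompt to the local model; no data leaves the machine.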

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.
