Shape primitive abstraction, which breaks down complex 3D shapes into simple, interpretable geometric elements, is fundamental to human visual perception and has important implications for computer vision and graphics. Recent methods in 3D generation use representations such as meshes, point clouds, and neural fields, and they have enabled high-fidelity content creation. However, they often lack the semantic depth and interpretability needed for tasks such as robotic manipulation or scene understanding. Traditionally, primitive abstraction has been tackled in one of two ways. Optimization-based methods fit geometric primitives to shapes but often over-segment them semantically. Learning-based methods train on small, category-specific datasets and thus generalize poorly. Early approaches used basic primitives such as cuboids and cylinders, which later evolved into more expressive forms such as superquadrics. A major challenge remains: designing methods that abstract shapes in a way that aligns with human cognition while also generalizing across diverse object categories.

Inspired by recent breakthroughs in 3D content generation using large datasets and auto-regressive transformers, the authors propose reframing shape abstraction as a generative task. Their approach does not rely on geometric fitting or direct parameter regression. Instead, it constructs primitive assemblies sequentially to mirror human reasoning. This design captures both semantic structure and geometric accuracy more effectively. Previous works in auto-regressive modeling, such as MeshGPT and MeshAnything, have shown strong results in mesh generation by treating 3D shapes as sequences and incorporating innovations such as compact tokenization and shape conditioning.

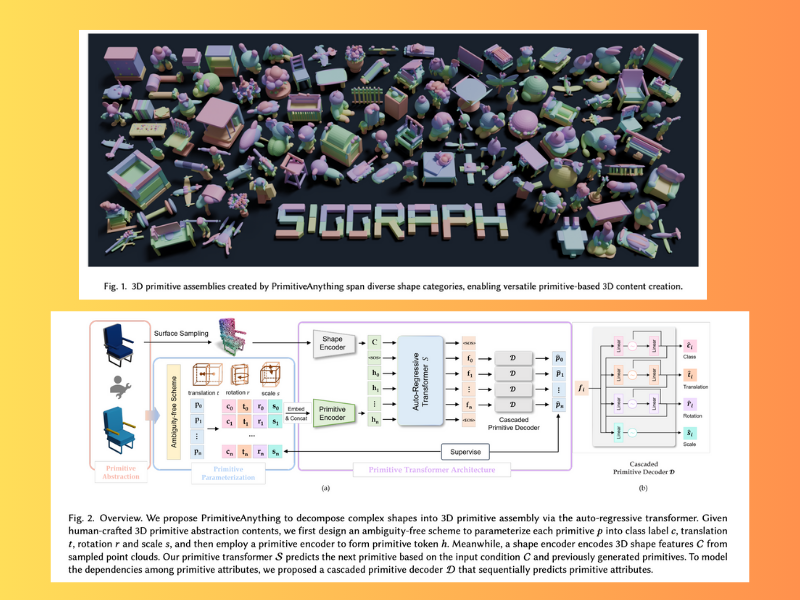

PrimitiveAnything is a framework developed by researchers from Tencent AIPD and Tsinghua University that redefines shape abstraction as a primitive assembly generation task. It introduces a decoder-only transformer conditioned on shape features to generate variable-length sequences of primitives. The framework uses a unified, ambiguity-free parameterization scheme that supports multiple primitive types while maintaining high geometric accuracy and learning efficiency. By learning directly from human-designed shape abstractions, PrimitiveAnything effectively captures how complex shapes are broken down into simpler components. Its modular design makes it easy to integrate new primitive types, and experiments show that it produces high-quality, perceptually aligned abstractions across diverse 3D shapes.
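
The loop below is a minimal sketch of generating a variable-length primitive sequence. It is not the authors' implementation. `Primitive`, `generate`, `EOS`, and `toy_model` are hypothetical names. `toy_model` stands in for the shape-conditioned transformer: it emits one cuboid per feature value and then signals the end of the sequence. Representing rotation as Euler angles is also an assumption made only for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple, Union

Vec3 = Tuple[float, float, float]


@dataclass
class Primitive:
    kind: str           # illustrative type label, e.g. "cuboid" or "cylinder"
    translation: Vec3
    rotation: Vec3      # Euler angles: an assumption made for this sketch
    scale: Vec3


EOS = object()  # hypothetical sentinel standing in for the end-of-sequence token

Step = Union[Primitive, object]


def generate(
    next_primitive: Callable[[Sequence[float], List[Primitive]], Step],
    shape_features: Sequence[float],
    max_len: int = 64,
) -> List[Primitive]:
    """Append one primitive per step, conditioned on the shape and all previous primitives."""
    assembly: List[Primitive] = []
    for _ in range(max_len):
        step = next_primitive(shape_features, assembly)
        if step is EOS:  # the model itself decides when the assembly is complete
            break
        assembly.append(step)
    return assembly


def toy_model(features: Sequence[float], history: List[Primitive]) -> Step:
    """Hypothetical stand-in for the transformer: one unit cuboid per feature value, then EOS."""
    if len(history) >= len(features):
        return EOS
    x = features[len(history)]
    return Primitive("cuboid", (x, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 1.0, 1.0))


parts = generate(toy_model, shape_features=[0.0, 1.5, 3.0])
print(len(parts), [p.translation[0] for p in parts])  # 3 [0.0, 1.5, 3.0]
```

The loop stops on the model's own end-of-sequence signal rather than after a fixed count. As a result, different shapes can receive different numbers of primitives, and `max_len` serves only as a safety cap.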

PrimitiveAnything is a framework that models 3D shape abstraction as a sequential generation task. It uses a discrete, ambiguity-free parameterization to represent the type, translation, rotation, and scale of each primitive. These attributes are encoded and fed into a transformer. The transformer predicts the next primitive from the previous ones and from shape features extracted from point clouds. A cascaded decoder models the dependencies between attributes, ensuring coherent generation. Training combines cross-entropy losses, Chamfer Distance for reconstruction accuracy, and Gumbel-Softmax for differentiable sampling. Generation continues auto-regressively until an end-of-sequence token signals completion, enabling flexible, human-like decomposition of complex 3D shapes.
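
The sketch below illustrates two ideas from this paragraph under stated assumptions. First, continuous attributes such as translation are discretized into a fixed number of bins. Second, Gumbel-Softmax turns a categorical prediction over those bins into a soft value that a reconstruction loss can use. The bin count (`N_BINS = 128`), the value ranges, and the "expected bin centre" decoding in `soft_decode` are illustrative assumptions. The article does not specify how PrimitiveAnything wires these pieces together. NumPy is used for readability, so no gradients are computed here. The same arithmetic written in an autodiff framework would be differentiable with respect to the logits.

```python
import numpy as np

N_BINS = 128  # hypothetical resolution; the article does not give the bin count


def quantize(x, lo, hi, n_bins=N_BINS):
    """Map continuous values in [lo, hi] to integer bin indices 0..n_bins-1."""
    x = np.clip(np.asarray(x, dtype=float), lo, hi)
    idx = np.floor((x - lo) / (hi - lo) * n_bins).astype(int)
    return np.minimum(idx, n_bins - 1)  # x == hi would otherwise land in bin n_bins


def dequantize(idx, lo, hi, n_bins=N_BINS):
    """Return the centre of each bin; the error is at most half a bin width."""
    return lo + (np.asarray(idx) + 0.5) * (hi - lo) / n_bins


def gumbel_softmax(logits, tau=1.0, rng=None):
    """Relaxed one-hot sample: softmax((logits + Gumbel noise) / tau)."""
    rng = np.random.default_rng() if rng is None else rng
    logits = np.asarray(logits, dtype=float)
    u = rng.uniform(1e-10, 1.0, size=logits.shape)  # lower bound avoids log(0)
    g = -np.log(-np.log(u))                         # standard Gumbel noise
    y = (logits + g) / tau                          # smaller tau -> closer to one-hot
    y -= y.max(axis=-1, keepdims=True)              # numerical stability
    w = np.exp(y)
    return w / w.sum(axis=-1, keepdims=True)


def soft_decode(logits, lo, hi, tau=1.0, rng=None):
    """Weighted average of bin centres under a Gumbel-Softmax sample (hypothetical decoding)."""
    logits = np.asarray(logits, dtype=float)
    n_bins = logits.shape[-1]
    centres = dequantize(np.arange(n_bins), lo, hi, n_bins)
    return gumbel_softmax(logits, tau, rng) @ centres


# Example: translations in [-1, 1] become discrete tokens and back.
t = np.array([-0.3, 0.0, 0.72])
tokens = quantize(t, -1.0, 1.0)
recovered = dequantize(tokens, -1.0, 1.0)
```

Discrete tokens let the transformer treat each attribute as a classification problem, which is where a cross-entropy loss applies. The soft decoding step is one way a geometric loss could still reach the predicted distribution.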

The researchers introduce HumanPrim, a large-scale dataset of 120K 3D samples with manually annotated primitive assemblies. They evaluate their method with metrics such as Chamfer Distance, Earth Mover’s Distance, Hausdorff Distance, Voxel-IoU, and segmentation scores (RI, VOI, SC). Compared to existing optimization- and learning-based methods, PrimitiveAnything shows superior performance and better alignment with human abstraction patterns. Ablation studies confirm the importance of each design component. The framework also supports 3D content generation from text or image inputs. It offers user-friendly editing, high modeling quality, and over 95% storage savings, making it well suited to efficient, interactive 3D applications.
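
The sketch below computes brute-force versions of three of the geometric metrics named above: Chamfer Distance, Hausdorff Distance, and Voxel-IoU. Conventions differ between papers. Some use squared rather than unsquared distances, and some sum rather than average the two Chamfer directions. The article does not say which variant the authors use, so treat this as one common formulation. Earth Mover’s Distance and the segmentation scores (RI, VOI, SC) are not shown. The pairwise distance matrix uses O(N·M) memory, which is fine only for small point clouds.

```python
import numpy as np


def _pairwise(a, b):
    """Euclidean distance matrix between point sets a (N, 3) and b (M, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def chamfer_distance(a, b):
    """Mean nearest-neighbour distance a->b plus b->a (one common, unsquared convention)."""
    d = _pairwise(a, b)
    return d.min(axis=1).mean() + d.min(axis=0).mean()


def hausdorff_distance(a, b):
    """Worst-case nearest-neighbour distance in either direction."""
    d = _pairwise(a, b)
    return max(d.min(axis=1).max(), d.min(axis=0).max())


def voxel_iou(occ_a, occ_b):
    """Intersection-over-union of two boolean occupancy grids of equal shape."""
    inter = np.logical_and(occ_a, occ_b).sum()
    union = np.logical_or(occ_a, occ_b).sum()
    return inter / union if union else 1.0


a = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
b = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
cd = chamfer_distance(a, b)    # 0 + (0 + 0 + 2) / 3 = 2/3
hd = hausdorff_distance(a, b)  # the extra point at x=3 is 2 units from a

g1 = np.zeros((4, 4, 4), dtype=bool)
g1[:2] = True   # 32 occupied voxels
g2 = np.zeros((4, 4, 4), dtype=bool)
g2[1:3] = True  # 32 occupied voxels, 16 shared with g1
iou = voxel_iou(g1, g2)  # 16 / 48 = 1/3
```

The example shows why papers report several metrics together. Chamfer Distance averages out a single stray point, while Hausdorff Distance is dominated by it.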

In conclusion, PrimitiveAnything is a new framework that approaches 3D shape abstraction as a sequence generation task. By learning from human-designed primitive assemblies, the model effectively captures intuitive decomposition patterns. It achieves high-quality results across diverse object categories, highlighting its strong generalization ability. The method also supports flexible 3D content creation using primitive-based representations. Thanks to its efficiency and lightweight structure, PrimitiveAnything is well suited to enabling user-generated content in applications such as games, where both performance and ease of manipulation matter.

Sana Hassan, a consulting intern at MarkTechPost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.