Researchers have raised concerns about hallucinations in LLMs, which generate plausible but inaccurate or unrelated content. However, these hallucinations hold potential in creativity-driven fields such as drug discovery, where innovation is essential. LLMs have been widely applied in scientific domains such as materials science, biology, and chemistry, helping with tasks like molecular description and drug design. While traditional models like MolT5 offer domain-specific accuracy, LLMs often produce hallucinated outputs when not fine-tuned. Despite their lack of factual consistency, such outputs can provide valuable insights, such as high-level molecular descriptions and potential compound applications, thereby supporting exploratory processes in drug discovery.

Drug discovery, a costly and time-consuming process, involves evaluating vast chemical spaces and identifying novel solutions to biological challenges. Previous studies have used machine learning and generative models to assist in this field, with researchers exploring the integration of LLMs into molecular design, dataset curation, and prediction tasks. Hallucinations in LLMs, often considered a drawback, can mimic creative processes by recombining knowledge to generate new ideas. This perspective aligns with the role of creativity in innovation, exemplified by groundbreaking accidental discoveries such as penicillin. By leveraging hallucinated insights, LLMs could advance drug discovery by identifying molecules with unique properties and fostering high-level innovation.

Researchers from ScaDS.AI and Dresden University of Technology hypothesize that hallucinations can improve LLM performance in drug discovery. Using seven instruction-tuned LLMs, including GPT-4o and Llama-3.1-8B, they incorporated hallucinated natural language descriptions of molecules’ SMILES strings into prompts for classification tasks. The results confirmed their hypothesis, with Llama-3.1-8B achieving an 18.35% ROC-AUC improvement over the baseline. Larger models and Chinese-generated hallucinations demonstrated the biggest gains. Analyses revealed that hallucinated text provides unrelated yet insightful information that aids predictions. This study highlights the potential of hallucinations in pharmaceutical research and offers new perspectives on using LLMs for innovative drug discovery.

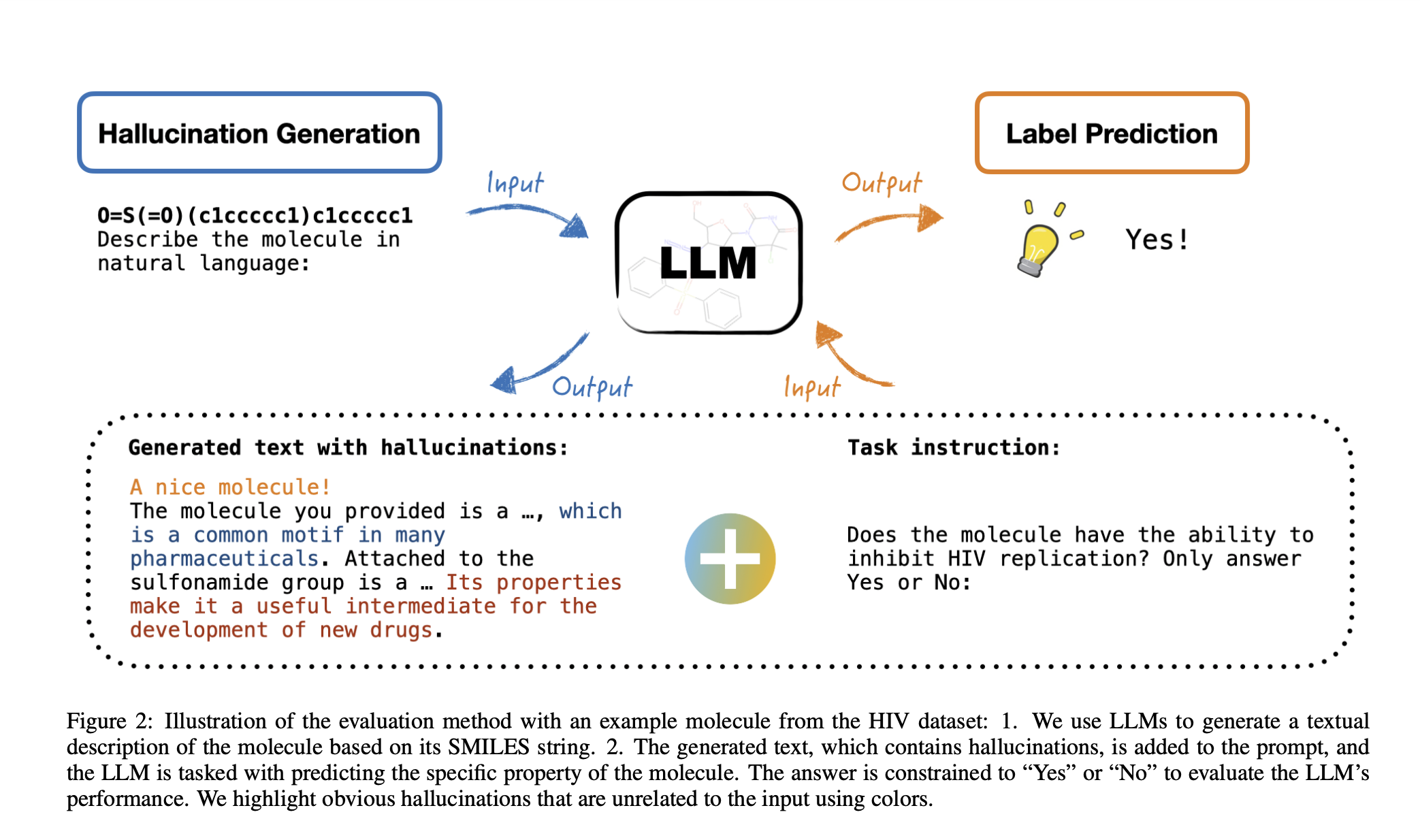

To generate hallucinations, SMILES strings of molecules are translated into natural language using a standardized prompt in which the system is defined as an “expert in drug discovery.” The generated descriptions are evaluated for factual consistency using the HHEM-2.1-Open model, with MolT5-generated text as the reference. The results show low factual consistency across LLMs, with ChemLLM scoring 20.89% and the others averaging 7.42–13.58%. Drug discovery tasks are formulated as binary classification problems that predict specific molecular properties via next-token prediction. Prompts include SMILES, descriptions, and task instructions, with models restricted to outputting “yes” or “no” based on the highest probability.
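The prompt-and-decision step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' code: the function names `build_prompt`, `yes_probability`, and `predict`, the prompt wording, and the example logits are all hypothetical, and a real run would read the next-token logits for “yes” and “no” from the model being evaluated.

```python
import math
from typing import Optional


def build_prompt(smiles: str, task_instruction: str,
                 description: Optional[str] = None) -> str:
    """Assemble a classification prompt (wording is an assumption)."""
    parts = [f"SMILES: {smiles}"]
    if description:
        # Optional natural language description, e.g. a hallucinated one.
        parts.append(f"Description: {description}")
    parts.append(f"Question: {task_instruction}")
    parts.append("Answer with yes or no.")
    return "\n".join(parts)


def yes_probability(logit_yes: float, logit_no: float) -> float:
    """Softmax restricted to the two allowed answer tokens."""
    m = max(logit_yes, logit_no)  # subtract the max for numerical stability
    e_yes = math.exp(logit_yes - m)
    e_no = math.exp(logit_no - m)
    return e_yes / (e_yes + e_no)


def predict(logit_yes: float, logit_no: float) -> str:
    """Return whichever of the two answers has the higher probability."""
    return "yes" if yes_probability(logit_yes, logit_no) >= 0.5 else "no"


# Hypothetical usage: ethanol's SMILES with an invented description and logits.
prompt = build_prompt("CCO", "Is this molecule toxic?",
                      description="A small alcohol used as a solvent.")
print(prompt)
print(predict(logit_yes=-1.0, logit_no=3.0))
```

Keeping the continuous `yes_probability` value, rather than only the hard “yes”/“no” answer, is what makes a threshold-free metric such as ROC-AUC computable downstream.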

The study examines how hallucinations generated by different LLMs affect performance on molecular property prediction tasks. Experiments use a standardized prompt format to compare predictions based on SMILES strings alone, SMILES with MolT5-generated descriptions, and SMILES with hallucinated descriptions from various LLMs. Five MoleculeNet datasets were analyzed using ROC-AUC scores. The results show that hallucinations generally improve performance over the SMILES-only and MolT5 baselines, with GPT-4o achieving the highest gains. Larger models benefit more from hallucinations, but improvements plateau beyond 8 billion parameters. Temperature settings affect hallucination quality, with intermediate values providing the best performance improvements.
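For readers unfamiliar with the metric, the comparison between prompt variants can be illustrated with a small pure-Python ROC-AUC. This is a sketch under assumptions, not the study's evaluation code: the `roc_auc` helper is hypothetical, and the labels and scores below are invented toy values, not results from the paper. ROC-AUC is computed here as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Pairwise (Mann-Whitney) formulation of ROC-AUC for binary labels."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


# Toy data (invented): 1 = molecule has the property, 0 = it does not.
labels = [1, 0, 1, 0]
# Hypothetical "yes" probabilities from two prompt variants.
smiles_only = [0.6, 0.7, 0.4, 0.3]
with_description = [0.8, 0.4, 0.6, 0.3]

auc_base = roc_auc(labels, smiles_only)
auc_desc = roc_auc(labels, with_description)
print(f"SMILES only:      {auc_base:.2f}")
print(f"With description: {auc_desc:.2f}")
print(f"Difference:       {auc_desc - auc_base:+.2f}")
```

The quadratic pairwise loop is chosen for readability; on datasets of realistic size, a rank-based implementation from an established library would be the more practical choice.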

In conclusion, the study explores the potential benefits of hallucinations in LLMs for drug discovery tasks. Hypothesizing that hallucinations can enhance performance, the research evaluates seven LLMs across five datasets using hallucinated molecular descriptions integrated into prompts. The results confirm that hallucinations improve LLM performance compared to baseline prompts without hallucinations. In particular, Llama-3.1-8B achieved an 18.35% ROC-AUC gain. GPT-4o-generated hallucinations provided consistent improvements across models. The results reveal that larger models generally benefit more from hallucinations, while factors such as generation temperature have minimal impact. The study highlights the creative potential of hallucinations in AI and encourages further exploration of applications in drug discovery.

Check out the paper. All credit for this research goes to the researchers in this project.


Sana Hassan, a consulting intern at MarkTechPost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
